Data Minimization and Purpose Limitation

Data minimization is a foundational principle of modern privacy frameworks, and it works hand in hand with purpose limitation: organizations collect only the data they need for a specified purpose and use it only for that purpose. Rather than accumulating information in case it proves useful later, they decide in advance what each data point is for. This discipline shrinks the attack surface for potential breaches and respects individuals' control over their personal information. When companies limit collection to what is essential, they comply with regulations and also respect their users' digital boundaries.

Operationalizing this principle requires deliberate design of data collection workflows. Before launching a new initiative, organizations should map out their information needs and document exactly why each piece of data is required. These practices build trust and reduce the legal and reputational risks associated with data hoarding. Ethical companies also audit their data stores regularly and purge information that is no longer needed, because data kept without a purpose becomes a liability.
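The documentation and audit steps can be made concrete with a small purpose registry. The sketch below is illustrative only: it assumes each stored field is mapped to a written purpose in a plain dictionary, and `PURPOSE_REGISTRY`, `minimize`, `undocumented_fields`, `purge_expired`, and the `collected_at` field are hypothetical names, not part of any standard or product.

```python
from datetime import datetime, timedelta

# Hypothetical purpose registry: every field the system may store is mapped to
# the documented reason it is needed. Field names and purposes are illustrative.
PURPOSE_REGISTRY: dict[str, str] = {
    "email": "account login and password reset",
    "shipping_address": "order fulfilment",
    "order_total": "billing",
}


def minimize(record: dict[str, object], registry: dict[str, str] = PURPOSE_REGISTRY) -> dict[str, object]:
    """Keep only fields that have a documented purpose; drop everything else."""
    return {name: value for name, value in record.items() if name in registry}


def undocumented_fields(record: dict[str, object], registry: dict[str, str] = PURPOSE_REGISTRY) -> set[str]:
    """Fields an audit would flag: present in the data but with no recorded purpose."""
    return set(record) - set(registry)


def purge_expired(records: list[dict[str, object]], retention: timedelta, now: datetime) -> list[dict[str, object]]:
    """Drop stored records older than the retention period (assumes a 'collected_at' datetime)."""
    return [r for r in records if now - r["collected_at"] <= retention]


# Usage: a sign-up form that also captured a birth date no purpose requires.
raw = {"email": "a@example.com", "shipping_address": "1 Main St", "birth_date": "1990-01-01"}
print(minimize(raw))             # {'email': 'a@example.com', 'shipping_address': '1 Main St'}
print(undocumented_fields(raw))  # {'birth_date'}
```

Filtering at the point of collection means the undocumented field is never stored, while `undocumented_fields` and `purge_expired` play the role of the periodic audit over data that already exists.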

Data Security Measures and Protocols

Modern data protection demands a defense-in-depth strategy: several independent layers of protection, so that the failure of one control does not expose the data. Encryption renders sensitive information unreadable to anyone without the decryption key, both in transit and at rest, while granular access controls ensure that only authenticated and authorized personnel can view protected data. The most secure organizations pair these measures with continuous monitoring systems that detect anomalies in real time.
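Encryption itself is best delegated to a vetted cryptography library, so this sketch covers only the access-control and monitoring layers. It assumes a simple role-based model with deny-by-default checks and an in-memory log; `ROLE_PERMISSIONS`, `User`, `request_access`, and `flag_repeated_denials` are hypothetical names, and counting repeated denials is only a crude stand-in for real anomaly detection.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; role and permission names are illustrative.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "support_agent": frozenset({"read:contact_info"}),
    "billing_analyst": frozenset({"read:contact_info", "read:payment_data"}),
}


@dataclass
class User:
    name: str
    roles: set[str]
    authenticated: bool = False


def can_access(user: User, permission: str) -> bool:
    """Deny by default: require authentication and at least one role granting the permission."""
    if not user.authenticated:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in user.roles)


# Every decision is logged so a monitoring job can review it later.
access_log: list[tuple[str, str, bool]] = []


def request_access(user: User, permission: str) -> bool:
    allowed = can_access(user, permission)
    access_log.append((user.name, permission, allowed))
    return allowed


def flag_repeated_denials(log: list[tuple[str, str, bool]], threshold: int = 3) -> set[str]:
    """Crude anomaly signal: users with at least `threshold` denied requests."""
    denials = Counter(name for name, _, allowed in log if not allowed)
    return {name for name, count in denials.items() if count >= threshold}


alice = User("alice", {"support_agent"}, authenticated=True)
print(request_access(alice, "read:contact_info"))  # True
for _ in range(3):
    request_access(alice, "read:payment_data")     # denied: role lacks the permission
print(flag_repeated_denials(access_log))           # {'alice'}
```

Separating authentication (is this really Alice?) from authorization (may Alice's roles see this data?) keeps each check simple, and logging every decision gives the monitoring layer something to analyze.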

Beyond technical controls, human factors play a crucial role in security posture. Comprehensive training programs turn employees into vigilant guardians of data, while simulated phishing tests reinforce security awareness. When breaches occur, organizations with well-rehearsed incident response plans can contain damage more effectively while maintaining stakeholder confidence. These plans should detail communication protocols, forensic investigation procedures, and remediation steps to restore normal operations securely.

Transparency and Accountability

Clear communication about data practices builds trust between organizations and the individuals whose information they steward. Privacy notices written in plain language empower people to make informed choices about sharing their data. Some companies also publish transparency reports that detail government requests for user data and other disclosures that might affect user privacy.

Accountability mechanisms create internal checks on data handling. Designated data protection officers oversee compliance programs, and privacy impact assessments evaluate new projects before launch. When organizations assign clear ownership of privacy responsibilities and conduct regular audits, they demonstrate a genuine commitment to ethical data stewardship. Accountability also means maintaining channels through which individuals can access, correct, or delete their information, rights that modern privacy laws such as the EU's General Data Protection Regulation (GDPR) guarantee.

Bias and Fairness in AI Algorithms

Understanding Algorithmic Bias

AI systems often mirror the imperfections of their training data and creators' blind spots. Historical hiring algorithms, for instance, have inadvertently penalized applicants from certain universities based on patterns in past biased decisions. These embedded prejudices become particularly dangerous when deployed at scale, potentially affecting millions of lives through automated decisions.

Recognizing these patterns requires diverse teams examining models through multiple cultural lenses. Some organizations now employ red teams to deliberately stress-test systems for discriminatory outcomes before deployment. This proactive scrutiny helps surface hidden assumptions that could lead to unequal treatment of protected groups.
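One concrete form of this stress-testing is a counterfactual flip test: re-score each case with only the protected attribute changed and flag any decision that flips. The sketch below assumes the model is exposed as a plain Python callable returning an accept/reject decision; `counterfactual_flip_test`, `toy_model`, and the `group` and `years_experience` fields are hypothetical, and the toy model is deliberately biased so the test has something to find.

```python
from collections.abc import Callable, Iterable, Mapping, Sequence

Applicant = Mapping[str, object]


def counterfactual_flip_test(
    model: Callable[[Applicant], bool],
    applicants: Iterable[Applicant],
    attribute: str,
    values: Sequence[object],
) -> list[Applicant]:
    """Return applicants whose decision changes when only `attribute` is varied."""
    flagged = []
    for applicant in applicants:
        # Re-score the same applicant once per attribute value, holding all else fixed.
        outcomes = {model({**applicant, attribute: value}) for value in values}
        if len(outcomes) > 1:
            flagged.append(applicant)
    return flagged


# A deliberately flawed toy model that penalizes group "B" directly.
def toy_model(applicant: Applicant) -> bool:
    score = applicant["years_experience"] * 10
    if applicant["group"] == "B":
        score -= 15
    return score >= 50


applicants = [
    {"years_experience": 5, "group": "A"},
    {"years_experience": 7, "group": "B"},
    {"years_experience": 2, "group": "A"},
]
print(counterfactual_flip_test(toy_model, applicants, "group", ["A", "B"]))
# [{'years_experience': 5, 'group': 'A'}]
```

A flip test only catches direct use of the attribute. A model that relies on proxies such as postcode or school can pass it and still treat groups unequally, which is why it complements group-level outcome metrics rather than replacing them.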

Mitigating Bias in Data

Thoughtful data curation can dramatically improve algorithmic fairness. Researchers now employ techniques like stratified sampling to ensure adequate representation across demographic groups in training datasets. Some teams supplement real-world data with synthetic examples that address representation gaps while preserving privacy.
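Stratified sampling splits the data into groups (strata) and samples from each one separately, so small groups cannot be crowded out by large ones. The sketch below uses equal allocation, drawing the same number of records per group, which is one of several allocation schemes; `stratified_sample` and the record layout are hypothetical. It also reports shortfalls, the groups too small to fill their quota, which are exactly the representation gaps that additional collection or synthetic examples would need to address.

```python
import random
from collections import Counter, defaultdict
from collections.abc import Callable, Hashable
from typing import TypeVar

T = TypeVar("T")


def stratified_sample(
    records: list[T], key: Callable[[T], Hashable], per_group: int, seed: int = 0
) -> tuple[list[T], dict[Hashable, int]]:
    """Equal-allocation stratified sample: draw `per_group` records from each stratum.

    Strata smaller than `per_group` are kept whole and their shortfall is reported.
    """
    rng = random.Random(seed)  # seeded so the sample is reproducible
    strata: dict[Hashable, list[T]] = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)

    sample: list[T] = []
    shortfalls: dict[Hashable, int] = {}
    for group, members in strata.items():
        if len(members) < per_group:
            shortfalls[group] = per_group - len(members)
            sample.extend(members)
        else:
            sample.extend(rng.sample(members, per_group))  # without replacement
    return sample, shortfalls


# Usage: group "B" makes up only 10% of the raw data.
records = [{"id": i, "group": "A"} for i in range(90)] + [{"id": i, "group": "B"} for i in range(90, 100)]
sample, shortfalls = stratified_sample(records, key=lambda r: r["group"], per_group=20)
print(Counter(r["group"] for r in sample))  # Counter({'A': 20, 'B': 10})
print(shortfalls)                           # {'B': 10}
```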

The most innovative approaches combine technical solutions with community engagement, consulting affected populations during dataset creation. This participatory design helps surface edge cases and use scenarios that might otherwise be overlooked by homogeneous development teams.

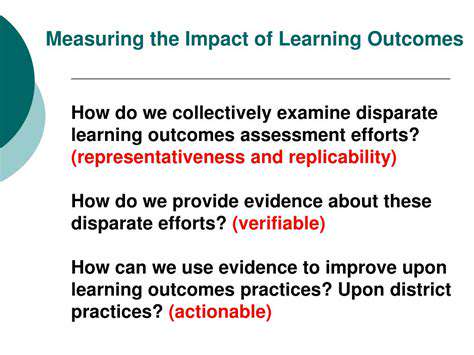

Fairness Evaluation and Metrics

Measuring algorithmic fairness requires multiple quantitative and qualitative assessments. Statistical parity metrics compare the rate of favorable outcomes across groups, while individual fairness tests check whether similar cases receive similar treatment. More sophisticated auditing frameworks track these measures across different decision thresholds and population segments over time, because a model that looks fair at one cutoff can show large disparities at another.
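Two common statistical parity measures can be computed directly from model scores and group labels: the statistical parity difference (highest group selection rate minus lowest) and the disparate impact ratio (lowest rate divided by highest). This is a minimal sketch assuming a single score per case and a fixed threshold; the function names and the toy scores are hypothetical, and individual fairness, which needs a similarity measure between cases, is not shown.

```python
from collections import defaultdict


def selection_rates(scores: list[float], groups: list[str], threshold: float) -> dict[str, float]:
    """Share of each group whose score meets the decision threshold."""
    if len(scores) != len(groups):
        raise ValueError("scores and groups must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for score, group in zip(scores, groups):
        totals[group] += 1
        if score >= threshold:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_metrics(rates: dict[str, float]) -> tuple[float, float]:
    """Statistical parity difference (max - min rate) and disparate impact ratio (min / max)."""
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0  # no group selected: treat as parity
    return highest - lowest, ratio


scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.6, 0.5]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
# Sweep several thresholds, since disparities can shift with the cutoff.
for threshold in (0.5, 0.7):
    rates = selection_rates(scores, groups, threshold)
    difference, ratio = parity_metrics(rates)
    print(threshold, rates, round(difference, 2), round(ratio, 2))
# 0.5 {'A': 0.75, 'B': 0.5} 0.25 0.67
# 0.7 {'A': 0.75, 'B': 0.0} 0.75 0.0
```

In this toy data, raising the cutoff from 0.5 to 0.7 turns a moderate gap into a complete exclusion of group B. A disparate impact ratio below 0.8 is often compared against the "four-fifths rule" used in US employment-selection guidelines, though that rule is a screening heuristic rather than a definition of fairness.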

Some organizations have begun publishing fairness report cards that disclose performance disparities transparently. These reports often include plans for addressing identified gaps, creating accountability for continuous improvement in algorithmic equity.

Addressing Bias in AI Development Practices

Building fair AI systems requires institutionalizing ethical considerations throughout the development lifecycle. Some companies now mandate bias impact statements for new AI projects, similar to environmental impact assessments. Cross-functional ethics review boards provide crucial oversight, ensuring diverse perspectives inform system design.

Post-deployment monitoring completes the fairness lifecycle, with ongoing performance tracking across user demographics. When disparities emerge, responsible organizations implement mitigation strategies ranging from model retraining to temporary system rollbacks. This commitment to continuous evaluation and improvement reflects the evolving nature of fairness in complex sociotechnical systems.
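Post-deployment tracking can be as simple as computing a quality metric per demographic group for each time window and raising an alert when the gap between groups exceeds a tolerance. The sketch below assumes ground-truth outcomes eventually become available and uses accuracy as the metric; `Prediction`, `check_window`, and the `max_gap` tolerance are hypothetical choices, and a real deployment would also need minimum group sizes, since small groups give noisy estimates.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    group: str       # demographic segment the monitoring is broken down by
    predicted: bool  # model output
    actual: bool     # ground-truth outcome observed after deployment


def group_accuracy(window: list[Prediction]) -> dict[str, float]:
    """Fraction of correct predictions within each group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for p in window:
        total[p.group] += 1
        correct[p.group] += int(p.predicted == p.actual)
    return {group: correct[group] / total[group] for group in total}


def check_window(window: list[Prediction], max_gap: float) -> tuple[dict[str, float], float, bool]:
    """Per-group accuracy, the largest gap between groups, and whether it breaches `max_gap`."""
    accuracy = group_accuracy(window)
    if not accuracy:
        return accuracy, 0.0, False
    gap = max(accuracy.values()) - min(accuracy.values())
    return accuracy, gap, gap > max_gap


week = [
    Prediction("A", True, True), Prediction("A", False, False),
    Prediction("A", True, True), Prediction("A", True, False),
    Prediction("B", True, False), Prediction("B", False, True),
    Prediction("B", True, True), Prediction("B", False, False),
]
accuracy, gap, alert = check_window(week, max_gap=0.1)
print(accuracy, gap, alert)  # {'A': 0.75, 'B': 0.5} 0.25 True
```

The alert only signals that a disparity has emerged; deciding whether to retrain, adjust thresholds, or roll the system back remains a human judgment.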

The Role of Educators and Policymakers in Ethical AI Integration

The Crucial Role of Educators in Shaping Future Generations

Modern educators serve as guides through an increasingly digital landscape, helping students navigate both the opportunities and ethical dilemmas posed by emerging technologies. They cultivate essential digital literacy skills while fostering critical thinking about technology's societal impacts. In AI education, the most effective teachers balance technical instruction with philosophical discussions about fairness, accountability, and human values.

Progressive curricula now include hands-on experiences with algorithmic bias, allowing students to see how data choices affect model outcomes. By making these abstract concepts tangible, educators prepare students to be both skilled users and ethical shapers of technological systems. This dual focus creates a generation capable of harnessing technology's benefits while mitigating its risks.

The Imperative of Policymakers in Creating Equitable Education Systems

Visionary education policies must address both access to technology and protection from its potential harms. Some jurisdictions now mandate AI literacy components in standard curricula while providing funding for teacher professional development in these emerging areas. Forward-thinking legislation also establishes guardrails around educational uses of AI, ensuring tools enhance rather than replace human instruction.

Equity-focused policies recognize the digital divide's persistent effects, allocating resources to ensure all students can participate in technology-enhanced learning. Some innovative programs provide not just devices and connectivity, but also mentorship in digital citizenship. These comprehensive approaches acknowledge that true educational equity in the digital age requires both technological access and the skills to use it responsibly.

Policy frameworks are evolving to keep pace with technological change through regular review cycles and stakeholder engagement processes. Some education agencies now include technologists, ethicists, and youth representatives in advisory committees, ensuring diverse perspectives inform policy decisions. This collaborative approach helps create resilient systems that can adapt to future technological shifts while protecting core educational values.