Ethical Considerations in AI Development

Deploying artificial intelligence in assessment contexts raises ethical questions that need attention throughout the technology's lifecycle. Foremost among them is the need to promote fairness and reduce bias in algorithmic processes. Systems trained on skewed datasets risk amplifying existing social disparities, potentially leading to discriminatory outcomes in academic evaluation, employment screening, and other high-stakes areas. Mitigating these risks demands rigorous data selection procedures, thoughtful algorithm design, and continuous performance monitoring.
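
As one illustration of what continuous performance monitoring might involve, the sketch below compares pass rates across groups and reports the ratio of the lowest rate to the highest. The group labels, the pass/fail encoding, and the function names are all hypothetical, and a single ratio like this is only a rough screening signal, not a complete fairness audit.

```python
from collections import defaultdict


def pass_rates_by_group(records):
    """Return the fraction of passing outcomes for each group.

    `records` is an iterable of (group, passed) pairs; the group labels
    and the pass/fail encoding are illustrative assumptions.
    """
    totals = defaultdict(int)
    passes = defaultdict(int)
    for group, passed in records:
        totals[group] += 1
        if passed:
            passes[group] += 1
    return {group: passes[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest group pass rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group passed at all, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical outcomes from an automated screening step.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = pass_rates_by_group(sample)
ratio = disparity_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0, as in this made-up sample, would be a prompt to examine the training data and scoring logic more closely rather than proof of discrimination on its own.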

Another significant ethical challenge involves ensuring system transparency and explainability. Many advanced AI models, particularly those utilizing deep learning architectures, function as opaque systems whose decision-making processes remain difficult to interpret. This lack of clarity can undermine confidence in the technology and complicate efforts to identify and correct problematic patterns. The development of explainable AI methodologies represents an essential step toward building public trust and maintaining accountability in automated assessment systems.

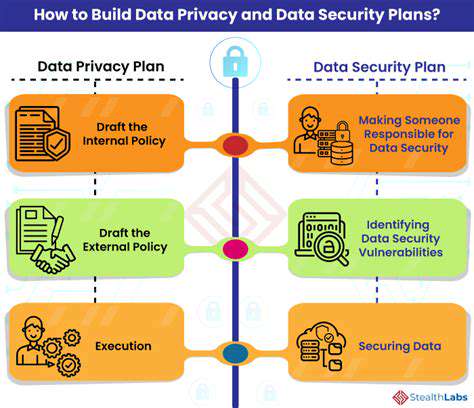

Data Privacy and Security in the AI Age

The growing dependence on automated assessment technologies calls for robust data protection protocols. AI systems typically require access to extensive datasets for training and operation, raising important questions about how sensitive information is safeguarded. Comprehensive security measures that prevent unauthorized access and misuse are a fundamental requirement, as is compliance with applicable privacy regulations such as the European Union's General Data Protection Regulation (GDPR).

Equally important is ensuring the security of the AI systems themselves. Potential vulnerabilities in these platforms could be exploited by malicious actors seeking to manipulate evaluation outcomes, with potentially serious consequences in educational and professional contexts. Establishing rigorous testing procedures and validation protocols represents a critical safeguard against these threats.

The Impact of AI on Employment and the Workforce

The automation capabilities of AI technology have generated concerns about workforce displacement across multiple sectors. While these systems can dramatically improve productivity and operational efficiency, they may simultaneously reduce demand for certain types of professional roles. Preparing workers for the evolving demands of technology-driven economies has become an essential priority, requiring significant investment in retraining initiatives and skill development programs.

The transition to increasingly automated work environments demands thoughtful consideration of socioeconomic impacts. Policy frameworks that support workforce adaptation and create new employment opportunities will prove essential for ensuring equitable transitions in affected industries.

The Need for Regulation and Governance in AI

The rapid evolution of AI technologies calls for proactive regulatory approaches. Establishing clear standards and guidelines is necessary to ensure responsible development and implementation of these systems. International cooperation among policymakers is particularly crucial for developing consistent, globally applicable regulatory frameworks, preventing the emergence of fragmented or contradictory standards across jurisdictions.

Effective regulations should address multiple aspects of AI implementation, including data protection, algorithmic fairness, and system safety. The creation of independent oversight bodies can help maintain compliance and ensure accountability across the AI development lifecycle.

Societal and Cultural Implications of AI

Artificial intelligence possesses transformative potential that extends to fundamental aspects of human society and culture. Its influence on communication patterns, entertainment consumption, and social interaction continues to grow at an accelerating pace. Understanding the broader societal consequences of AI adoption has become an urgent priority for navigating both the opportunities and challenges presented by these technologies.

Careful examination of AI's impact on social structures, cultural norms, and human values remains essential for responsible implementation. Ensuring equitable access to AI technologies and their benefits represents a critical consideration for maintaining social cohesion during periods of technological transition.

The Role of Human Oversight and Collaboration

Ensuring Accuracy and Fairness

Automated evaluation systems, while offering substantial efficiency benefits, continue to require human supervision to maintain accuracy and fairness in assessment. Human readers can recognize subtle elements of writing style, argument development, and conceptual understanding that may elude algorithmic detection. This oversight is essential for addressing potential biases in the evaluation process and for verifying that scores reflect student comprehension rather than rewarding text that merely matches predetermined patterns.

For instance, a student's distinctive voice and perspective might not be fully appreciated by an algorithm, whereas a human evaluator can properly value these creative and insightful contributions. Additionally, human oversight facilitates the identification and correction of training data anomalies, preventing the perpetuation of flawed or prejudiced judgments.

Identifying Complex Reasoning and Critical Thinking

Automated systems typically excel at identifying basic grammatical structures and vocabulary usage but often struggle to evaluate more sophisticated aspects of intellectual engagement, including nuanced argumentation, complex reasoning, and information synthesis. Human evaluation remains indispensable for assessing the depth of understanding and quality of analytical thinking demonstrated in student work.

Human readers can analyze the logical progression of arguments, evaluate evidentiary support, and assess the writer's engagement with alternative perspectives. This evaluation of higher-order cognitive skills constitutes a fundamental component of effective education that cannot be entirely replicated by automated systems.

Addressing Contextual Understanding

Effective essay evaluation frequently requires nuanced interpretation of contextual elements that extend beyond simple factual accuracy. Human readers can discern authorial intent, appreciate cultural references, and understand personal perspectives in ways that current algorithms typically cannot. These limitations in automated systems may lead to incomplete or inaccurate assessments of student work.

For example, proper evaluation of historical analysis or literary interpretation requires contextual understanding that remains challenging for automated systems to achieve. Human oversight ensures that assessments properly account for these important contextual factors.

Facilitating Feedback and Personalized Learning

Human involvement proves invaluable in providing comprehensive student feedback. While automated systems can identify grammatical and structural issues, human evaluators offer more nuanced insights regarding student strengths, weaknesses, and development opportunities. This personalized guidance plays a crucial role in fostering individual academic growth.

Human educators can customize feedback to address specific student needs, providing targeted recommendations that enhance subject matter comprehension. This tailored approach represents a fundamental component of effective personalized learning strategies.

Balancing Efficiency and Depth of Analysis

While automated scoring systems provide significant efficiency advantages, maintaining an appropriate balance between speed and analytical depth remains essential for quality assessment. Human oversight ensures that evaluation processes properly consider the complexities and subtleties of student work, extending beyond superficial characteristics.

Human evaluators can provide holistic assessments that account for overall comprehension, argument quality, and clarity of expression. This balanced approach keeps automated tools in a supporting role rather than letting them replace human judgment.
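
One way to picture this division of labor is a triage step that accepts automated scores only when the system reports high confidence and sends everything else to a human reader. The sketch below is a minimal illustration under stated assumptions: the `AutoScore` record, the 0-6 score scale, the confidence field, and the 0.8 threshold are all hypothetical and not drawn from any particular scoring product.

```python
from dataclasses import dataclass


@dataclass
class AutoScore:
    essay_id: str
    score: float       # automated score on a hypothetical 0-6 scale
    confidence: float  # assumed self-reported confidence in [0, 1]


def split_for_review(results, min_confidence=0.8):
    """Separate scores that can stand from those needing a human read."""
    accepted, needs_human = [], []
    for result in results:
        if result.confidence >= min_confidence:
            accepted.append(result)
        else:
            needs_human.append(result)
    return accepted, needs_human


# Hypothetical batch of automated results.
batch = [
    AutoScore("essay-1", 4.5, 0.95),
    AutoScore("essay-2", 3.0, 0.55),
    AutoScore("essay-3", 5.0, 0.80),
]
accepted, needs_human = split_for_review(batch)
print([r.essay_id for r in needs_human])
```

Where the threshold sits is a policy choice: a higher value sends more work to human readers in exchange for fewer unreviewed automated scores.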

Training and Development of AI Systems

The refinement of AI-based evaluation systems depends heavily on human expertise and feedback. Human readers play a critical role in assessing the accuracy and fairness of the training data used to develop these algorithms. Repeating that review as the data and models change helps systems learn effectively from examples while avoiding problematic biases.

Through careful analysis of system outputs and provision of constructive critique, human evaluators contribute to algorithm refinement, leading to progressively more accurate and reliable automated assessment capabilities.
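
A simple way to analyze system outputs against human judgment is to measure how often the two agree beyond what chance alone would produce. The sketch below computes Cohen's kappa for paired human and automated scores; the score lists are invented for illustration, and this unweighted form treats every disagreement as equally serious, which may not suit ordinal score scales.

```python
from collections import Counter


def cohens_kappa(human, machine):
    """Chance-corrected agreement between two equal-length lists of labels."""
    if len(human) != len(machine) or not human:
        raise ValueError("score lists must be non-empty and the same length")
    n = len(human)
    # Share of essays where both raters gave the same score.
    observed = sum(h == m for h, m in zip(human, machine)) / n
    human_counts = Counter(human)
    machine_counts = Counter(machine)
    # Agreement expected if each rater assigned scores independently.
    expected = sum(
        human_counts[label] * machine_counts[label] for label in human_counts
    ) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical scores for six essays.
human_scores = [3, 4, 4, 2, 5, 3]
machine_scores = [3, 4, 3, 2, 5, 4]
kappa = cohens_kappa(human_scores, machine_scores)
print(round(kappa, 3))
```

Tracking a statistic like this across successive model versions gives reviewers a concrete signal of whether refinements are bringing automated scores closer to human judgment.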

Maintaining Ethical Considerations

As AI-based evaluation systems become more prevalent, ethical considerations assume increasing importance. Human oversight proves essential for ensuring fairness, transparency, and accountability in assessment processes. Biases in training data can produce unfair or inaccurate evaluations, requiring human intervention for proper mitigation.

Additionally, protecting student privacy and confidentiality remains paramount. Human oversight helps ensure responsible handling of student work and prevents potential misuse of sensitive information.