Mastering Time Series Forecasting with Statistical Models

Decoding Time Series Data Patterns

Time series data, observations recorded in time order, forms the backbone of much of modern analytics. A typical series combines several components: a long-run trend, seasonal fluctuations that repeat at a fixed period, longer cycles without a fixed period, and random noise. By examining historical records, analysts can separate these systematic components from the noise and build predictive models on them. The real skill lies in distinguishing meaningful patterns from mere statistical noise.
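To make the components concrete, here is a minimal sketch of classical additive decomposition (observation = trend + seasonal + remainder) in plain Python. It estimates the trend with a centered moving average and the seasonal pattern by averaging the detrended values at each position in the cycle. The function names are illustrative, and the sketch assumes an even seasonal period and at least two full cycles of data; libraries such as statsmodels offer more complete routines.

```python
def centered_moving_average(y, period):
    """2 x period centered moving average; None where the window does not fit."""
    if period % 2:
        raise ValueError("this sketch only handles even seasonal periods")
    half = period // 2
    trend = [None] * len(y)
    for t in range(half, len(y) - half):
        window = y[t - half : t + half + 1]  # period + 1 points
        # The two end points get half weight so the average stays centered on t.
        trend[t] = (sum(window[1:-1]) + 0.5 * (window[0] + window[-1])) / period
    return trend


def decompose_additive(y, period):
    """Split y into (trend, seasonal, remainder) lists of the same length."""
    trend = centered_moving_average(y, period)
    # Collect detrended values by position in the seasonal cycle.
    buckets = [[] for _ in range(period)]
    for t, (obs, tr) in enumerate(zip(y, trend)):
        if tr is not None:
            buckets[t % period].append(obs - tr)
    raw = [sum(b) / len(b) for b in buckets]
    # Normalize so the seasonal indices sum to zero over one cycle.
    offset = sum(raw) / period
    seasonal = [raw[t % period] - offset for t in range(len(y))]
    remainder = [
        None if tr is None else obs - tr - s
        for obs, tr, s in zip(y, trend, seasonal)
    ]
    return trend, seasonal, remainder


# Usage: a linear trend plus a quarterly pattern and no noise.
pattern = [2, -1, -3, 2]
y = [10 + 0.5 * t + pattern[t % 4] for t in range(16)]
trend, seasonal, remainder = decompose_additive(y, 4)
```

With noise-free input, the recovered seasonal indices equal the pattern [2, -1, -3, 2] and the remainder is zero up to rounding; on real data, the remainder is where noise and any anomalies end up.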

Data granularity strongly influences the analytical approach. Weekly measurements demand different treatment than quarterly aggregates: a yearly seasonal cycle spans about 52 observations in weekly data but only 4 in quarterly data, and aggregation smooths away short-term variation. Understanding the process that generates the data often holds the key to effective forecasting. Contextual awareness separates successful forecasts from misleading projections, making domain knowledge as valuable as statistical expertise in this field.

Selecting Optimal Forecasting Models

Model selection resembles choosing the right tool for a specific task. A simple moving average may suffice for a stable series that fluctuates around a constant level, while ARIMA models become necessary when the series shows autocorrelation or a drifting mean that must be differenced away. The critical differentiator is the data's stationarity: whether its statistical properties, such as mean, variance and autocorrelation, remain constant over time. Seasonality and trend components further complicate the choice, requiring analysts to match model capabilities with data characteristics.
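Two of the ideas in this paragraph can be sketched in a few lines of plain Python: a simple moving-average forecast for a stable series, and the differencing step that ARIMA models (the "I" in the name) use to remove a trend or, at the seasonal lag, a repeating pattern. The function names are illustrative.

```python
def moving_average_forecast(y, window):
    """One-step-ahead forecast: the mean of the last `window` observations."""
    if not 1 <= window <= len(y):
        raise ValueError("window must be between 1 and len(y)")
    return sum(y[-window:]) / window


def difference(y, lag=1):
    """Return y[t] - y[t - lag]. lag=1 removes a linear trend; lag equal to the
    seasonal period removes a stable seasonal pattern."""
    return [y[t] - y[t - lag] for t in range(lag, len(y))]


stable = [10, 12, 11, 13]
trending = [3 + 2 * t for t in range(6)]  # 3, 5, 7, 9, 11, 13
print(moving_average_forecast(stable, 3))    # 12.0
print(moving_average_forecast(trending, 3))  # 11.0, although the next value is 15
print(difference(trending))                  # [2, 2, 2, 2, 2]
```

The moving average falls well behind the trending series, which is why trending data are usually differenced (or modeled with an explicit trend term) first; after one difference, the trending series becomes a constant.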

Exponential smoothing with a trend component (Holt's linear method) handles pronounced trends well, and its Holt-Winters extension adds seasonality; SARIMA (Seasonal ARIMA) models are a strong choice for periodic fluctuations. The evaluation phase is equally important: metrics such as mean absolute error (MAE) and root mean squared error (RMSE), which penalizes large errors more heavily, provide quantitative performance benchmarks. This empirical approach prevents theoretical elegance from overshadowing practical utility in real-world applications.
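A minimal sketch of Holt's linear method, the trend-capable form of exponential smoothing, together with the MAE and RMSE metrics, in plain Python. The initialization (first observation as level, first difference as trend) is one simple convention among several, and the smoothing weights in the example are illustrative; in practice they are estimated from the data.

```python
import math


def holt_forecast(y, alpha, beta, horizon):
    """Holt's linear exponential smoothing.

    alpha (0 < alpha < 1) weights new observations when updating the level;
    beta (0 < beta < 1) weights new level changes when updating the trend.
    """
    level, trend = y[0], y[1] - y[0]  # simple initialization
    for obs in y[1:]:
        prev_level = level
        level = alpha * obs + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    # The h-step forecast extends the last level along the last trend.
    return [level + h * trend for h in range(1, horizon + 1)]


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error; squaring lets large misses dominate."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


history = [5 + 2 * t for t in range(10)]  # 5, 7, ..., 23
print(holt_forecast(history, alpha=0.3, beta=0.1, horizon=3))  # approximately [25, 27, 29]
```

For actual values [1, 2, 3] and forecasts [2, 2, 5], MAE is 1.0 while RMSE is about 1.29, because the single error of 2 weighs more after squaring.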

Precision Parameter Tuning

Model calibration is the fine-tuning stage where statistical theory meets numerical optimization. Maximum likelihood estimation chooses the parameter values under which the observed data are most probable, but the real challenge lies in avoiding overfitting while maintaining predictive power. Adding parameters to a nested model never worsens its in-sample fit, so a model that fits its training data almost perfectly has often learned noise and fails in production. Information criteria such as AIC and BIC, which penalize extra parameters, help strike this balance.
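As a small illustration of likelihood-based estimation, the sketch below fits a first-order autoregressive model, AR(1), to a simulated series. With the mean fixed at the sample mean and Gaussian errors assumed, the conditional maximum likelihood estimate of the coefficient phi reduces to a least-squares regression of each value on its predecessor, so no numerical optimizer is needed. The simulation helper and its parameter values are hypothetical.

```python
import random


def simulate_ar1(phi, n, seed=0, mu=0.0):
    """Hypothetical helper: y[t] - mu = phi * (y[t-1] - mu) + N(0, 1) noise."""
    rng = random.Random(seed)
    y, prev = [], 0.0
    for _ in range(n):
        prev = phi * prev + rng.gauss(0.0, 1.0)
        y.append(mu + prev)
    return y


def fit_ar1(y):
    """Return (mu, phi, sigma2) for an AR(1) model.

    mu is the sample mean; phi is the least-squares slope of z[t] on z[t-1]
    (z = y - mu), which equals the conditional MLE under Gaussian errors;
    sigma2 is the MLE of the innovation variance (divides by n, not n - 1).
    """
    mu = sum(y) / len(y)
    z = [v - mu for v in y]
    num = sum(z[t] * z[t - 1] for t in range(1, len(z)))
    den = sum(z[t - 1] ** 2 for t in range(1, len(z)))
    phi = num / den
    resid = [z[t] - phi * z[t - 1] for t in range(1, len(z))]
    sigma2 = sum(r * r for r in resid) / len(resid)
    return mu, phi, sigma2


series = simulate_ar1(phi=0.7, n=5000, seed=42, mu=20.0)
mu_hat, phi_hat, sigma2_hat = fit_ar1(series)
```

With 5,000 simulated points, the estimates land close to the true values (mean 20, phi 0.7, innovation variance 1). On short series the least-squares estimate of phi is biased toward zero, one more reason to validate on held-out data rather than trust in-sample fit.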

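Diagnostic checks serve as quality control. If a model has captured the systematic structure, its residuals should behave like white noise: centered on zero, with roughly constant variance and no remaining autocorrelation. Residual plots and autocorrelation tests such as the Ljung-Box test check these properties, ensuring parameters capture genuine patterns rather than statistical artifacts. Understanding the limitations of estimation techniques prevents false confidence in model outputs and maintains scientific integrity throughout the forecasting process.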
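One widely used autocorrelation test is the Ljung-Box test. Its statistic can be computed directly from the residual autocorrelations: Q = n(n + 2) times the sum over k = 1..h of r_k^2 / (n - k), where r_k is the lag-k sample autocorrelation and h the number of lags tested. The plain-Python sketch below returns Q only; Q would then be compared with a chi-square critical value whose degrees of freedom are h minus the number of fitted ARMA parameters (for 10 degrees of freedom, the 5% critical value is about 18.31). The function names are illustrative.

```python
def acf(x, max_lag):
    """Sample autocorrelations r_1 .. r_max_lag."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    denom = sum(v * v for v in d)
    return [
        sum(d[t] * d[t - k] for t in range(k, n)) / denom
        for k in range(1, max_lag + 1)
    ]


def ljung_box(residuals, lags):
    """Ljung-Box Q = n (n + 2) * sum_{k=1..lags} r_k^2 / (n - k)."""
    n = len(residuals)
    r = acf(residuals, lags)
    return n * (n + 2) * sum(rk * rk / (n - k) for k, rk in enumerate(r, start=1))


# A residual series that simply alternates is strongly autocorrelated at lag 1.
alternating = [1.0, -1.0] * 50
print(acf(alternating, 1))        # approximately [-0.99]
print(ljung_box(alternating, 1))  # approximately 100.98, far above 3.84 (5% critical value, 1 dof)
```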

Rigorous Performance Assessment

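Model validation separates academic exercises from operational tools. Error metrics quantify predictive accuracy, but real insight comes from understanding where and why models fail. Backtesting against holdout samples provides a reality check, provided the holdout period comes after the training period: shuffling observations at random would let the model peek at the future. Visualization exposes temporal alignment issues that numeric summaries can miss, such as forecasts that merely repeat the previous observation and therefore lag the actual series by one step.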
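A minimal sketch of backtesting with a rolling (expanding-window) forecast origin: the model only ever sees data that precede the period it forecasts, and the origin advances one step at a time. The forecaster interface, a function taking the history and a horizon, is an assumption made for illustration. The naive "repeat the last value" forecaster is included because it is a useful baseline that any serious model should beat.

```python
def rolling_origin_backtest(y, forecaster, initial, horizon=1):
    """Collect forecast errors (actual - predicted) from successive origins.

    At each origin the forecaster sees only y[:origin] and predicts the next
    `horizon` values, which are then compared with the held-out actuals.
    """
    errors = []
    for origin in range(initial, len(y) - horizon + 1):
        history, actual = y[:origin], y[origin : origin + horizon]
        predicted = forecaster(history, horizon)
        errors.extend(a - p for a, p in zip(actual, predicted))
    return errors


def naive_forecaster(history, horizon):
    """Baseline: repeat the last observed value."""
    return [history[-1]] * horizon


sales = [1, 2, 3, 4, 5, 6]
errors = rolling_origin_backtest(sales, naive_forecaster, initial=3, horizon=2)
print(errors)  # [1, 2, 1, 2]
backtest_mae = sum(abs(e) for e in errors) / len(errors)  # 1.5
```

The errors are all positive, showing that the naive forecast lags a trending series; this kind of systematic pattern is easier to spot in a plot of errors over time than in a single summary number.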

Overfitting, where a model memorizes its training data but fails on new inputs, requires constant vigilance. Robust validation frameworks test models across multiple periods and scenarios, for example by repeating the train/test split at several successive forecast origins, to ensure they generalize beyond their training environment. This comprehensive evaluation builds confidence in operational deployments.

Generating Actionable Forecasts

Operational forecasting turns statistical outputs into business intelligence. Prediction intervals quantify uncertainty (a well-calibrated 95% interval should contain the actual value about 95% of the time), while clear visualizations bridge the gap between technical analysis and executive decision-making. Even the most accurate forecasts are worthless if stakeholders cannot interpret them.
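As a concrete example of prediction intervals, the sketch below uses the naive (random walk) forecast, for which the interval has a simple closed form. Assuming one-step errors are independent and normally distributed with mean zero and standard deviation sigma, the h-step forecast error has standard deviation sigma * sqrt(h), so an approximate 95% interval is the last value plus or minus 1.96 * sigma * sqrt(h). Estimating sigma as the root mean square of past one-step changes is one simple choice; the function name is illustrative.

```python
import math


def naive_prediction_intervals(y, horizon, z=1.96):
    """Return (forecast, lower, upper) tuples for h = 1..horizon.

    Assumes a random walk without drift: one-step changes are independent,
    normal, and centered on zero. z = 1.96 gives roughly 95% intervals.
    """
    changes = [y[t] - y[t - 1] for t in range(1, len(y))]
    sigma = math.sqrt(sum(c * c for c in changes) / len(changes))
    last = y[-1]
    intervals = []
    for h in range(1, horizon + 1):
        half_width = z * sigma * math.sqrt(h)  # uncertainty grows with sqrt(h)
        intervals.append((last, last - half_width, last + half_width))
    return intervals


for point, lo, hi in naive_prediction_intervals([10, 12, 10, 12, 10], horizon=4):
    print(f"{point:.1f}  [{lo:.2f}, {hi:.2f}]")
```

The widening intervals show stakeholders that uncertainty grows with the horizon, which is often more useful for planning than the point forecast alone.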

Effective forecast communication requires balancing technical accuracy with accessibility. Highlighting key assumptions and limitations builds trust in model outputs, while scenario analysis helps organizations prepare for multiple potential futures. This translation from statistical outputs to strategic insights represents the ultimate value of time series analysis.

Real-World Forecasting Applications

From financial markets to industrial operations, time series forecasting drives modern decision-making. Stock traders analyze price movements, manufacturers optimize production schedules, and economists project GDP trends, all relying on time series methods. The unifying thread across these applications is the transformation of historical patterns into insights about the future.

Domain-specific challenges require tailored solutions. Financial models must account for market psychology, while industrial systems grapple with equipment degradation patterns. Successful implementations blend statistical rigor with deep domain expertise, creating forecasting systems that reflect real-world complexities rather than idealized assumptions.

Anomaly Detection and Failure Prediction Systems

Advanced Anomaly Detection Techniques

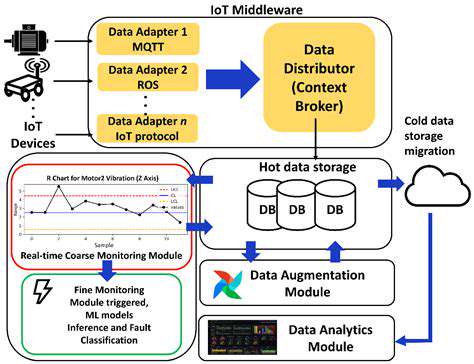

Modern anomaly detection goes beyond simple threshold alarms. Multivariate analysis reveals failure signatures that single metrics can miss: a motor temperature that is normal at full load may be anomalous at idle. Adaptive algorithms learn how normal behavior evolves over time, and the most effective systems combine several detection methods into a robust early warning network.
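The adaptive part of this idea can be sketched for a single metric with an exponentially weighted moving average (EWMA): the detector keeps running estimates of the mean and variance that slowly track changes in normal behavior, and flags readings that fall too many standard deviations away. This is a univariate illustration, not a multivariate method; the class name and default settings (alpha, threshold, warm-up length) are hypothetical and would need tuning per signal.

```python
import math


class EwmaDetector:
    """Flags readings far from an exponentially weighted running mean."""

    def __init__(self, alpha=0.1, threshold=4.0, warmup=10):
        self.alpha = alpha          # how quickly "normal" adapts
        self.threshold = threshold  # alert distance in running standard deviations
        self.warmup = warmup        # readings to absorb before alerting
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, x):
        """Return True if x looks anomalous, then update the baseline."""
        if self.mean is None:
            self.mean, self.count = x, 1
            return False
        std = math.sqrt(self.var)
        is_anomaly = (
            self.count >= self.warmup
            and std > 0
            and abs(x - self.mean) > self.threshold * std
        )
        if not is_anomaly:
            # Incremental exponentially weighted mean and variance.
            diff = x - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
            self.count += 1
        return is_anomaly


detector = EwmaDetector()
readings = [10.0, 11.0] * 25 + [30.0, 10.0]
flags = [detector.update(r) for r in readings]
print([i for i, f in enumerate(flags) if f])  # [50]
```

Flagged readings are deliberately kept out of the baseline so that a fault does not immediately become the new normal. The flip side is that a genuine, permanent change in operating level would keep triggering alerts, so real deployments need an explicit re-baselining rule.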

Time series decomposition separates a signal into trend, seasonal and residual components, exposing anomalies that seasonal swings would otherwise mask: a reading that is unremarkable at the daily peak can be highly unusual at night. Machine learning approaches continuously refine detection criteria, adapting to new operational patterns while remaining sensitive to genuine faults. This balance between stability and adaptability defines effective monitoring systems.
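A minimal sketch of decomposition-based detection: subtract a robust seasonal profile (the median at each position in the cycle), then flag residuals whose modified z-score, 0.6745 times the deviation from the median divided by the median absolute deviation (MAD), exceeds 3.5, a commonly used cutoff. The sketch assumes the series has no trend (detrend it first otherwise); the function name and example data are illustrative.

```python
from statistics import median


def seasonal_anomalies(y, period, threshold=3.5):
    """Return indices whose deseasonalized value is a robust outlier."""
    # Seasonal profile: median value at each position in the cycle.
    profile = [median(y[p::period]) for p in range(period)]
    resid = [v - profile[t % period] for t, v in enumerate(y)]
    center = median(resid)
    mad = median(abs(r - center) for r in resid)
    if mad == 0:
        # Degenerate case: at least half the residuals sit exactly at the
        # median, so any deviation at all is flagged.
        return [t for t, r in enumerate(resid) if r != center]
    return [
        t for t, r in enumerate(resid)
        if abs(0.6745 * (r - center) / mad) > threshold
    ]


# Four readings per day (10, 20, 30, 20) with small jitter over six days.
base = [10, 20, 30, 20]
y = [base[t % 4] + ((7 * t) % 5 - 2) * 0.1 for t in range(24)]
y[13] += 5  # fault: 24.9 at a time of day that normally reads about 20
print(seasonal_anomalies(y, 4))  # [13]
```

The faulty reading of 24.9 is below the normal daily peak of about 30, so a fixed global threshold would miss it; relative to its own time of day, it stands out clearly.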

Predictive Failure Modeling

Failure prediction sits where statistical modeling meets mechanical engineering. Prognostic algorithms turn sensor readings into estimates of remaining useful life (RUL), the expected operating time before an asset reaches a failure threshold, so maintenance can be scheduled shortly before it is needed rather than on a fixed calendar. Advanced systems combine physics-based models of degradation with data-driven approaches; such hybrids can improve accuracy, particularly when historical failure data are scarce.
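A deliberately simple, purely data-driven sketch of a remaining-useful-life estimate: fit a straight line to a health indicator that rises as the asset degrades (vibration RMS, for example) and extrapolate to the time it crosses a failure threshold. The linear-degradation assumption, the threshold, the function name and the readings are all illustrative. Practical prognostic models usually report uncertainty around the estimate, and this sketch has no physics-based component.

```python
def estimate_rul(times, health, failure_threshold):
    """Remaining useful life by linear extrapolation of a degradation trend.

    Assumes the health indicator increases toward failure. Returns None when
    the fitted trend is flat or improving (no crossing is predicted), and 0.0
    when the fitted line has already crossed the threshold.
    """
    n = len(times)
    mean_t, mean_h = sum(times) / n, sum(health) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (h - mean_h) for t, h in zip(times, health))
    slope = sxy / sxx  # ordinary least-squares slope
    intercept = mean_h - slope * mean_t
    if slope <= 0:
        return None
    t_fail = (failure_threshold - intercept) / slope
    return max(0.0, t_fail - times[-1])


# Hypothetical vibration RMS (mm/s) measured every 10 operating hours.
hours = [0, 10, 20, 30]
rms = [1.0, 1.5, 2.0, 2.5]
print(estimate_rul(hours, rms, failure_threshold=4.0))  # about 30 hours
```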

Feature engineering elevates raw data into predictive signals. Vibration harmonics, thermal gradients, and power consumption patterns all contribute to failure signatures. Continuous model retraining ensures predictions reflect current equipment conditions, accounting for wear patterns and environmental changes. This living approach to predictive analytics maximizes equipment availability while minimizing unnecessary maintenance.
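The sketch below computes a few classic condition-monitoring features from one window of vibration samples: RMS (overall energy), peak, crest factor (peak divided by RMS) and kurtosis, which rises when a signal becomes impulsive and can indicate developing faults such as bearing damage. The feature set and function name are illustrative; extracting harmonics would additionally require a frequency transform such as an FFT, which is not shown.

```python
import math


def vibration_features(window):
    """Time-domain features for one window of vibration samples."""
    n = len(window)
    mean = sum(window) / n
    rms = math.sqrt(sum(x * x for x in window) / n)
    peak = max(abs(x) for x in window)
    centered = [x - mean for x in window]
    m2 = sum(c * c for c in centered) / n
    m4 = sum(c ** 4 for c in centered) / n
    return {
        "rms": rms,
        "peak": peak,
        "crest_factor": peak / rms if rms else float("nan"),
        # Non-excess kurtosis: about 3 for Gaussian noise, 1.5 for a pure sine.
        "kurtosis": m4 / (m2 * m2) if m2 else float("nan"),
    }


# One full cycle of a pure sine (smooth) versus a single sharp impulse.
sine = [math.sin(2 * math.pi * k / 16) for k in range(16)]
impulse = [0.0] * 15 + [1.0]
print(vibration_features(sine))     # crest factor ~1.414, kurtosis ~1.5
print(vibration_features(impulse))  # crest factor 4.0, kurtosis ~14.07
```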

Operationalizing Predictive Maintenance

Strategic Implementation Framework

Successful deployments require more than accurate models. Sensor network design determines data quality before analysis even begins, while integration with existing maintenance systems ensures practical impact. The most effective implementations balance technical sophistication with organizational readiness.

Algorithm selection must consider computational constraints alongside accuracy requirements. Edge computing enables real-time analysis where cloud latency is prohibitive, while hybrid architectures, for example lightweight scoring on edge devices with model training in the cloud, balance resource use. This infrastructure-aware approach keeps theoretical capabilities from outstripping what the deployment can actually support.

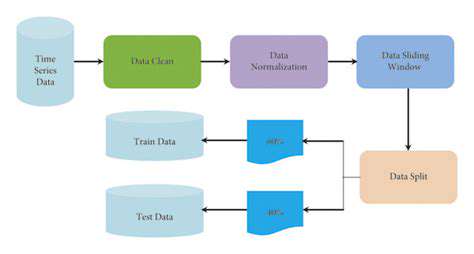

Data Pipeline Optimization

Industrial data rarely arrives analysis-ready. Imputation techniques handle missing values while preserving information content, and adaptive filtering maintains signal fidelity in noisy industrial environments. The data preparation pipeline often determines final model performance more than algorithm selection does.
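Two basic building blocks of such a pipeline are sketched below: linear interpolation for short gaps (a simple stand-in for more sophisticated imputation) and a sliding median filter, which removes isolated spikes while keeping genuine step changes sharp. Missing readings are represented as None, which is an assumption about the input format; the function names are illustrative.

```python
from bisect import bisect_left
from statistics import median


def interpolate_gaps(y):
    """Fill None entries by linear interpolation between the nearest observed
    neighbours; gaps at either end take the nearest observed value."""
    known = [i for i, v in enumerate(y) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    out = list(y)
    for i, v in enumerate(y):
        if v is not None:
            continue
        pos = bisect_left(known, i)  # index of the first observed point after i
        if pos == 0:
            out[i] = y[known[0]]
        elif pos == len(known):
            out[i] = y[known[-1]]
        else:
            left, right = known[pos - 1], known[pos]
            weight = (i - left) / (right - left)
            out[i] = y[left] + weight * (y[right] - y[left])
    return out


def median_filter(y, width=3):
    """Sliding median with an odd window; the window is truncated at the ends."""
    half = width // 2
    return [median(y[max(0, i - half) : i + half + 1]) for i in range(len(y))]


print(interpolate_gaps([None, 1.0, None, 3.0, None]))  # [1.0, 1.0, 2.0, 3.0, 3.0]
print(median_filter([1, 1, 9, 1, 1, 5, 5, 5]))         # spike removed, step kept
```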

Metadata management transforms isolated measurements into contextualized insights. Equipment identifiers, operational modes, and environmental conditions all enrich raw sensor data, enabling more nuanced analysis. This contextual layer turns generic algorithms into specialized diagnostic tools.

Model Lifecycle Management

Predictive models degrade as equipment and operations evolve. Continuous performance monitoring triggers timely retraining, while concept drift detection identifies when fundamental relationships change. This proactive approach maintains model relevance throughout asset lifecycles.
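One classic way to detect that a model has started to degrade is to run a change detector on the stream of its absolute prediction errors. The sketch below uses the Page-Hinkley test in its common incremental form: it accumulates how far each error exceeds the running mean (minus a small tolerance delta) and raises an alarm when that cumulative sum rises more than a threshold above its historical minimum. The delta and threshold values are illustrative and must be tuned; Page-Hinkley is one option among several drift detectors.

```python
class PageHinkley:
    """Detects an upward shift in the mean of a stream (e.g. absolute errors)."""

    def __init__(self, delta=0.005, threshold=5.0):
        self.delta = delta          # tolerated fluctuation per observation
        self.threshold = threshold  # alarm level (often written lambda)
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0
        self.min_cum = 0.0

    def update(self, x):
        """Add one observation; return True if a drift alarm is raised."""
        self.n += 1
        self.mean += (x - self.mean) / self.n      # running mean
        self.cum += x - self.mean - self.delta     # cumulative deviation
        self.min_cum = min(self.min_cum, self.cum)
        return self.cum - self.min_cum > self.threshold


# Absolute errors stay at 0.1 for 100 steps, then the process changes.
errors = [0.1] * 100 + [1.0] * 50
detector = PageHinkley()
alarms = [i for i, e in enumerate(errors) if detector.update(e)]
print(alarms[0])  # 105: flagged on the sixth observation after the change
```

After an alarm, the detector would typically be reset and the model reviewed or retrained; raising the threshold trades slower detection for fewer false alarms.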

Version control and experiment tracking enable systematic improvement. Model governance frameworks ensure changes undergo proper validation before affecting maintenance decisions. This disciplined approach prevents regression while enabling innovation.

Performance Monitoring Systems

Operational dashboards must balance detail with clarity. Condition indicators should trigger alerts only when actionable, avoiding alarm fatigue while ensuring critical issues receive attention. The most effective visualizations guide operators from symptoms to root causes.
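One simple way to make alerts actionable rather than noisy is to combine a persistence requirement with hysteresis: raise an alert only after several consecutive readings exceed an upper limit, and clear it only once the signal drops below a lower limit. The sketch below is illustrative; the thresholds, the number of consecutive readings and the temperature values are hypothetical.

```python
def debounced_alerts(values, on_threshold, off_threshold, min_consecutive=3):
    """Return (index, "raise" | "clear") events.

    An alert is raised after `min_consecutive` readings above on_threshold and
    cleared only when a reading falls below off_threshold (hysteresis).
    """
    events, active, run = [], False, 0
    for i, v in enumerate(values):
        if not active:
            run = run + 1 if v > on_threshold else 0
            if run >= min_consecutive:
                active, run = True, 0
                events.append((i, "raise"))
        elif v < off_threshold:
            active = False
            events.append((i, "clear"))
    return events


temps = [71, 90, 71, 90, 91, 92, 85, 80, 60, 90]
print(debounced_alerts(temps, on_threshold=85, off_threshold=70, min_consecutive=3))
# [(5, 'raise'), (8, 'clear')]
```

The isolated spike at index 1 never raises an alert, and the readings of 85 and 80 that follow the alert keep it active instead of making a single-threshold alarm flicker on and off.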

Feedback loops close the improvement cycle. Maintenance outcomes should validate predictions and refine future models, creating a virtuous cycle of increasing accuracy. This empirical approach grounds predictive systems in operational reality.
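Closing the loop starts with scoring past predictions against what maintenance crews actually found. A minimal sketch, assuming each prediction and each confirmed failure is identified by an asset ID within the same review period; this is a simplification, since real evaluations also check that the warning came early enough to act on. The function name and asset IDs are hypothetical.

```python
def alert_quality(predicted_failures, confirmed_failures):
    """Precision and recall of failure predictions against maintenance records."""
    predicted, confirmed = set(predicted_failures), set(confirmed_failures)
    hits = predicted & confirmed
    return {
        # Share of alerts that corresponded to a real failure.
        "precision": len(hits) / len(predicted) if predicted else 0.0,
        # Share of real failures that were predicted.
        "recall": len(hits) / len(confirmed) if confirmed else 0.0,
        "false_alarms": sorted(predicted - confirmed),
        "missed": sorted(confirmed - predicted),
    }


report = alert_quality(
    predicted_failures=["pump-01", "pump-02", "fan-07"],
    confirmed_failures=["pump-02", "fan-07", "motor-03"],
)
print(report["false_alarms"], report["missed"])  # ['pump-01'] ['motor-03']
```

False alarms point to thresholds or features that need tightening, and missed failures become new labelled examples for the next retraining cycle.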

Enterprise Integration Strategies

Predictive insights must flow seamlessly into work management systems. API integrations prevent data silos while maintaining system security, letting maintenance teams act on analytics without switching contexts. This frictionless workflow encourages adoption and maximizes the value of predictive investments.

Change management ensures organizational alignment. Training programs bridge the gap between data science and field operations, fostering collaborative problem-solving. This cultural integration often determines long-term success more than technical factors.