Quantum Superposition

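Quantum superposition is a fundamental concept in quantum mechanics: a quantum system can exist in a weighted combination of several basis states at once, whereas a classical system is always in exactly one definite state. A coin spinning in the air is a common but loose analogy. The coin really is in some definite orientation at every instant and we simply do not know which, while a quantum particle in superposition has no definite position or energy level until it is measured. Each state in the combination carries a complex amplitude, and a measurement returns a single outcome with probability equal to the squared magnitude of that amplitude.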

Understanding superposition is crucial to understanding how information is encoded in quantum systems. It is a key ingredient of quantum computing, enabling computations that are fundamentally different from classical ones. The power does not come from trying every possibility in parallel and reading off all the answers, since a measurement returns only one outcome. It comes from algorithms that use interference between amplitudes to raise the probability of the correct answers.
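To make the amplitude picture concrete, here is a minimal sketch of a single qubit stored as a list of two amplitudes, with outcome probabilities computed as squared magnitudes. The function names `measurement_probabilities` and `measure` are illustrative choices, not part of any library.

```python
import math
import random


def measurement_probabilities(amplitudes):
    """Return the probability of each outcome as |amplitude|^2."""
    probs = [abs(a) ** 2 for a in amplitudes]
    if not math.isclose(sum(probs), 1.0, rel_tol=1e-9):
        raise ValueError("state must be normalized")
    return probs


def measure(amplitudes, rng=random):
    """Sample one outcome index; a measurement never returns the amplitudes."""
    probs = measurement_probabilities(amplitudes)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Equal superposition (|0> + |1>) / sqrt(2)
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]
probs = measurement_probabilities(plus)  # both outcomes equally likely

# A basis state has only one possible outcome
always_zero = measure([1.0, 0.0])
```

Calling `measure(plus)` repeatedly gives 0 about half the time and 1 the other half, but no single call reveals that the state was a superposition.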

Entanglement

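Quantum entanglement is a phenomenon in which two or more quantum systems become linked so that the state of the whole cannot be described as a set of independent states of its parts, regardless of the distance separating them. If you measure a property of one particle in a maximally entangled pair, you can immediately predict the result of the corresponding measurement on the other, even if they are light-years apart. Each individual outcome is still random, so the correlation cannot be used to send information faster than light. This correlation nevertheless has profound implications for quantum information processing.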

Entanglement is a powerful resource for quantum communication and computation. It produces correlations stronger than any classical system can reproduce, enabling tasks such as quantum teleportation and superdense coding that have no classical equivalent. These correlations are non-local in the sense that they violate Bell inequalities, which no theory based on local hidden variables can do.
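The correlations described above can be seen in a small sketch of the Bell state (|00> + |11>) / sqrt(2). The helper names `joint_probabilities` and `marginal` are assumptions made for this illustration.

```python
import math

# Two-qubit amplitudes in the order |00>, |01>, |10>, |11>
bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def joint_probabilities(state):
    """Map each two-bit outcome string to its probability."""
    return {format(i, "02b"): abs(a) ** 2 for i, a in enumerate(state)}


def marginal(joint, qubit):
    """Probability of reading 0 or 1 on one qubit, ignoring the other."""
    out = {"0": 0.0, "1": 0.0}
    for bits, p in joint.items():
        out[bits[qubit]] += p
    return out


joint = joint_probabilities(bell)  # only "00" and "11" have nonzero probability
first = marginal(joint, 0)         # the first qubit alone looks like a fair coin
```

The joint outcomes always agree, yet each qubit on its own is uniformly random, which is why the correlation carries no usable signal by itself.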

Qubit Encoding

Unlike a classical bit, which is either 0 or 1, a qubit can be in a superposition of 0 and 1. Describing the state of n qubits takes 2^n complex amplitudes, so the state space grows exponentially with the number of qubits. This does not mean that n qubits store 2^n bits of retrievable data: measuring n qubits yields at most n classical bits. The advantage lies in being able to manipulate that large state space during a computation.

The ability to hold and manipulate superpositions is the foundation of quantum computing's potential for large speedups, exponential for some problems, over the best known classical algorithms. Through superposition and entanglement, qubits can be manipulated to perform certain calculations that are believed to be intractable for even the most powerful classical computers.
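The growth of the state description with qubit count can be sketched by building multi-qubit states with a tensor product. The names `kron` and `uniform_superposition` are illustrative, and the list-based representation is an assumption chosen for brevity.

```python
import math


def kron(a, b):
    """Tensor product of two state vectors given as lists of amplitudes."""
    return [x * y for x in a for y in b]


def uniform_superposition(n_qubits):
    """Build the n-qubit state in which every basis state is equally weighted."""
    plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]
    state = [1.0]
    for _ in range(n_qubits):
        state = kron(state, plus)
    return state


state = uniform_superposition(3)
n_amplitudes = len(state)  # 2**3 amplitudes describe 3 qubits
```

Each added qubit doubles the number of amplitudes a classical simulation must track, which is one reason simulating large quantum systems classically becomes expensive.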

Quantum Gates

Quantum gates are analogous to logic gates in classical computing, but they act on qubits according to quantum mechanical principles. A gate is a reversible, unitary operation, such as a rotation of a single qubit's state or a controlled operation across two qubits. Measurement is a separate, irreversible step that reads out a classical result. The manipulation of qubits through quantum gates is the core of quantum computation.

Quantum gates are the building blocks of quantum algorithms. Applied in sequence as a circuit, they control how a quantum state evolves. Each gate has a specific effect: the Hadamard gate turns a basis state into an equal superposition, the Pauli-X gate swaps 0 and 1, and the CNOT gate flips a target qubit when its control qubit is 1, which can entangle the two.
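Gates can be written as small matrices that multiply a state vector. The sketch below uses the standard Hadamard and CNOT matrices; the `apply` helper and the variable names are assumptions for illustration.

```python
import math


def apply(gate, state):
    """Multiply a gate matrix (list of rows) by a state vector."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]


s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]      # Hadamard
CNOT = [                   # control = first qubit, target = second
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

zero = [1.0, 0.0]
plus = apply(H, zero)      # equal superposition of 0 and 1
back = apply(H, plus)      # applying H twice undoes it (reversibility)

# Hadamard on the first qubit, then CNOT, turns |00> into a Bell state
two_qubits = [a * b for a in plus for b in zero]
bell = apply(CNOT, two_qubits)
```

Applying `H` twice returns the original state, which illustrates that gates are reversible, unlike measurement.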

Quantum Algorithms

Quantum algorithms leverage the unique properties of quantum systems to solve certain problems faster than any known classical method. Shor's algorithm factors large integers in polynomial time, a superpolynomial speedup over the best known classical algorithms, and Grover's algorithm searches an unstructured set of N items in roughly √N steps, a quadratic speedup. Both exploit superposition and interference to concentrate probability on correct answers. Quantum algorithms have the potential to affect fields ranging from cryptography to drug discovery.

For the problems they target, these algorithms change what is computationally feasible rather than merely making existing methods faster. They offer the potential to solve problems that are currently beyond the reach of classical computing, opening new possibilities in science and technology.
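As a rough illustration of how interference concentrates probability, the sketch below simulates Grover's algorithm classically on a list of amplitudes. It assumes a single marked item and uses the usual iteration count of about (π/4)·√N; the function names are illustrative, and a real quantum device would apply these steps as gates rather than list operations.

```python
import math


def grover_iteration(state, marked):
    """One Grover step: flip the marked amplitude, then reflect about the mean."""
    state = [-a if i == marked else a for i, a in enumerate(state)]
    mean = sum(state) / len(state)
    return [2 * mean - a for a in state]


def grover_search(n_items, marked):
    """Return outcome probabilities after about (pi/4) * sqrt(N) iterations."""
    state = [1 / math.sqrt(n_items)] * n_items
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        state = grover_iteration(state, marked)
    return [abs(a) ** 2 for a in state]


probs = grover_search(4, marked=2)
most_likely = max(range(len(probs)), key=probs.__getitem__)
```

With four items a single iteration is enough to move essentially all of the probability onto the marked index; for larger lists the success probability is high but generally not exactly one.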

The Path Forward: Building Fault-Tolerant Quantum Systems

Navigating the Challenges of Fault Tolerance

Building fault-tolerant systems is a complex undertaking, requiring a deep understanding of potential failure points and proactive strategies for mitigating them. Careful consideration must be given to the entire system lifecycle, from design and development to deployment and ongoing maintenance. This necessitates a multifaceted approach that encompasses not only the technical aspects but also the organizational and operational processes that support the system.

The challenge lies not just in identifying potential failures, but also in anticipating the cascading effects of those failures. A single point of failure can have ripple effects throughout the entire system, leading to significant disruptions and potentially catastrophic consequences. Therefore, a robust fault-tolerance strategy must encompass measures to prevent, isolate, and recover from these cascading failures. Developing a clear understanding of the operational environment and potential threats is crucial for effective fault tolerance design.

Implementing Robust Fault Tolerance Mechanisms

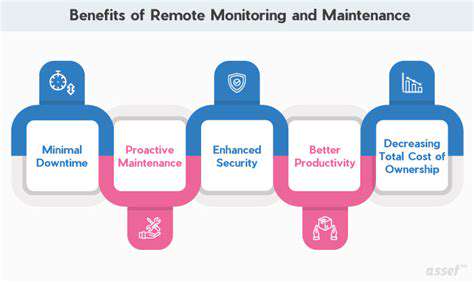

Implementing robust fault tolerance mechanisms requires a combination of redundancy, failover strategies, and monitoring capabilities. Redundancy, in its various forms, is a cornerstone of fault tolerance, ensuring that critical components have backups available to take over in the event of failure. This approach can range from simple replication to complex distributed architectures. Failover strategies automatically switch to backup components when primary ones fail, minimizing downtime and ensuring continuous operation. Comprehensive monitoring plays a vital role in detecting and diagnosing issues before they escalate into full-blown failures.
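Redundancy by simple replication can be sketched with a three-copy repetition code. The example below is classical and purely illustrative, and all function names are assumptions rather than anything from the text. The quantum bit-flip code relies on the same two parity checks, but measures them without reading the encoded data directly.

```python
def encode(bit):
    """Store one logical bit as three redundant copies."""
    return [bit, bit, bit]


def syndrome(block):
    """Parity checks between neighbours; (0, 0) means no error detected."""
    return (block[0] ^ block[1], block[1] ^ block[2])


def correct(block):
    """Use the syndrome to locate and undo a single flipped copy."""
    flip_at = {(1, 0): 0, (1, 1): 1, (0, 1): 2}.get(syndrome(block))
    fixed = list(block)
    if flip_at is not None:
        fixed[flip_at] ^= 1
    return fixed


def decode(block):
    """Majority vote over the three copies."""
    return int(sum(block) >= 2)


# A single flipped copy is detected and repaired
damaged = encode(1)
damaged[0] ^= 1
recovered = decode(correct(damaged))
```

This scheme tolerates any single flip per block; two flips in the same block are miscorrected, which is why practical designs add more redundancy or repeat the checks.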

Effective monitoring tools provide real-time insights into system health, allowing for proactive interventions and minimizing potential disruptions. Crucially, these systems should facilitate the analysis of historical performance data, enabling the identification of recurring patterns and the development of preventive measures. By proactively identifying and addressing potential bottlenecks and vulnerabilities, the likelihood of system failures can be significantly reduced.

Ensuring Continuous System Availability and Performance

Achieving continuous system availability and performance necessitates a commitment to ongoing maintenance, testing, and optimization. Proactive maintenance schedules, including regular system checks and updates, help prevent minor issues from escalating into major failures. Rigorous testing, encompassing both unit and integration tests, ensures that fault tolerance mechanisms function as expected under various conditions. Regular performance analysis and optimization, based on real-world usage data, are essential to maintain high levels of system performance and responsiveness, even under heavy loads.

Investing in a comprehensive system monitoring strategy allows for a deep understanding of system health and performance trends, enabling proactive adjustments to optimize availability and performance. Constant vigilance and adaptation are essential to ensure that the system remains robust and resilient in the face of evolving demands and potential threats. The focus should always be on minimizing downtime and maximizing operational efficiency.