Customized Training Protocols

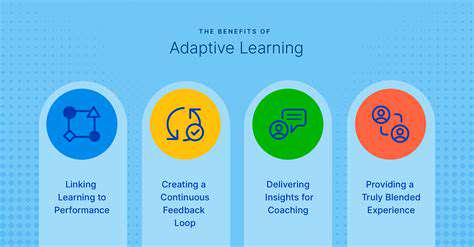

Modern AI neurofeedback systems move beyond one-size-fits-all protocols. By decoding an individual's neural patterns, these platforms build training regimens aligned with that user's specific objectives. Targeting the protocol to the user's own neurological profile is intended to make each session more relevant and, in turn, more effective.

Rather than relying on static protocols, the system continuously refines training parameters based on live neural data. This dynamic optimization creates a feedback loop that can strengthen the training's impact, with the aim of producing more durable neurological changes than fixed-protocol methods.

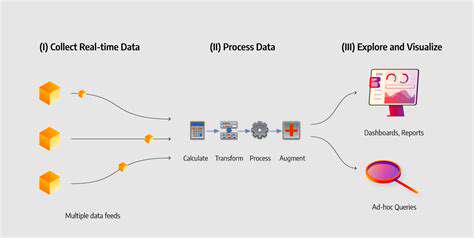

Instantaneous Neural Analysis

The AI's capacity for real-time brainwave interpretation marks a significant change in how neurofeedback is delivered. Algorithms monitor neural activity continuously, detecting patterns and anomalies as they emerge. This immediate feedback lets users see the relationship between their mental states and their brain activity as it happens, fostering deeper self-awareness.
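As a rough illustration of what "interpreting brainwaves in real time" can mean at the signal level, the sketch below computes the share of spectral power that falls in the alpha band for one short window of samples. It is a minimal example under stated assumptions, not the method of any particular platform: the function names, the 8-12 Hz alpha range, the 1-40 Hz reference range, and the synthetic signal are all chosen here for illustration, and a naive DFT is used only to keep the example dependency-free.

```python
import cmath
import math

def band_power(samples, sample_rate, low_hz, high_hz):
    """Sum DFT power over the bins whose frequency falls in [low_hz, high_hz]."""
    n = len(samples)
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n  # frequency of DFT bin k in Hz
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(samples))
            power += abs(coeff) ** 2
    return power

def relative_alpha(samples, sample_rate):
    """Fraction of 1-40 Hz power that lies in the assumed 8-12 Hz alpha band."""
    alpha = band_power(samples, sample_rate, 8, 12)
    total = band_power(samples, sample_rate, 1, 40)
    return alpha / total if total > 0 else 0.0

# Synthetic one-second window (an assumption, not real EEG):
# a strong 10 Hz component plus a weaker 20 Hz component.
SAMPLE_RATE = 128
window = [
    math.sin(2 * math.pi * 10 * t / SAMPLE_RATE)
    + 0.2 * math.sin(2 * math.pi * 20 * t / SAMPLE_RATE)
    for t in range(SAMPLE_RATE)
]
alpha_share = relative_alpha(window, SAMPLE_RATE)
print(f"alpha share of 1-40 Hz power: {alpha_share:.3f}")
```

A live system would repeat this on a sliding window and present the resulting value back to the user; a production implementation would typically use an FFT library and artifact rejection rather than this naive loop.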

This interactive process transforms users from passive recipients to active participants in their neurological development, cultivating an intimate understanding of their mind-brain connection that persists beyond training sessions.

Dynamic Challenge Adjustment

Intelligent neurofeedback platforms automatically calibrate exercise difficulty based on user performance. This adaptive mechanism maintains optimal engagement levels - challenging enough to promote growth but never frustrating enough to discourage continued practice. Such precision in challenge scaling proves crucial for long-term adherence and consistent progress.

The system learns each user's response patterns and adjusts task complexity accordingly. This keeps users in their optimal learning zone, maximizing therapeutic benefit while minimizing discouragement.
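One simple way to picture this kind of calibration is a controller that raises the difficulty when the recent success rate is high and lowers it when the rate is low. The sketch below is a hypothetical illustration: the class name, the 60-80% target band, the step size, and the window length are assumptions made for the example, not parameters taken from any real neurofeedback product.

```python
from collections import deque

class DifficultyController:
    """Keep the recent success rate inside a target band by nudging difficulty."""

    def __init__(self, level=0.5, target_low=0.6, target_high=0.8,
                 step=0.05, window=10):
        self.level = level              # difficulty in [0.0, 1.0]
        self.target_low = target_low    # below this rate, make the task easier
        self.target_high = target_high  # above this rate, make the task harder
        self.step = step
        self.results = deque(maxlen=window)

    def record(self, success):
        """Store one trial outcome and return the (possibly updated) level."""
        self.results.append(bool(success))
        if len(self.results) < self.results.maxlen:
            return self.level  # not enough evidence yet
        rate = sum(self.results) / len(self.results)
        if rate > self.target_high:
            self.level = min(1.0, self.level + self.step)
            self.results.clear()  # start a fresh window at the new level
        elif rate < self.target_low:
            self.level = max(0.0, self.level - self.step)
            self.results.clear()
        return self.level

controller = DifficultyController()
for _ in range(10):
    controller.record(True)       # ten successes in a row: task is too easy
level_after_successes = controller.level
for _ in range(10):
    controller.record(False)      # ten failures in a row: task is too hard
level_after_failures = controller.level
```

Clearing the window after each adjustment is a deliberate choice in this sketch: it prevents outcomes recorded at the old difficulty from triggering a second adjustment immediately.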

Enhanced Training Efficiency

AI automation streamlines the neurofeedback process considerably. By handling data collection, analysis, and feedback delivery automatically, the technology frees both users and therapists to focus on the therapeutic process itself. This can reduce the time required to achieve meaningful results, making neurofeedback more accessible to time-constrained individuals.

The system's rapid analysis enables protocol adjustments within a session, keeping the intervention focused on the user's evolving needs. This reduces time spent on ineffective approaches and makes better use of each participant's training time.

Synergy with Complementary Therapies

AI-enhanced neurofeedback naturally complements other therapeutic modalities. The detailed neural insights it provides can inform adjustments to cognitive behavioral therapy, mindfulness training, and other interventions. This integrative approach represents the future of personalized mental health care, where technological and traditional methods work synergistically.

For instance, a therapist might use AI-generated neural profiles to refine CBT strategies for emotional regulation, creating a truly customized treatment plan. Such multidimensional approaches promise more comprehensive and lasting therapeutic outcomes.

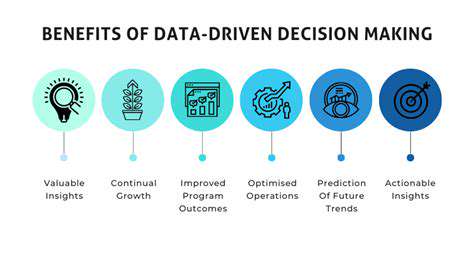

Advanced Data Analysis and Predictive Modeling

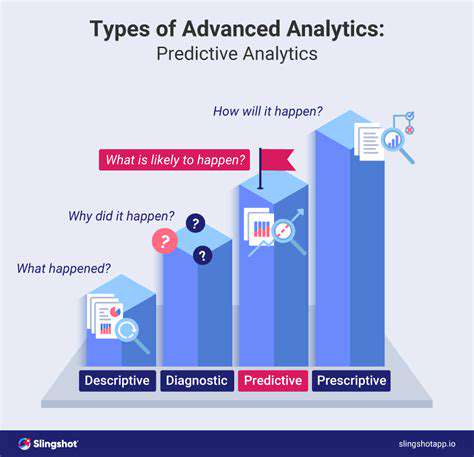

Cutting-Edge Predictive Techniques

Predictive modeling uses algorithms to forecast outcomes based on historical patterns. Supervised models train on datasets containing both input variables and target outcomes, learning the relationships between them. Methodologies such as regression and classification, along with unsupervised techniques such as clustering, are chosen according to the specific analytical challenge. Understanding the assumptions and limits of each technique is essential for constructing reliable predictive frameworks.

Model selection carries considerable weight, as inappropriate choices tend to yield flawed predictions and unreliable insights. The decision must account for data characteristics, the precision required, and available computational resources. Factors such as outliers, missing values, and whether the relationships in the data are linear strongly influence performance and demand careful attention during preprocessing.

Data Refinement Process

Data preparation forms the critical foundation of advanced analytics. This meticulous process involves cleansing, transforming, and structuring data for modeling applications. Key steps include handling incomplete records, addressing statistical anomalies, and converting categorical data into numerical formats. These preparatory measures ensure the integrity and reliability of the analytical foundation.
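Two of the steps named above, handling incomplete records and converting categorical data into numerical form, can be sketched in a few lines. This is a minimal illustration, assuming median imputation and one-hot encoding as the chosen strategies; the function names and sample values are invented for the example, and other strategies may suit a given dataset better.

```python
from statistics import median

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def one_hot(categories):
    """Encode each category as a 0/1 row; returns (rows, ordered level names)."""
    levels = sorted(set(categories))
    rows = [[1 if c == level else 0 for level in levels] for c in categories]
    return rows, levels

# Hypothetical records: one age is missing, and state is categorical.
ages = [34, None, 29, 41]
states = ["rest", "task", "rest", "task"]

clean_ages = impute_median(ages)
encoded_states, state_levels = one_hot(states)
print(clean_ages)       # missing age filled with the median of 34, 29, 41
print(state_levels, encoded_states)
```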

Feature engineering represents an artful dimension of data science, creating novel variables from existing ones to enhance model performance. This creative process, grounded in domain expertise, often reveals hidden relationships that significantly boost predictive accuracy.

Model Assessment Protocols

Rigorous evaluation safeguards predictive model quality. Performance metrics such as accuracy, precision, recall, and F1 score quantify a classification model's predictive performance. Interpreting these metrics in context is vital: on an imbalanced dataset, for example, high accuracy can coexist with poor recall on the minority class.
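The four metrics mentioned here all derive from the counts of true and false positives and negatives. The sketch below computes them for a binary classifier; the function name and the example labels are assumptions made for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels: 3 true positives, 1 false positive,
# 1 false negative, 3 true negatives.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision answers "of the cases flagged positive, how many were right", while recall answers "of the actual positives, how many were found"; F1 is their harmonic mean.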

Validation techniques such as cross-validation assess generalization capacity by testing models against data partitions held out from training. This step helps detect overfitting - when models memorize training data rather than learning generalizable patterns - before a model is deployed. Proper validation gives more trustworthy estimates of how a model will perform in real-world applications.
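The idea of k-fold cross-validation can be sketched without any libraries: split the data into k folds, fit on k-1 of them, score on the held-out fold, and average. The example below does this for a simple least-squares line. The helper names and the synthetic data are assumptions for illustration, and the folds are contiguous for readability; in practice the data would usually be shuffled first.

```python
def k_fold_indices(n, k):
    """Yield (train_indices, test_indices) for k contiguous folds over n items."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x if var_x else 0.0
    return slope, mean_y - slope * mean_x

def cross_validated_mse(xs, ys, k=5):
    """Average held-out mean squared error across k folds."""
    fold_errors = []
    for train, test in k_fold_indices(len(xs), k):
        slope, intercept = fit_line([xs[i] for i in train],
                                    [ys[i] for i in train])
        mse = sum((ys[i] - (slope * xs[i] + intercept)) ** 2
                  for i in test) / len(test)
        fold_errors.append(mse)
    return sum(fold_errors) / len(fold_errors)

# Synthetic data (an assumption): y = 2x + 1 with small alternating noise.
xs = list(range(20))
ys = [2 * x + 1 + (0.1 if x % 2 == 0 else -0.1) for x in xs]
cv_mse = cross_validated_mse(xs, ys, k=5)
print(f"cross-validated MSE: {cv_mse:.4f}")
```

A held-out error that is much larger than the training error is the usual signal that a model is overfitting.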

Large-Scale Data Challenges

Big data analytics requires specialized methodologies beyond conventional approaches. The three Vs - volume, velocity, and variety - necessitate distributed computing frameworks and parallel processing architectures. These advanced systems can manage the scale and complexity of modern datasets.

Scalability considerations inform every design decision. Models and pipelines must accommodate substantial data growth without unacceptable performance degradation, often relying on cloud infrastructure or specialized hardware to maintain efficiency.
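Distributed frameworks are beyond the scope of a short example, but one building block of scalable pipelines is easy to show on a single machine: computing statistics in one pass so that the full dataset never has to sit in memory. The sketch below uses Welford's online algorithm for the running mean and variance; the class name and the sample chunks are assumptions for illustration.

```python
class RunningStats:
    """One-pass mean and sample variance using Welford's online algorithm."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Sample variance (n - 1 denominator); 0.0 until two values are seen."""
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0

# Data arrives in chunks (hypothetical values); only one value is held at a time.
chunks = [[1.0, 2.0], [3.0, 4.0, 5.0]]
stats = RunningStats()
for chunk in chunks:
    for value in chunk:
        stats.update(value)
print(stats.count, stats.mean, stats.variance)
```

Because the state is just three numbers, memory use stays constant regardless of how many values are processed.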

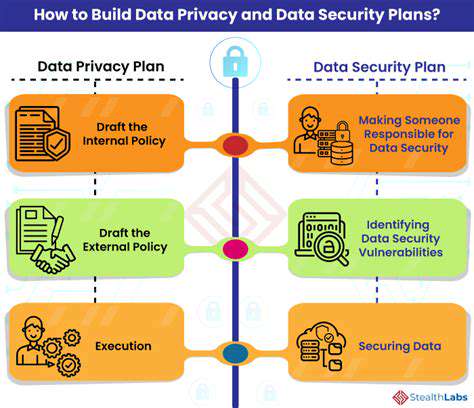

Ethical Analytical Practices

Ethical considerations carry particular weight in data analysis, especially when sensitive information is involved. Responsible data usage requires acknowledging and addressing potential biases. Maintaining transparency throughout the analytical process builds essential trust and accountability.

Data security and privacy protections are non-negotiable. Compliance with regulations like GDPR ensures responsible handling of personal information. Strict data governance policies must prevent misuse while enabling beneficial applications. Ethical considerations should guide every phase from collection through analysis to implementation.
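One common technical safeguard in this area is pseudonymization: replacing direct identifiers with keyed hashes before analysis. The sketch below uses HMAC-SHA256 from the Python standard library. The function name, the identifier format, and the key are assumptions for illustration; a real key would be generated securely and stored separately from the data. Pseudonymized data is generally still treated as personal data under GDPR, so this complements governance policies rather than replacing them.

```python
import hashlib
import hmac

def pseudonymize(participant_id, secret_key):
    """Return a stable keyed hash that stands in for the raw identifier."""
    digest = hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

# Placeholder key for the example only; never hard-code a real key.
EXAMPLE_KEY = b"example-key-do-not-use"

token_a = pseudonymize("participant-001", EXAMPLE_KEY)
token_a_again = pseudonymize("participant-001", EXAMPLE_KEY)
token_b = pseudonymize("participant-002", EXAMPLE_KEY)

print(token_a == token_a_again)  # same input and key give the same token
print(token_a == token_b)        # different participants give different tokens
```

Using a keyed hash rather than a plain hash means that someone who knows the identifier format cannot rebuild the mapping without the key.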

Operational Deployment Strategies

Model deployment integrates predictive capabilities into operational environments for real-time application. This phase demands careful infrastructure planning to support continuous model operation at scale.

Ongoing performance monitoring is equally critical. Tracking key metrics allows for timely adjustments as data patterns evolve. This maintenance ensures models remain accurate and relevant despite changing conditions in the operational environment.
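A minimal form of such monitoring is a rolling accuracy check that raises a flag when recent performance falls well below the level measured at validation time. The sketch below is a hypothetical illustration: the class name, baseline, tolerance, and window size are assumptions, and it presumes that true outcomes eventually become available for comparison.

```python
from collections import deque

class AccuracyMonitor:
    """Track rolling accuracy and flag drops below baseline minus tolerance."""

    def __init__(self, baseline, tolerance=0.1, window=50):
        self.baseline = baseline    # accuracy measured during validation
        self.tolerance = tolerance  # acceptable drop before flagging
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual):
        """Store one prediction outcome; return True if review is needed."""
        self.outcomes.append(prediction == actual)
        return self.needs_review()

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        # Wait for a full window so a few early errors do not trigger alerts.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

monitor = AccuracyMonitor(baseline=0.9, tolerance=0.1, window=10)
for _ in range(10):
    flagged_when_healthy = monitor.record(1, 1)   # ten correct predictions
for _ in range(3):
    flagged_after_errors = monitor.record(1, 0)   # three recent mistakes
print(flagged_when_healthy, flagged_after_errors)
```

A flag from a monitor like this would typically prompt investigation or retraining rather than an automatic change to the deployed model.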

Ethical Considerations and Future Directions

Commitment to Transparency

Research transparency builds essential public trust and ensures ethical rigor. Full disclosure of methodologies, datasets, and findings enables scrutiny and replication, establishing accountability standards. This openness helps identify potential biases while validating research conclusions. Public access to information fosters broader engagement with the implications of scientific progress.

Bias Mitigation Strategies

Conscious effort is needed to counteract unconscious biases that can influence research design and interpretation. Proactively identifying and correcting bias helps ensure equitable outcomes across diverse populations. This requires critical examination of assumptions and incorporation of multiple perspectives throughout the research lifecycle.

Participant diversity deserves particular attention to avoid reinforcing existing inequalities. Intentional recruitment strategies that reflect population diversity yield more robust, generalizable results.
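On the analytical side, one basic check for uneven outcomes is to report a model's accuracy separately for each participant group and look at the gap between the best and worst served. The sketch below is a simplified illustration with invented group labels and predictions; the function names are assumptions, and a real audit would use more than one metric and account for group sample sizes.

```python
from collections import defaultdict

def accuracy_by_group(groups, y_true, y_pred):
    """Return {group: accuracy} computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

def largest_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    values = list(per_group.values())
    return max(values) - min(values)

# Hypothetical data: the model is right for every "A" case
# but only half of the "B" cases.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

per_group = accuracy_by_group(groups, y_true, y_pred)
gap = largest_gap(per_group)
print(per_group, gap)
```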

Equitable Benefit Distribution

Research advancements should serve all societal segments equally. Targeted initiatives must bridge accessibility gaps in technology and knowledge dissemination. Practical applications should prioritize underserved communities to maximize societal benefit.

Intellectual Property Balance

The tension between innovation protection and knowledge sharing presents ongoing challenges. While intellectual property rights incentivize discovery, they must not obstruct access to transformative technologies. Finding equilibrium between these competing priorities remains crucial for sustainable progress.

Privacy Safeguards

Participant confidentiality constitutes an ethical imperative. Clear communication about data practices empowers individuals to make informed participation decisions. Strict adherence to privacy regulations maintains public confidence in research institutions.

Responsible Technological Development

Rapid innovation necessitates careful impact assessment. Comprehensive evaluation of potential societal consequences - both positive and negative - should guide technological advancement. Inclusive stakeholder engagement ensures balanced development trajectories that serve collective interests.

Accountability Frameworks

Robust oversight mechanisms safeguard research integrity. Independent review boards and ethical guidelines help identify and resolve concerns throughout the research process. Transparent accountability practices maintain public trust in scientific endeavors.