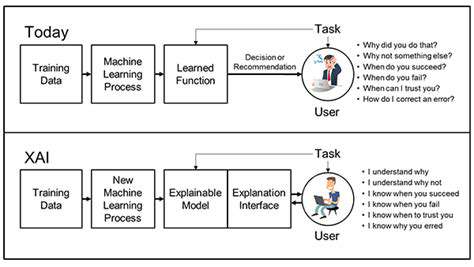

Understanding the Need for XAI

Explainable AI (XAI) is crucial in the development of ethical AI systems because it addresses the significant concern of transparency and accountability. AI models, particularly complex deep learning algorithms, often operate as black boxes, making it difficult to understand how they arrive at their decisions. This lack of transparency poses challenges in identifying and mitigating potential biases, ensuring fairness, and building trust in AI systems. XAI techniques aim to shed light on the inner workings of these models, allowing developers and users to comprehend the reasoning behind AI decisions, ultimately fostering confidence and responsible deployment.

Without XAI, the potential for unintended consequences and unfair outcomes increases. Imagine an AI system that screens loan applications and, with no human in the loop, discriminates against certain demographic groups. If the system cannot explain its decisions, it is nearly impossible to identify and rectify the underlying bias. XAI methods provide a way to scrutinize the decision-making process so that such biases can be detected and corrected, promoting more equitable and just outcomes.

XAI Techniques and Methods

A variety of techniques contribute to XAI, each offering a unique perspective on how AI arrives at its conclusions. These methods range from simple explanations, like highlighting the features most influential in a classification decision, to more sophisticated techniques like counterfactual explanations, which illustrate how a different input might lead to a different output. Furthermore, visualization tools play a significant role in XAI, helping to portray the decision-making process in an understandable format for humans. These methods collectively aim to unpack the complexity of AI models, making their reasoning accessible and transparent.
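As a minimal sketch of two of the ideas above, the example below computes per-feature contributions and a single-feature counterfactual for a linear scoring model. The model, its feature names, weights, bias, and threshold are all hypothetical and chosen only for illustration; real models are rarely this simple, and counterfactual search for non-linear models needs an optimization procedure rather than a closed-form step.

```python
from typing import Dict, Optional

# Hypothetical linear credit-scoring model. The feature names, weights,
# bias, and threshold are made up for illustration.
WEIGHTS: Dict[str, float] = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -1.0
THRESHOLD = 0.0  # a score at or above the threshold means "approve"


def score(x: Dict[str, float]) -> float:
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def contributions(x: Dict[str, float]) -> Dict[str, float]:
    """Per-feature contribution (weight * value), largest magnitude first."""
    contrib = {name: WEIGHTS[name] * x[name] for name in WEIGHTS}
    return dict(sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True))


def counterfactual(x: Dict[str, float], feature: str) -> Optional[Dict[str, float]]:
    """Change a single feature just enough to bring the score to the threshold."""
    w = WEIGHTS[feature]
    if w == 0:
        return None  # this feature cannot move the score
    changed = dict(x)
    changed[feature] = x[feature] + (THRESHOLD - score(x)) / w
    return changed


# Made-up applicant who falls below the approval threshold.
applicant = {"income": 2.0, "debt": 1.5, "years_employed": 1.0}
print("score:", score(applicant))
print("contributions:", contributions(applicant))
print("counterfactual on income:", counterfactual(applicant, "income"))
```

The contributions answer "which features mattered most for this decision", while the counterfactual answers "what would have had to be different for the outcome to change".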

One prominent XAI technique is the use of rule-based systems to explain AI decisions. These systems express the reasoning behind a specific prediction as explicit, step-by-step rules that a person can follow. Another method relies on attention mechanisms in neural networks, which indicate the parts of the input that the model weighted most heavily when making its decision. Combining these techniques gives a fuller picture of how AI models function, paving the way for more ethical and responsible AI development.
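To make the attention idea concrete, here is a small sketch of scaled dot-product attention weights over a handful of tokens. The tokens, query vector, and key vectors are invented for the example and do not come from a trained model. Attention weights show where a layer placed its weight, but they are not guaranteed to be a faithful account of why the model produced its output, so they are best read as one signal among several.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: List[float], keys: List[List[float]]) -> List[float]:
    """Scaled dot-product attention weights of one query over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


# Hypothetical tokens and vectors, chosen by hand for illustration.
tokens = ["the", "loan", "was", "denied"]
query = [1.0, 0.0]
keys = [[0.1, 0.9], [0.8, 0.2], [0.0, 0.5], [2.0, 0.1]]

weights = attention_weights(query, keys)
for token, weight in sorted(zip(tokens, weights), key=lambda tw: tw[1], reverse=True):
    print(f"{token:>8}: {weight:.3f}")
```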

XAI in Different AI Applications

Explainable AI is becoming increasingly relevant across a broad range of applications. In healthcare, XAI can help doctors understand how AI systems diagnose diseases, leading to more informed treatment plans and improved patient outcomes. In finance, XAI can provide insights into how AI models assess risk, enabling better risk management and financial decision-making. Furthermore, in autonomous vehicles, XAI can be used to explain the decision-making process of the vehicle, fostering trust and safety in these systems. The applicability of XAI is expanding, impacting various sectors and highlighting its vital role in ensuring ethical AI practices.

The use of XAI in autonomous systems is particularly critical. By understanding how an autonomous vehicle makes a decision, such as choosing to brake or steer in a specific situation, we can evaluate its safety and reliability. This transparency is essential for building trust in these systems, which are increasingly integrated into our daily lives. Moreover, XAI can help identify potential biases or errors in the system's decision-making process, allowing for proactive adjustments and improvements.

Ethical Considerations in XAI

While XAI offers significant benefits, it's essential to consider the ethical implications of its implementation. The complexity of some XAI methods can introduce new challenges, potentially obscuring rather than clarifying the decision-making process. Careful consideration must be given to the potential for misuse of XAI, such as manipulating or misrepresenting explanations for malicious purposes. The development of robust ethical guidelines and standards for the use of XAI is paramount. This includes ensuring fairness, transparency, and accountability in the creation and application of XAI techniques, which reduces the risk of harm from their deployment.

Another crucial ethical consideration is the potential for bias amplification. While XAI can help identify biases in AI models, if the underlying data used to train the model contains biases, the explanations generated by XAI may perpetuate those biases. Therefore, it is important to address the biases in the data itself to ensure that the explanations produced by XAI are not misleading or discriminatory. This necessitates a holistic approach that considers both the data and the methods employed in XAI.

Challenges and Future Directions

Understanding the Need for Explainability

Explainable AI (XAI) is crucial in building ethical AI systems because it allows us to understand how AI models arrive at their decisions. This transparency is essential for building trust in AI systems and ensuring that they are used responsibly. Without understanding the reasoning behind an AI's output, it becomes difficult to identify potential biases, errors, or unintended consequences. This lack of explainability can lead to unfair or discriminatory outcomes, undermining the very principles of ethical AI.

Furthermore, understanding the internal workings of an AI model helps in debugging and improving its performance. If we know *why* a model is making a particular decision, we can identify and address any flaws in its training or logic, ultimately leading to a more reliable and accurate AI system.

Addressing Algorithmic Bias

AI models are trained on data, and if that data reflects existing societal biases, the AI system will likely perpetuate those biases. XAI plays a vital role in detecting and mitigating these biases. By understanding the factors contributing to a model's predictions, we can identify and address the underlying biases within the training data. This is crucial for ensuring fairness and avoiding discrimination in AI applications.

Techniques such as fairness-aware learning and bias detection algorithms can be integrated with XAI methods to proactively identify and reduce bias in the decision-making process of AI systems. This proactive approach is critical for creating AI systems that are equitable and just.
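One simple bias check that can sit alongside XAI methods is the demographic parity difference: the gap between the highest and lowest approval rates across groups. The sketch below assumes binary decisions (1 for approve, 0 for reject) and a group label per decision; the data and the group names "A" and "B" are made up. A large gap is a prompt for further investigation, not proof of discrimination on its own.

```python
from typing import Dict, Hashable, List


def selection_rates(decisions: List[int], groups: List[Hashable]) -> Dict[Hashable, float]:
    """Fraction of positive decisions (1 = approve) within each group."""
    totals: Dict[Hashable, int] = {}
    approved: Dict[Hashable, int] = {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + decision
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions: List[int], groups: List[Hashable]) -> float:
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two hypothetical groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions, groups))  # 0.5
```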

Ensuring Robustness and Reliability

AI systems must be robust and reliable so that they operate correctly and consistently in various contexts. A lack of explainability can obscure vulnerabilities and unexpected behaviors. XAI helps us understand the conditions under which an AI model might fail, which matters most in safety-critical applications where a failure could have catastrophic consequences. By scrutinizing the reasoning process of the AI, we can identify weaknesses and improve its resilience to external factors.
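One way to probe for fragile regions of a model, sketched here under simple assumptions, is to add small random noise to an input and count how often the prediction changes. The classifier `predict`, the noise size `eps`, and the sample inputs are hypothetical; this is a rough stability check rather than a formal robustness guarantee.

```python
import random
from typing import Callable, List


def predict(x: List[float]) -> int:
    """Hypothetical linear classifier used only for this illustration."""
    return 1 if 2.0 * x[0] - x[1] - 1.0 >= 0 else 0


def flip_rate(
    f: Callable[[List[float]], int],
    x: List[float],
    eps: float = 0.1,
    trials: int = 200,
    seed: int = 0,
) -> float:
    """Fraction of small random perturbations of x that change the prediction."""
    rng = random.Random(seed)  # local generator so results are repeatable
    base = f(x)
    flips = 0
    for _ in range(trials):
        noisy = [v + rng.uniform(-eps, eps) for v in x]
        if f(noisy) != base:
            flips += 1
    return flips / trials


far_from_boundary = [5.0, 1.0]
near_boundary = [1.0, 0.99]
print("far:", flip_rate(predict, far_from_boundary))
print("near:", flip_rate(predict, near_boundary))
```

Inputs with a high flip rate sit close to a decision boundary, which makes them good candidates for closer inspection with the explanation methods discussed earlier.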

Overcoming the Complexity of Deep Learning Models

Deep learning models, particularly large neural networks with many layers, are often perceived as black boxes. This complexity makes it difficult to understand how these models arrive at their decisions, and XAI methods are crucial for demystifying them. Approaches such as visualizing learned representations, inspecting attention weights, and computing saliency maps help shed light on the internal workings of deep learning models, allowing us to gain insight into their decision-making process.
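A saliency map scores each input feature by how strongly the output responds to a small change in that feature. The sketch below approximates this with central finite differences on a tiny hand-written network; the weights `W1` and `W2` and the input are hypothetical, and in practice a deep learning framework would compute the gradients by automatic differentiation instead.

```python
import math
from typing import Callable, List

# Hypothetical weights for a tiny network: 3 inputs -> 2 tanh units -> sigmoid.
W1 = [[1.0, -2.0, 0.0], [0.5, 1.0, 0.0]]
W2 = [1.0, -1.0]


def model(x: List[float]) -> float:
    """Forward pass of the toy network; returns a value between 0 and 1."""
    hidden = [math.tanh(sum(w * v for w, v in zip(row, x))) for row in W1]
    z = sum(w * h for w, h in zip(W2, hidden))
    return 1.0 / (1.0 + math.exp(-z))


def saliency(f: Callable[[List[float]], float], x: List[float], h: float = 1e-5) -> List[float]:
    """Absolute sensitivity of f to each input, via central finite differences."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        scores.append(abs(f(up) - f(down)) / (2 * h))
    return scores


x = [0.2, -0.1, 0.7]
sal = saliency(model, x)
for index, value in enumerate(sal):
    print(f"feature {index}: {value:.4f}")
```

The third feature has zero weight in both hidden units, so its saliency is zero: the model ignores it regardless of its value.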

Developing Trust and Acceptance in AI Systems

Building trust in AI systems is essential for their widespread adoption and successful integration into various aspects of our lives. XAI plays a critical role in fostering trust by providing transparency and accountability. When users understand how an AI system functions, they are more likely to accept and trust its outputs. This trust is paramount for ethical and responsible AI adoption, particularly in high-stakes applications like healthcare and finance.

Evaluating and Monitoring AI Systems

Continuous evaluation and monitoring are crucial for ensuring AI systems remain aligned with ethical guidelines and societal values. XAI provides the necessary tools for this ongoing evaluation. By understanding how AI models make decisions, we can identify deviations from expected behavior or potential biases that may emerge over time. This ongoing monitoring is essential for maintaining the integrity and trustworthiness of AI systems throughout their operational lifespan.
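As one possible way to monitor explanations over time, the sketch below compares each feature's share of total attribution between a reference window and a current window, and flags features whose share has shifted by more than a threshold. The attribution values, feature names, and the 0.15 threshold are all made up; the attributions are assumed to come from some upstream explanation method that is not shown here.

```python
from typing import Dict, List

Attribution = Dict[str, float]


def attribution_shares(rows: List[Attribution]) -> Dict[str, float]:
    """Each feature's share of the total mean absolute attribution."""
    features = rows[0].keys()
    mean_abs = {f: sum(abs(row[f]) for row in rows) / len(rows) for f in features}
    total = sum(mean_abs.values())
    return {f: value / total for f, value in mean_abs.items()}


def drifted_features(
    reference: List[Attribution],
    current: List[Attribution],
    threshold: float = 0.15,  # hypothetical alert threshold
) -> List[str]:
    """Features whose attribution share moved by more than the threshold."""
    ref = attribution_shares(reference)
    cur = attribution_shares(current)
    return [f for f in ref if abs(cur[f] - ref[f]) > threshold]


# Made-up per-prediction attributions from two time windows.
reference = [
    {"income": 0.6, "debt": -0.3, "zip_code": 0.1},
    {"income": 0.5, "debt": -0.4, "zip_code": 0.1},
]
current = [
    {"income": 0.3, "debt": -0.3, "zip_code": 0.4},
    {"income": 0.2, "debt": -0.4, "zip_code": 0.4},
]

flagged = drifted_features(reference, current)
print("features with shifted influence:", flagged)
```

In this toy data the model has started leaning on `zip_code` much more than before, which is the kind of change that would warrant a fairness review.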