Defining Ethical Boundaries in AI-Powered Learning Environments

Establishing Clear Guidelines

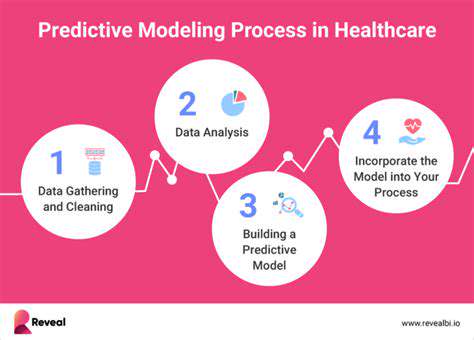

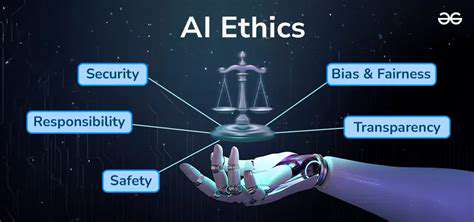

Setting ethical limits for artificial intelligence in education is one of the most pressing challenges in modern pedagogy. Thorough guidelines are not merely recommended; they are a precondition for responsible innovation. They must cover every stage of AI implementation, from how student data is gathered to how algorithms are designed so that they genuinely support learning without causing harm.

These guidelines are more than rules. They represent our collective commitment to developing technology that respects human dignity while improving educational outcomes. Without them, we risk building systems that inadvertently reinforce harmful stereotypes or make decisions that damage students' academic progress.

Ensuring Transparency and Accountability

Transparency is more than a buzzword in educational AI; it is the foundation of trust between the technology and the people who use it. Students, teachers, and parents deserve a clear, accessible explanation of how an AI system reaches its conclusions about learning paths, assessments, and recommendations.

Accountability presents an even greater challenge. When an AI tool misjudges student work or recommends inappropriate content, there must be clear procedures for determining who is responsible and for correcting the error. That means establishing straightforward channels through which students, families, and teachers can raise concerns, and a defined process for adjusting the system in response.

Addressing Bias and Fairness

The issue of bias in educational AI deserves particular attention because these systems learn from historical data that may reflect societal prejudices. We must implement rigorous procedures to examine training data for hidden assumptions that could disadvantage certain student groups.

True fairness goes beyond surface-level equality: it requires actively designing systems that account for students' differing learning needs, cultural backgrounds, and individual circumstances. This is not a one-time fix but an ongoing commitment to regular audits and updates as we learn more about how different students interact with AI tools.

Promoting Human Oversight and Control

Despite AI's impressive capabilities, human judgment remains irreplaceable in education. Teachers must always maintain the ability to override AI recommendations when they conflict with professional judgment about what's best for a particular student. This delicate balance between technological assistance and human expertise defines ethical AI implementation in schools.
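One way to make teacher override concrete is for the software to treat the teacher's decision as final and to require a short reason, so that overrides can be reviewed later. The sketch below assumes a hypothetical `Recommendation` record; the class, its fields, and the reason requirement are illustrative choices, not features of any particular product.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Recommendation:
    """Hypothetical record of one AI suggestion for one student."""
    student_id: str
    ai_suggestion: str
    teacher_decision: Optional[str] = None
    override_reason: Optional[str] = None
    history: List[Tuple[str, str]] = field(default_factory=list)

    def override(self, decision: str, reason: str) -> None:
        """Record a teacher's decision; a non-empty reason is required for later review."""
        if not reason.strip():
            raise ValueError("An override must include a reason.")
        self.teacher_decision = decision
        self.override_reason = reason
        self.history.append((decision, reason))

    def final_action(self) -> str:
        """The teacher's decision, when present, takes precedence over the AI's suggestion."""
        if self.teacher_decision is not None:
            return self.teacher_decision
        return self.ai_suggestion


rec = Recommendation(student_id="s-001", ai_suggestion="review module 3")
print(rec.final_action())  # review module 3
rec.override("advance to module 4", "Recent project work shows mastery of module 3.")
print(rec.final_action())  # advance to module 4
```

Keeping a history of overrides, rather than silently replacing the AI's output, also gives auditors a record of where the system and teachers disagree.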

Investing in teacher training becomes crucial here. Educators need support to develop the skills required to effectively supervise AI tools while maintaining their central role in the learning process. Only through such professional development can we ensure technology enhances rather than replaces the human elements of teaching.

Addressing Bias in AI Algorithms for Educational Applications

Understanding Bias in AI

Bias in educational AI usually originates less in the technology itself than in the data we feed it, although design choices can introduce bias as well. Historical performance data, for example, may unintentionally reflect societal inequities, and an AI trained on that data can perpetuate those patterns unless we intervene.

Data Collection and Representation

Critically examining our data sources is the first line of defense against biased algorithms. Are we collecting information from diverse student populations? Have we considered how our measurement tools themselves might introduce distortion?
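A simple first check is to compare how often each group appears in the data with that group's share of the student population the system will serve. The sketch below is a minimal illustration under stated assumptions: `representation_gaps`, the `school_type` attribute, the population shares, and the 5-percentage-point tolerance are all hypothetical placeholders.

```python
from collections import Counter


def representation_gaps(records, group_key, population_shares, tolerance=0.05):
    """Return groups whose share of the data falls short of their population share by more than `tolerance`."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected_share in population_shares.items():
        observed_share = counts.get(group, 0) / total if total else 0.0
        shortfall = expected_share - observed_share
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps


# Hypothetical training set: 8 records from urban schools, 2 from rural schools.
records = [{"school_type": "urban"}] * 8 + [{"school_type": "rural"}] * 2
# Hypothetical population the system will serve: 60% urban, 40% rural.
population = {"urban": 0.6, "rural": 0.4}
print(representation_gaps(records, "school_type", population))  # {'rural': 0.2}
```

A check like this only catches under-representation by count; it says nothing about whether the measurements themselves are distorted, which still needs human review.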

Algorithmic Design and Evaluation

Creating fair algorithms requires intentional design choices from the outset. We need evaluation methods that look beyond overall accuracy to examine how different student groups experience the system's outputs.
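Disaggregated evaluation, computing the same metric separately for each group, is one straightforward way to look beyond overall accuracy. In this minimal sketch the labels, predictions, and group names are made up for illustration, and plain accuracy stands in for whatever metric a real evaluation would use.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each group instead of one overall number."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(per_group):
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Made-up labels and predictions for eight students in two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)                 # 0.75
print(per_group)               # {'A': 1.0, 'B': 0.5}
print(largest_gap(per_group))  # 0.5
```

In this toy example, an overall accuracy of 75% conceals a system that is always right for group A and right only half the time for group B.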

Ethical Frameworks for AI in Education

Developing comprehensive ethical guidelines helps institutions navigate the complex landscape of educational AI. These documents should address not just legal compliance but fundamental questions about the kind of learning environments we want to create.

Addressing Bias in Specific Educational Applications

Different AI tools demand tailored approaches. Adaptive learning platforms need safeguards against reinforcing stereotypes, while automated grading systems require scrutiny of their evaluation criteria for hidden biases.

Transparency and Explainability in AI Models

When students and teachers can understand an AI's decision-making process, they're better equipped to identify and address potential issues. Explainable AI techniques help demystify these complex systems while building trust in their applications.
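For simple linear scoring models, one basic explainability technique is to report each input's contribution (its weight times its value) alongside the score. The sketch below assumes a hypothetical model; the feature names and weights are invented for illustration. More complex models need post-hoc methods such as SHAP or LIME, which approximate a similar per-feature breakdown.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return a linear model's score and each feature's contribution (weight * value),
    ranked from most to least influential by absolute size."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical model and student; names and weights are invented for illustration.
weights = {"quiz_average": 0.5, "missed_assignments": -2.0, "hours_practiced": 1.5}
student = {"quiz_average": 70, "missed_assignments": 3, "hours_practiced": 2}

score, ranked = explain_linear_score(weights, student)
print(score)  # 32.0
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
# quiz_average: +35.0
# missed_assignments: -6.0
# hours_practiced: +3.0
```

A teacher reading this output can see at once that missed assignments pulled the score down, which is the kind of insight that makes a questionable recommendation easier to spot and challenge.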

Protecting Student Privacy and Data Security in AI Educational Systems

Protecting Student Data in Educational Settings

Student privacy protection extends far beyond regulatory compliance. It's about creating an environment where learners feel safe to explore, make mistakes, and grow without fear of inappropriate data exposure. This requires implementing security measures that evolve alongside emerging threats.

Legal and Ethical Considerations

While laws such as the U.S. Family Educational Rights and Privacy Act (FERPA) provide essential frameworks, ethical data practices often go further than legal minimums. A genuine commitment to student privacy means regularly reviewing and updating policies as technology and societal expectations change.

Data Minimization and Purpose Limitation

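A key principle that is often overlooked is collecting only what is strictly necessary and using it only for the purpose for which it was collected. Limiting data collection to essential educational purposes reduces privacy risk directly, since data that is never gathered cannot be exposed, and it can usually be done without undermining the system's effectiveness.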
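Purpose limitation can be enforced in code with an allowlist that maps each declared purpose to the only fields it may use. This minimal sketch assumes a hypothetical `ALLOWED_FIELDS` table and made-up field names; a real system would also need to govern storage, retention, and access.

```python
# Hypothetical allowlist: each declared purpose may use only these fields.
ALLOWED_FIELDS = {
    "reading_recommendations": {"student_id", "grade_level", "reading_level"},
    "attendance_alerts": {"student_id", "attendance_rate"},
}


def minimize(record, purpose):
    """Return only the fields approved for `purpose`; refuse purposes that were never declared."""
    if purpose not in ALLOWED_FIELDS:
        raise PermissionError(f"No approved data use for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


# Made-up student record containing more than any single purpose needs.
record = {
    "student_id": "s-001",
    "grade_level": 7,
    "reading_level": "B",
    "attendance_rate": 0.93,
    "family_income_band": "low",
}
print(minimize(record, "reading_recommendations"))
# {'student_id': 's-001', 'grade_level': 7, 'reading_level': 'B'}
```

Refusing undeclared purposes outright, rather than passing the full record through, makes any new use of student data an explicit policy decision instead of a quiet default.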

Data Security Measures

Robust security depends on people as much as on technology. Technical safeguards such as encryption and access controls are necessary, but regular training ensures that every member of the school community understands their role in protecting sensitive information, creating a culture of shared responsibility.

Data Breach Preparedness and Response

Having a well-practiced response plan demonstrates an institution's seriousness about data protection. Preparing for potential breaches before they occur minimizes harm when incidents do happen.

Transparency and Communication

Clear, accessible privacy policies build trust with students and families. When institutions openly explain their data practices, they empower stakeholders to make informed decisions about technology use.

Student Involvement and Empowerment

Teaching students about data privacy transforms them from passive subjects to active participants in their own protection. This educational approach creates lasting habits that serve them beyond the classroom.

Promoting Student Agency and Autonomy in AI-Driven Educational Environments

Cultivating a Growth Mindset

Developing resilience becomes particularly important in AI-enhanced learning. When students view challenges as opportunities, they're better equipped to use AI tools effectively while maintaining their academic independence.

Defining and Delimiting AI's Role

Clarifying what AI can and can't do prevents overreliance on technology. Students should understand these systems as assistants rather than replacements for their own thinking and effort.

Providing Choice and Control Over Learning Paths

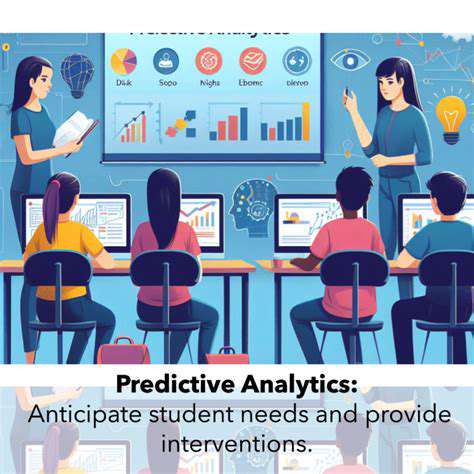

Personalized learning works best when students help direct their journeys. AI recommendations should inform rather than dictate educational choices, preserving learner autonomy.

Promoting Critical Thinking and Evaluation Skills

In an age of AI-generated content, analytical skills become more valuable than ever. Teaching students to question and verify information prepares them for lifelong learning in technology-rich environments.

Designing Collaborative Learning Experiences

AI can facilitate meaningful peer interactions when designed thoughtfully. The best systems enhance rather than replace the social dimensions of learning that are crucial for development.

Ensuring Equity and Access for All

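Technological advancement must not create new divides. Careful implementation, including attention to device and internet access and to accessibility for students with disabilities, helps ensure that all students benefit from AI tools regardless of their background or circumstances.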