Introduction to AI in Educational Program Assessment

Defining AI in Educational Assessment

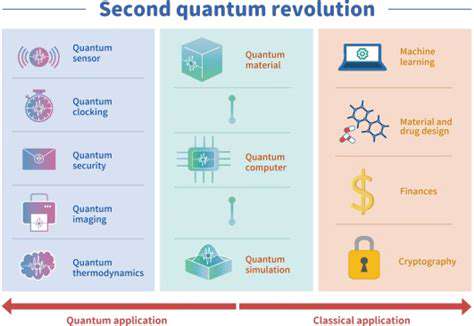

Artificial intelligence (AI) is rapidly transforming various sectors, and education is no exception. In the context of educational program assessment, AI refers to the use of intelligent systems, algorithms, and machine learning models to automate, analyze, and interpret data related to student learning and program effectiveness. This encompasses a broad range of applications, from automated grading of multiple-choice tests to sophisticated analyses of student interactions within online learning platforms, ultimately aiming to provide more insightful and objective evaluations of educational programs.

Automated Grading and Feedback

One of the most impactful applications of AI in education is automated grading. AI-powered tools can grade objective assessments such as multiple-choice questions quickly and consistently, and they can also score short-answer responses and some forms of coding assignments, although accuracy on open-ended work depends on the tool and the task. This frees up educators' time, allowing them to focus on providing more personalized feedback and support to students. Furthermore, AI can provide immediate feedback, accelerating the learning process and allowing students to identify and correct errors in real time.

Beyond simple grading, AI can also analyze student work to provide more nuanced and comprehensive feedback. For example, AI can identify patterns in student errors, suggesting areas where students need additional support or remediation.
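As a rough illustration of grading against an answer key and then looking for patterns in errors, the sketch below scores multiple-choice submissions and counts which questions are missed most often. The answer key, question IDs, and submissions are invented for this example; a real tool would read them from an assessment platform.

```python
from collections import Counter

# Hypothetical answer key and submissions, invented for illustration only.
ANSWER_KEY = {"q1": "B", "q2": "D", "q3": "A"}

submissions = [
    {"q1": "B", "q2": "C", "q3": "A"},
    {"q1": "B", "q2": "A", "q3": "C"},
    {"q1": "A", "q2": "D", "q3": "A"},
]


def grade(responses: dict, key: dict) -> float:
    """Return the fraction of questions in the key answered correctly."""
    correct = sum(1 for q, answer in key.items() if responses.get(q) == answer)
    return correct / len(key)


def most_missed(all_responses: list, key: dict) -> list:
    """Count wrong or missing answers per question, most-missed first."""
    misses = Counter()
    for responses in all_responses:
        for q, answer in key.items():
            if responses.get(q) != answer:
                misses[q] += 1
    return misses.most_common()


if __name__ == "__main__":
    for i, responses in enumerate(submissions, start=1):
        print(f"submission {i}: {grade(responses, ANSWER_KEY):.0%}")
    print("most missed:", most_missed(submissions, ANSWER_KEY))
```

A question that many students miss may point to a gap in instruction or to a poorly worded item, so the count is a prompt for review, not a verdict.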

Analyzing Student Performance Data

AI algorithms can analyze vast amounts of student performance data, going beyond individual grades to uncover patterns and trends in student learning. This data can be drawn from various sources, including learning management systems, assessments, and even student interactions within online discussions. By analyzing this data, educators can identify areas where students are struggling, pinpoint specific learning gaps, and adjust instructional strategies accordingly. The goal is to create a more personalized and effective learning experience for every student.

Improving Program Evaluation

AI can significantly enhance the evaluation of entire educational programs. By analyzing student performance data across multiple courses and programs, AI can identify strengths and weaknesses within the curriculum, teaching methods, and learning resources. This data-driven approach allows for more objective and comprehensive evaluations, leading to evidence-based improvements in program design and implementation. Moreover, AI can help identify which programs or interventions are most effective in promoting student success.

Personalized Learning Pathways

AI algorithms can personalize learning pathways for students, tailoring the learning experience to individual needs and preferences. By analyzing student performance data, AI can recommend specific learning materials, activities, and resources that best suit a student's current understanding and learning pace. This personalized approach can lead to improved student engagement, higher achievement levels, and a more effective use of educational resources.

Ethical Considerations in AI-Driven Assessment

While AI offers significant potential for improving educational program assessment, there are critical ethical considerations that must be addressed. Ensuring fairness and equity in AI-driven assessment is paramount. Bias in the data used to train AI models can lead to discriminatory outcomes, potentially disadvantaging certain student groups. Transparency in how AI systems make decisions is also essential, allowing educators to understand the reasoning behind the assessments and providing the opportunity for human oversight. Furthermore, safeguarding student privacy and data security is crucial in this evolving landscape.
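One simple way to start checking for uneven outcomes is to compare pass rates across student groups. The sketch below is a minimal example under stated assumptions: the group labels, scores, and passing threshold are all hypothetical, and a gap between groups is a signal for human review, not proof of bias in the model.

```python
from collections import defaultdict

# Hypothetical (group label, AI-assigned score) pairs for illustration.
records = [
    ("A", 82), ("A", 74), ("A", 65), ("A", 91),
    ("B", 58), ("B", 77), ("B", 62), ("B", 69),
]


def pass_rates_by_group(rows, threshold):
    """Map each group to the share of its students scoring at or above threshold."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for group, score in rows:
        totals[group] += 1
        if score >= threshold:
            passes[group] += 1
    return {group: passes[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest group pass rates."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    rates = pass_rates_by_group(records, threshold=70)
    print(rates, "gap:", largest_gap(rates))
```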

Analyzing Student Performance Data with AI

Data Collection and Preparation

Effective analysis of student performance data hinges on meticulous data collection and preparation. This involves gathering relevant information from various sources, including standardized test scores, classroom assessments, attendance records, and even self-reported student feedback. Careful consideration must be given to the quality and completeness of the data, ensuring accuracy and minimizing potential biases. Data preparation encompasses cleaning, transforming, and organizing the collected information into a format suitable for analysis, often involving the use of spreadsheets or specialized data management software. This stage is crucial, as inconsistencies or errors in the data can significantly skew the results and lead to inaccurate conclusions about student performance.

A critical aspect of data preparation is identifying and handling missing data points. Strategies for dealing with missing values, such as imputation or exclusion, need to be carefully considered, as these choices can affect the validity of the analysis. Furthermore, defining clear criteria for categorizing and grouping student data is essential for meaningful interpretation. This might involve creating performance levels, identifying specific subject areas, or differentiating between different student demographics.
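The two strategies mentioned above, exclusion and imputation, can be sketched in a few lines. The scores are hypothetical, `None` stands in for a missing value, and mean imputation is only one of several possible imputation methods.

```python
from statistics import mean

# Hypothetical assessment scores; None marks a missing value.
scores = [72.0, None, 88.0, 95.0, None, 65.0]


def drop_missing(values):
    """Exclusion: keep only the observed values."""
    return [v for v in values if v is not None]


def impute_mean(values):
    """Mean imputation: replace each missing value with the mean of the observed ones."""
    observed = drop_missing(values)
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


if __name__ == "__main__":
    print("excluded:", drop_missing(scores))
    print("imputed: ", impute_mean(scores))
```

Exclusion shrinks the sample, while mean imputation keeps the sample size but understates variability, which is one reason the choice can affect the validity of later analysis.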

Statistical Analysis Techniques

Various statistical analysis techniques can be applied to student performance data to gain valuable insights. Descriptive statistics, such as mean, median, and standard deviation, can provide a summary of the overall performance distribution, highlighting central tendencies and variability. This information can be useful for understanding the general performance trends of the student population. Furthermore, these insights can be used to identify areas where students may be struggling or excelling.
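A minimal sketch of these descriptive statistics, using Python's standard `statistics` module on a hypothetical list of scores:

```python
from statistics import mean, median, stdev

# Hypothetical test scores for one class.
scores = [55, 62, 70, 70, 78, 85, 98]


def summarize(values):
    """Return the mean, median, and sample standard deviation of the values."""
    return {
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),
    }


if __name__ == "__main__":
    for name, value in summarize(scores).items():
        print(f"{name}: {value:.2f}")
```

Comparing the mean with the median is a quick check on skew: here the mean sits above the median because a few high scores pull it upward.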

Beyond descriptive statistics, inferential statistics can be employed to explore relationships between different variables, such as student performance and factors like socioeconomic status or classroom engagement. Regression analysis, for instance, allows researchers to investigate how changes in specific factors are associated with changes in student performance. This can help estimate how strongly particular variables relate to student outcomes and point to potential areas for intervention or improvement, although an association in observational data does not by itself establish causation. Regression output also indicates whether the estimated relationships are statistically significant.
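To make the regression idea concrete, the sketch below fits a one-variable least-squares line by hand. The engagement hours and scores are invented for illustration, and a real analysis would normally use a statistics package that also reports standard errors and significance.

```python
# Hypothetical data: weekly hours of classroom engagement and test scores.
hours_engaged = [1, 2, 3, 4, 5]
test_scores = [52, 58, 61, 70, 74]


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    slope, intercept = fit_line(hours_engaged, test_scores)
    print(f"score = {intercept:.1f} + {slope:.1f} * hours")
```

The slope estimates how many score points are associated with one additional hour of engagement in this sample; it describes an association and not a causal effect.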

Correlation analysis is another powerful tool for uncovering potential relationships between different variables. By examining correlation coefficients, researchers can determine how strongly two variables are associated and whether the relationship is positive or negative. Correlation analysis is a useful first step in identifying which variables are most closely linked to student performance and therefore merit closer study.
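The correlation coefficient most often used for this purpose is Pearson's r, which ranges from -1 to 1. A minimal hand-written sketch follows; the attendance and score figures are hypothetical.

```python
from math import sqrt

# Hypothetical data: attendance rate (%) and final score per student.
attendance = [60, 70, 80, 90, 100]
final_scores = [55, 65, 62, 80, 85]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        raise ValueError("neither variable may be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    print(f"r = {pearson_r(attendance, final_scores):.2f}")
```

The sign of r gives the direction of the relationship and its magnitude gives the strength of the linear association.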

Interpreting and Visualizing Results

Once the statistical analysis is complete, the results need to be interpreted in the context of the research questions and objectives. This involves drawing conclusions about the patterns and trends revealed by the data, and articulating these conclusions clearly and concisely. Understanding the limitations of the data and the potential sources of bias is also crucial for a robust interpretation. For example, if the sample size is small or the data collection method has limitations, it's essential to acknowledge these factors when interpreting the results.

Visual representations of the data, such as charts and graphs, can significantly enhance the understanding and communication of findings. They highlight key trends, patterns, and outliers more effectively than tables of numbers alone, and they make complex statistical analyses accessible to a wider audience, including educators, policymakers, and parents.

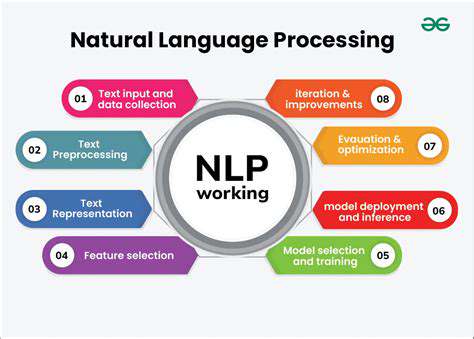

Evaluating Program Effectiveness Through Natural Language Processing (NLP)

Understanding Program Goals and Objectives

A crucial first step in evaluating program effectiveness is a thorough understanding of the program's goals and objectives. These should be clearly defined and measurable, outlining what the program intends to achieve and how success will be determined. Precisely defining these outcomes allows for a focused evaluation process, ensuring that the evaluation aligns with the program's intended impact. This initial step involves a careful review of the program's design documents, stakeholder input, and any existing data about the target population.

Beyond simply listing objectives, the evaluation should delve into the *why* behind these goals. What are the underlying theories and assumptions driving the program's design? Understanding these theoretical underpinnings is critical to interpreting the evaluation results. If the program's theoretical basis is flawed, the evaluation results may not accurately reflect the true effectiveness of the program's approach.

Measuring Program Outcomes and Impacts

Effective evaluation requires the development of metrics that accurately measure program outcomes and impacts. These metrics should be directly tied to the program's goals and objectives, ensuring that the data collected is relevant and meaningful. Quantifiable data, like participation rates, changes in knowledge or skills, or improvements in specific behaviors, are essential for demonstrating the program's impact. Qualitative data, such as participant feedback and observations, can complement quantitative data, providing a richer understanding of the program's effectiveness.

Choosing appropriate data collection methods is essential. Surveys, interviews, focus groups, and observations can all be used to gather relevant data. Whichever methods are chosen, the instruments must be reliable and valid and must be implemented carefully, so that the results can support informed conclusions and recommendations. Ethical issues also need attention when collecting data, particularly from vulnerable populations.

Determining appropriate timeframes for data collection is also critical. A short-term evaluation may only capture the immediate effects of the program, while a long-term evaluation can provide a more comprehensive view of its lasting impact. The timeframe should therefore match the kind of effect the evaluation is meant to detect.

Analyzing Data and Reporting Findings

Once data is collected, it needs to be carefully analyzed to identify trends and patterns. Statistical analysis can help to determine if the program has achieved its objectives and whether any changes in outcomes are statistically significant. This analysis should consider potential confounding factors that might influence the results. Interpreting the data requires careful consideration of the context in which the program was implemented.
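One way to check whether a difference in outcomes is statistically significant is a permutation test, sketched below for the difference in mean scores between two groups. This is only one of several suitable tests; the group names and scores are hypothetical, and the test does nothing to rule out confounding factors, which still have to be addressed in the study design.

```python
import random

# Hypothetical outcome scores for program participants and a comparison group.
participants = [88, 90, 92, 94, 95, 97]
comparison = [50, 52, 55, 58, 60, 61]


def _mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_resamples=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Shuffles the pooled scores repeatedly and counts how often a random
    split produces a mean difference at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(_mean(group_a) - _mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(_mean(pooled[:n_a]) - _mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value above zero.
    return (extreme + 1) / (n_resamples + 1)


if __name__ == "__main__":
    print(f"p = {permutation_p_value(participants, comparison):.4f}")
```

A small p-value means a difference this large would rarely arise from random group assignment alone.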

The evaluation report should clearly and concisely present the findings of the analysis. The report should include visualizations of data, such as charts and graphs, to make the information easily digestible. Clear communication of findings is essential for stakeholders to understand the evaluation results and for program improvement. The report should also include recommendations for improving the program based on the evaluation findings.

This phase involves the critical task of identifying any limitations in the evaluation process. Were there factors that could have influenced the results that weren't accounted for? Acknowledging these limitations adds a layer of credibility to the evaluation and helps to inform future planning and program adjustments. Thorough documentation of the evaluation process and limitations is essential for transparency and for informing future evaluations.

Identifying Key Performance Indicators (KPIs) and Measuring Impact

Choosing Relevant KPIs for AI in Education

Selecting the right Key Performance Indicators (KPIs) is crucial for evaluating the impact of AI in education. Instead of focusing on generic metrics like student enrollment, it's more beneficial to pinpoint metrics that directly reflect AI's role in enhancing learning outcomes. For example, measuring the improvement in student engagement through interactive learning platforms powered by AI is a more pertinent KPI than simply tracking the number of students using the platform. This shift in focus allows for a more nuanced understanding of AI's effectiveness in fostering deeper learning and improving student experience, rather than just superficial participation rates.

Another important KPI to consider is the reduction in teacher workload. AI can automate tasks like grading assignments, providing personalized feedback, and creating lesson plans, freeing up teachers to focus on more complex aspects of student development. Quantifying the time teachers save through AI-powered tools, and analyzing how that time is reallocated to activities like individual student support and curriculum development, provides a concrete measure of AI's contribution to educational efficiency and to the quality of teacher-student interaction.
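As a small sketch of how this workload KPI might be computed, the example below takes a teacher's weekly grading hours before and after adopting an AI tool and reports how the saved time was reallocated. The `WeeklyTimeLog` structure, its field names, and the figures are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class WeeklyTimeLog:
    """Hypothetical weekly time log for one teacher, in hours."""
    grading_hours_before: float
    grading_hours_after: float
    reallocated: dict = field(default_factory=dict)  # activity -> hours


def hours_saved(log):
    """Weekly grading hours saved after adopting the tool."""
    return log.grading_hours_before - log.grading_hours_after


def reallocation_shares(log):
    """Share of the saved time spent on each reallocated activity."""
    saved = hours_saved(log)
    if saved <= 0:
        return {}  # nothing was saved, so nothing to reallocate
    return {activity: hours / saved for activity, hours in log.reallocated.items()}


if __name__ == "__main__":
    log = WeeklyTimeLog(
        grading_hours_before=10.0,
        grading_hours_after=6.0,
        reallocated={"individual_support": 3.0, "curriculum_development": 1.0},
    )
    print("hours saved:", hours_saved(log))
    print("shares:", reallocation_shares(log))
```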

Measuring the Impact of AI on Student Outcomes

Assessing the impact of AI on student outcomes necessitates a multi-faceted approach. Beyond traditional assessments, it's essential to evaluate how AI tools are enhancing critical thinking skills, problem-solving abilities, and adaptability. This involves examining student performance on tasks requiring higher-order cognitive skills and tracking their progress in applying these skills in real-world scenarios. Analyzing how AI-driven personalized learning paths contribute to improved academic performance and a deeper understanding of subject matter is crucial for measuring the long-term benefits of AI in education.

Another critical aspect of measuring impact is analyzing student feedback on their learning experience. AI-powered platforms can collect data on student satisfaction and identify areas where the AI system is helping or falling short. Qualitative insights, such as student testimonials and survey responses, complement quantitative data from assessments and give a more complete picture of how AI tools affect student learning and well-being.

Tracking student retention rates in specific subjects or programs is also important. If AI-driven tools are effectively tailoring learning experiences to individual student needs, this should manifest in improved engagement and, ultimately, higher retention rates. This metric, along with the others, allows for a comprehensive analysis of AI's influence on student success and motivation within the educational environment.

The efficiency of AI-supported learning resources should also be evaluated. Metrics such as the time students spend actively engaged with learning materials and the completion rates of assigned tasks offer valuable insight. Measuring them helps gauge how effectively different AI-powered tools optimize the learning process and whether students are spending their time productively.

Finally, measuring the reduction in student dropout rates is crucial. By providing personalized support and targeted interventions, AI tools can help students stay engaged and motivated, which should lower the dropout rate. Tracking this metric provides a strong indicator of the effect AI has on student persistence and overall success in their educational journey.
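Retention and dropout rates are simple ratios, and the change between two cohorts is best reported in percentage points. The sketch below uses invented cohort sizes; a difference between cohorts is not by itself evidence that an AI tool caused it, since the cohorts may differ in other ways.

```python
def retention_rate(started, completed):
    """Share of students who started a program and were still enrolled at the end."""
    if started <= 0:
        raise ValueError("started must be positive")
    if not 0 <= completed <= started:
        raise ValueError("completed must be between 0 and started")
    return completed / started


def dropout_rate(started, completed):
    """Share of students who started but did not finish."""
    return 1.0 - retention_rate(started, completed)


def percentage_point_change(rate_before, rate_after):
    """Change between two rates, expressed in percentage points."""
    return (rate_after - rate_before) * 100


if __name__ == "__main__":
    # Hypothetical cohorts before and after an AI-supported tool was introduced.
    before = retention_rate(started=200, completed=160)
    after = retention_rate(started=200, completed=170)
    print(f"retention: {before:.0%} -> {after:.0%}")
    print(f"change: {percentage_point_change(before, after):+.1f} points")
```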