Ethical Considerations and Future Directions

Data Privacy and Security

Protecting the privacy of student data is paramount in AI-driven educational research. Robust data anonymization techniques and strict adherence to ethical guidelines are crucial to prevent unauthorized access and misuse. Researchers must obtain informed consent from participants, ensuring they understand how their data will be used and stored. Furthermore, clear protocols for data security and handling must be established and meticulously followed throughout the research process, from data collection to analysis and disposal.
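As one illustration of the anonymization step described above, the following minimal sketch pseudonymizes a student record before analysis. It assumes a hypothetical record layout (`student_id`, `name`, `email`, `score`) and a hypothetical secret key; neither comes from a specific study. Note that keyed hashing yields pseudonymization rather than full anonymization, since anyone holding the key can re-link records.

```python
import hashlib
import hmac

# Hypothetical direct identifiers; adjust to the actual dataset schema.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize_id(student_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest of the ID (stable for a given key)."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace student_id with its pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["student_id"] = pseudonymize_id(str(record["student_id"]), secret_key)
    return cleaned


# Example key only; in practice the key would be stored separately from the data.
key = b"example-key-not-for-real-use"
record = {"student_id": "S1024", "name": "Ada Example", "email": "ada@example.org", "score": 87}
safe = pseudonymize_record(record, key)
print(safe)
```

Because the same ID always maps to the same pseudonym under a given key, records for one student can still be linked across datasets without exposing the original identifier.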

Implementing secure cloud storage solutions and employing encryption techniques are essential steps in safeguarding sensitive information. This includes not only student data but also any personally identifiable information (PII) of teachers, administrators, or other stakeholders involved in the research. Transparency about data handling practices is vital to building trust with all parties involved and fostering a culture of responsible data management.

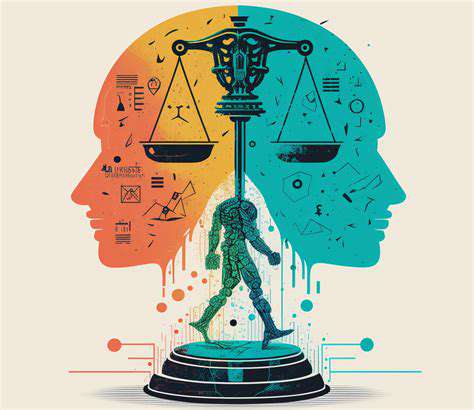

Bias Mitigation and Fairness

AI algorithms are only as good as the data they are trained on. If the training data reflects existing societal biases, the resulting AI models can perpetuate and even amplify those biases in educational contexts. Researchers must actively address potential biases in their datasets to ensure equitable outcomes for all students. This requires careful data curation, analysis, and potentially the implementation of techniques to identify and mitigate bias during the model development process.

Techniques such as fairness-aware learning algorithms, which aim to minimize the disparate impact of AI systems on different student groups, can be employed during model development. Moreover, ongoing monitoring and evaluation of the model's performance across student demographics are crucial for identifying and addressing biases that emerge in real-world applications.
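Monitoring for disparate impact can start with something as simple as comparing how often each group receives a positive prediction. The sketch below is one possible check, not a complete fairness audit; the group labels and predictions are made-up illustration data, and the function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives any positive predictions
    return min(rates.values()) / highest


# Made-up data: 1 means the model recommended the student for a program.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A ratio near 1 indicates similar selection rates across groups, while a low ratio signals that one group is selected far less often. What threshold warrants intervention is a judgment the research team would need to make for its own context.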

Transparency and Explainability

Understanding how AI models arrive at their conclusions is critical for building trust and ensuring accountability in educational research. Black-box models, whose decision-making process is opaque, are problematic in educational settings, where educators and parents often need clear explanations to understand and interpret the outcomes. Researchers should strive for transparency in their methods and model development.

Explainable AI (XAI) techniques can offer insights into the reasoning behind AI predictions. This allows for greater comprehension of the model's decision-making process, enabling educators to better understand how the AI model supports their students and identify potential areas for improvement. Developing and implementing XAI methods is essential for building trust and ensuring responsible use of AI in education.
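Permutation importance is one widely used, model-agnostic XAI technique: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal pure-Python version under stated assumptions; the toy model, the features (attendance rate and number of missed assignments), and the data are all hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Mean accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model: flags a student as at risk when attendance is below 0.8."""
    return 1 if row[0] < 0.8 else 0


# Each row: [attendance rate, missed assignments]; labels come from the toy model itself.
rows = [[0.95, 3], [0.60, 5], [0.85, 1], [0.70, 4], [0.90, 2], [0.50, 6]]
labels = [toy_model(row) for row in rows]

scores = permutation_importance(toy_model, rows, labels)
print(scores)
```

Because the toy model ignores the second feature, shuffling it never changes the predictions and its importance is zero, while shuffling attendance degrades accuracy. An educator reading such scores could see which inputs a prediction actually depends on.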

Accountability and Responsibility

Establishing clear lines of accountability is essential when AI systems are used in educational research. Who is responsible for the model's outputs and potential errors? Researchers, institutions, and developers all have a shared responsibility to ensure ethical use and address any negative consequences of AI implementation. Processes for handling errors and making adjustments are crucial to maintaining the integrity of the research process and minimizing harm.

Mechanisms for oversight and review are important to ensure that AI systems are used ethically and in a way that benefits all stakeholders. Independent review boards and ethical guidelines should be in place to address concerns and facilitate responsible development and deployment of AI in education.

Accessibility and Inclusivity

AI tools and educational resources should be accessible to all students, regardless of socioeconomic background, learning style, or disability. Ensuring accessibility is a critical ethical consideration in AI-driven educational research: the design and implementation of AI systems should account for the diverse needs of learners, including learners with disabilities, so that the resulting tools are inclusive and equitable.

Careful consideration of language barriers, cultural differences, and technical literacy levels is also vital. Providing support and resources so that diverse populations can benefit from AI applications is essential for achieving equitable outcomes and promoting effective learning for all.

Long-Term Impact and Societal Implications

The long-term impact of AI on education needs careful consideration. How will AI systems affect the nature of teaching and learning in the future? Researchers need to consider the potential consequences of AI-driven interventions, examining how they might shape the future of education and the workforce. This includes exploring the possibility of AI systems replacing certain roles, potentially leading to job displacement or requiring individuals to adapt to new skill sets.

The potential for AI to personalize learning experiences and improve outcomes for all students is significant. However, it is crucial to realize these benefits while mitigating potential harms and fostering an inclusive and equitable educational system. Long-term monitoring and evaluation of AI's impact are essential to guide future developments and ensure responsible implementation.