Understanding the Importance of Cleaning

Data cleaning, a critical initial step in any text analysis project, involves systematically identifying and removing or modifying inconsistencies, errors, and irrelevant information within the text data. This process is essential because raw text data often contains various forms of noise, including typos, irrelevant characters, and formatting inconsistencies. Ignoring these issues can lead to inaccurate analysis and unreliable results. Thorough cleaning ensures that the subsequent steps, like feature extraction and model training, are performed on a more accurate and reliable dataset.

Handling Missing Values

Missing values in text data can arise from various sources, such as data entry errors, incomplete records, or issues during data collection. Addressing these gaps effectively is crucial for maintaining data integrity. Strategies for handling missing values in text data often include imputation techniques, where missing values are estimated based on available data. Alternatively, records with missing values might be removed, though this approach should be carefully considered as it could lead to a loss of valuable information. Understanding the context and nature of missing values is key to selecting the most appropriate handling strategy.

Dealing with Noise and Irrelevant Data

Raw text data frequently contains noise, such as special characters, HTML tags, and irrelevant symbols. This noise can interfere with the accuracy and effectiveness of subsequent analysis steps. Text cleaning techniques are necessary to address this issue, focusing on removing or replacing these unwanted elements. This process involves identifying patterns of noise and applying appropriate cleaning methods to filter out these unwanted components while preserving the essential information within the text.

Normalization Techniques for Consistency

Normalization is a crucial aspect of data preprocessing, aiming to standardize the text data for consistency. This involves transforming different forms of the same data into a uniform format. For instance, converting all text to lowercase, handling different abbreviations or slang, and standardizing punctuation usage, contributes to a more consistent and manageable dataset. Normalization ensures that the analysis is not biased by variations in the text format, leading to more accurate and reliable results.

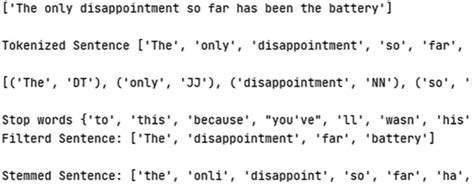

Tokenization: Breaking Down the Text

Tokenization, a fundamental text preprocessing step, involves dividing the text into individual units, known as tokens. These tokens can be words, phrases, or even characters. This process is crucial for preparing text data for various analyses. Appropriate tokenization strategies depend on the specific analysis task. Choosing the right tokenization strategy depends on the specific analysis task. For example, in sentiment analysis, a word-level tokenization might be suitable, while for n-gram analysis, a more sophisticated approach might be required.

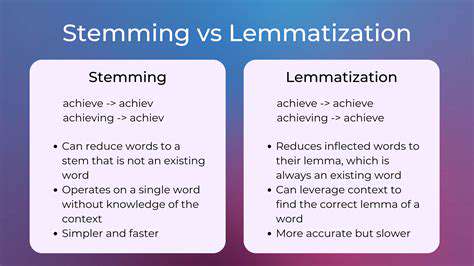

Stop Word Removal and Stemming/Lemmatization

Stop word removal and stemming/lemmatization are techniques used to reduce the dimensionality of the text data by removing common words (stop words) and reducing words to their root forms. This process helps to focus on the most significant words in the text, improving the efficiency and effectiveness of downstream tasks. Stop words like the, a, and is often do not provide significant meaning and are frequently removed. Stemming, on the other hand, reduces words to their root form, while lemmatization aims to produce the dictionary form of a word.