Establishing Robust Data Security Measures

Implementing Robust Access Control

Effective data security hinges on stringent access control measures. These measures should define and enforce who can access specific data, what actions they can perform on that data, and when they can access it. Implementing granular permissions is crucial, ensuring that only authorized personnel have access to sensitive information. This approach minimizes the risk of unauthorized access and subsequent data breaches.
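The who/what/when framing above can be sketched as a deny-by-default permission check. This is only an illustration: the `Permission` record, the `POLICY` table, and the role and resource names are hypothetical, not drawn from any particular access control product.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Permission:
    role: str             # who
    resource: str         # which data
    actions: frozenset    # what they may do
    start: time           # when access opens
    end: time             # when access closes


# Hypothetical policy table; a real system would load this from a managed store.
POLICY = [
    Permission("analyst", "customer_records", frozenset({"read"}),
               time(8, 0), time(18, 0)),
    Permission("admin", "customer_records", frozenset({"read", "write"}),
               time(0, 0), time(23, 59, 59)),
]


def is_allowed(role: str, resource: str, action: str, at: time) -> bool:
    """Deny by default; allow only when some rule matches all four conditions."""
    return any(
        p.role == role
        and p.resource == resource
        and action in p.actions
        and p.start <= at <= p.end
        for p in POLICY
    )
```

Because the function returns `False` unless a rule explicitly matches, an unknown role or a request outside the permitted window is refused without any extra handling.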

Strong authentication is equally important. Multi-factor authentication (MFA) is a core component of any robust access control system: it requires users to verify their identity with two or more independent factors (e.g., a password, a security token, or a biometric scan). This significantly reduces the risk of unauthorized access, because an attacker who obtains one credential still cannot log in without the others.
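As one possible illustration of a second factor, the sketch below computes a time-based one-time password in the style of RFC 6238 (HMAC-SHA1, 30-second steps) using only the Python standard library. The `verify_login` helper and its parameters are hypothetical; a production system would more likely rely on a maintained authentication library and would also handle clock drift and rate limiting.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238 style, HMAC-SHA1)."""
    now = time.time() if at is None else at
    counter = int(now // step)                      # number of elapsed time steps
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                         # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_login(password_ok: bool, secret: bytes, submitted_code: str,
                 at: Optional[float] = None) -> bool:
    """Hypothetical check: both the password and the one-time code must pass."""
    code_ok = hmac.compare_digest(totp(secret, at), submitted_code)
    return password_ok and code_ok
```

The point of the second function is that a correct password alone is not enough: the login succeeds only if the code derived from the shared secret also matches.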

Employing Encryption Techniques

Data encryption is a fundamental security practice. Transforming data into an unreadable format before storage or transmission protects it from unauthorized access, even if it is intercepted, because it cannot be read without the decryption key. Encryption is therefore only as strong as the algorithms and key management procedures behind it, so both deserve careful selection and operation.

Encrypting data both in transit and at rest is vital. Encryption in transit protects data during transmission over networks, while encryption at rest safeguards data stored on servers or devices. This dual approach ensures comprehensive protection.
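For the in-transit half, a minimal sketch using Python's standard `ssl` module is shown below. The function name is hypothetical, and the TLS 1.2 floor is an assumption chosen for illustration rather than a requirement stated in this article. Encryption at rest is not shown, since it normally depends on a vetted cryptography library or on storage-level encryption.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks."""
    # create_default_context() enables certificate verification and
    # hostname checking for client connections.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (assumed floor), without
    # lowering a stricter minimum the platform may already enforce.
    ctx.minimum_version = max(ctx.minimum_version, ssl.TLSVersion.TLSv1_2)
    return ctx
```

A context built this way can be passed to standard-library clients, for example as the `context` argument of `urllib.request.urlopen`.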

Regular Security Audits and Assessments

Regular security audits and assessments are essential for identifying vulnerabilities and weaknesses in a system. They can pinpoint potential entry points for attackers so that these can be closed before a breach occurs, and they confirm that existing security measures remain effective over time. Audits should cover not only the system itself but also the processes and procedures that govern data handling.

Developing and Implementing Security Policies

A clearly defined security policy is a cornerstone of a robust security framework. The policy should set out specific rules and guidelines for handling sensitive information, the responsibilities of personnel, and the protocols required for data protection, so that it serves as a reference point for every employee involved in data handling. A comprehensive security policy should address data classification, access control, incident response, and disaster recovery.

Maintaining an Up-to-Date Security Posture

The threat landscape is constantly evolving, requiring continuous adaptation of security measures. Regular updates to security software, systems, and procedures are critical for maintaining a strong security posture. In practice this includes regular software patching, security training for employees, and continuous monitoring of system logs. Staying informed about the latest threats and vulnerabilities makes it possible to address emerging risks early; organizations that fail to adapt remain exposed.
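Continuous log monitoring can start very simply. The sketch below counts failed logins per source address and flags addresses that reach a threshold. The log line format, the `LOGIN_FAILED` event name, and the default threshold of 3 are all assumptions made for the example; real logs will differ.

```python
from collections import Counter


def flag_repeated_failures(log_lines, threshold=3):
    """Return {ip: failure_count} for sources at or above the threshold.

    Assumed line format: "<timestamp> <event> user=<name> ip=<addr>".
    """
    failures = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) >= 4 and parts[1] == "LOGIN_FAILED":
            # Parse the key=value fields that follow the event name.
            fields = dict(p.split("=", 1) for p in parts[2:] if "=" in p)
            if "ip" in fields:
                failures[fields["ip"]] += 1
    return {ip: n for ip, n in failures.items() if n >= threshold}
```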

Developing Mechanisms for Bias Detection and Mitigation

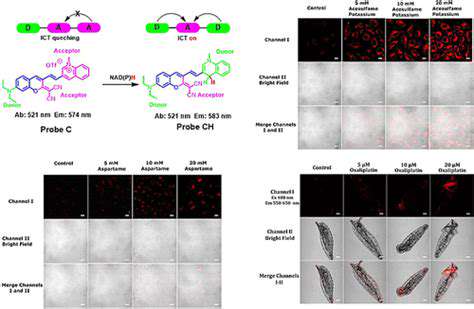

Understanding the Sources of Bias in AI Systems

AI systems, particularly those trained on vast datasets, can inherit and perpetuate biases present in those datasets. These biases can stem from various sources, including societal prejudices embedded in historical data, skewed representation of demographics, or even unintentional human error in data collection and labeling. Understanding the potential sources of bias is crucial for developing effective mitigation strategies. For example, if an image recognition system is trained primarily on pictures of light-skinned individuals, it may struggle to accurately identify individuals with darker skin tones, leading to unfair or inaccurate outcomes. This highlights the importance of diverse and representative datasets for training AI models.

Furthermore, algorithmic design itself can introduce bias. Certain algorithms may be more susceptible to amplifying existing biases in the data, or their inherent structure may inadvertently favor certain outcomes over others. Therefore, it's essential to analyze the algorithms themselves, not just the data they are trained on, to uncover potential biases. Careful consideration of the potential for bias at all stages of AI development, from data collection to model deployment, is critical to building ethical and equitable AI systems.

Developing Robust Detection Mechanisms

Effective bias detection mechanisms are essential for identifying and quantifying potential biases in AI systems. These mechanisms should encompass various techniques, including statistical analysis to identify disparities in model performance across different demographic groups, and qualitative assessments to evaluate the potential societal impact of the AI system. For instance, analyzing the accuracy of a loan application system across different racial or ethnic groups can reveal potential bias in the model's predictions. Thorough evaluation and testing of AI systems are crucial steps in the process.

A critical aspect of bias detection is the development of metrics that accurately reflect the presence and severity of bias. These metrics should be carefully designed and validated to provide meaningful insights into the system's behavior. For example, a metric focusing on the disparity in loan approval rates between different demographic groups might be a key indicator for bias detection. These metrics are not only crucial for identifying bias but also for monitoring the effectiveness of mitigation strategies.
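The loan approval example can be made concrete with two commonly used group-level measures: the gap between the highest and lowest approval rates, and the ratio of the lowest to the highest. The function names and the `(group, approved)` record layout below are assumptions for this sketch, and which threshold counts as "biased" is a policy decision the code does not make.

```python
from collections import defaultdict


def approval_rates(records):
    """records: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += 1 if ok else 0
    return {g: approved[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


def impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else float("nan")
```

Tracking both numbers before and after a mitigation step gives a simple way to check whether the intervention actually narrowed the disparity.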

Implementing Mitigation Strategies

Once biases are detected, appropriate mitigation strategies must be implemented. These fall into three broad groups: adjusting the training data to better represent diverse populations (for example, by rebalancing datasets), using algorithmic techniques to counter specific biases (such as adversarial training or fairness constraints in the optimization process), and modifying the model architecture to limit the amplification of existing biases. For example, adding more images of individuals with darker skin tones to the training data can help improve the performance of an image recognition system for this demographic.
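When collecting more data is not immediately possible, one simple form of rebalancing is to weight each training example inversely to the size of its group, so that every group contributes equally in aggregate. The sketch below shows that reweighting under the assumption that each example carries a single group label; `balancing_weights` is a hypothetical helper, and it is not a substitute for more representative data.

```python
from collections import Counter


def balancing_weights(groups):
    """Per-example weights so that each group's total weight is equal.

    groups: list of group labels, one per training example.
    Weights are scaled so they sum to the number of examples.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]
```

Many training APIs accept per-example weights of this kind, which lets the same dataset be reused without duplicating or discarding records.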

Transparency and explainability are also crucial components of effective mitigation strategies. Understanding how an AI system arrives at a particular decision can help identify potential biases and allow for more targeted interventions. Techniques for explainable AI (XAI) can be employed to make the decision-making process more transparent and accountable, allowing for greater scrutiny and mitigation of biases.
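One widely used model-agnostic explanation technique is permutation importance: shuffle a single input feature and measure how much accuracy drops. A large drop for a sensitive attribute (or a proxy for one) is a signal worth investigating. The sketch below is a minimal pure-Python version; the `predict` callable, the tuple-based row layout, and the fixed seed are assumptions for illustration.

```python
import random


def permutation_importance(predict, rows, labels, seed=0):
    """Accuracy drop per feature when that feature's column is shuffled.

    predict: callable mapping one row (a tuple of features) to a label.
    rows:    list of equal-length tuples.
    labels:  list of true labels, aligned with rows.
    """
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    drops = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(shuffled))
    return drops
```

A feature the model ignores will show a drop of zero, while features the model leans on will typically show a positive drop.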

Ensuring Ethical Considerations in AI Governance

The development of effective mechanisms for bias detection and mitigation requires a holistic approach that encompasses ethical considerations in AI governance. This involves establishing clear guidelines and regulations for the development, deployment, and use of AI systems, ensuring that fairness, accountability, and transparency are prioritized throughout the lifecycle of the AI system. For example, establishing regulatory frameworks for data collection and usage can help mitigate potential biases in the data used to train AI systems. These frameworks should also consider the potential impacts of the AI systems on various groups within society, ensuring equitable outcomes.

Furthermore, fostering ongoing dialogue and collaboration between stakeholders, including policymakers, researchers, industry professionals, and the public, is paramount. This collaborative approach is vital for establishing best practices for bias detection and mitigation and ensuring that AI systems are developed and deployed responsibly and ethically. This necessitates a continuous process of evaluation, improvement, and adaptation to the evolving landscape of AI technology.

Promoting Collaboration and Public Engagement

Fostering Transparency and Trust

Transparency in AI systems is crucial for building public trust and ensuring ethical data handling. Clear explanations of how AI models work, the data they use, and the potential biases they may exhibit are essential. This transparency allows stakeholders to understand the decision-making processes and hold developers accountable for the ethical implications of their work. Open communication and readily available documentation are key components of this approach, promoting a sense of security and allowing for public scrutiny of AI systems.

Developing robust mechanisms for auditing and evaluating AI systems is also vital. This includes establishing clear guidelines and standards for data collection, processing, and use. Independent audits can help identify potential biases, errors, or vulnerabilities, ensuring that AI systems operate ethically and avoid unintended consequences.

Establishing Clear Ethical Guidelines

Creating comprehensive ethical guidelines for AI development and deployment is paramount. These guidelines should address issues like data privacy, bias mitigation, accountability, and potential societal impacts. The guidelines must be regularly reviewed and updated to reflect evolving societal values and technological advancements. This continuous review process ensures that the ethical considerations remain relevant and effective in addressing emerging challenges.

Organizations developing and deploying AI systems should proactively incorporate ethical considerations into their design and implementation processes. This includes careful consideration of potential biases in training data, methods for mitigating those biases, and procedures for addressing any unintended consequences.

Encouraging Public Participation in AI Governance

Active public participation is critical for shaping ethical AI governance. This includes creating platforms and mechanisms for public feedback, concerns, and suggestions. Open forums, online surveys, and public consultations can facilitate a dialogue between stakeholders and developers, fostering a shared understanding of the ethical implications of AI.

Engaging diverse communities and perspectives is crucial. Representation from a wide range of backgrounds and experiences helps ensure that ethical guidelines and policies address the needs and concerns of all segments of society. This inclusive approach promotes fairness and helps prevent AI systems from inadvertently perpetuating existing societal inequalities.

Promoting Interdisciplinary Collaboration

Collaboration between experts in AI, ethics, law, and social sciences is essential for developing robust and effective AI governance frameworks. Bringing together diverse perspectives allows for a more comprehensive understanding of the ethical, societal, and technical implications of AI. This interdisciplinary collaboration is crucial for developing frameworks that are both technically sound and socially responsible.

Cross-disciplinary teams can help identify potential risks and unintended consequences of AI systems and develop strategies to mitigate them. This collaborative approach makes it more likely that AI systems will be not only effective but also ethical and beneficial to society as a whole.

Ensuring Accountability and Responsibility

Establishing clear lines of accountability for AI developers, deployers, and users is vital for ethical data handling. This includes defining roles and responsibilities and implementing appropriate sanctions for violations of ethical guidelines. It also requires robust mechanisms for redress and recourse, so that individuals or groups who are negatively impacted by AI systems have a clear path to a remedy.

Facilitating Education and Awareness

Raising public awareness about the ethical implications of AI is crucial for fostering responsible development and deployment. This includes educational initiatives, public lectures, and accessible resources that explain how AI works, its potential benefits and risks, and the importance of ethical considerations. Education and awareness programs should be widely available and easily accessible to the public, helping individuals understand the role of AI in their daily lives and how to engage in meaningful conversations about its future.

Promoting critical thinking about AI among different segments of the population can empower individuals to make informed decisions about its use and to shape its development responsibly. Broad awareness of this kind fosters a more informed and engaged public that can take part in shaping ethical AI governance.