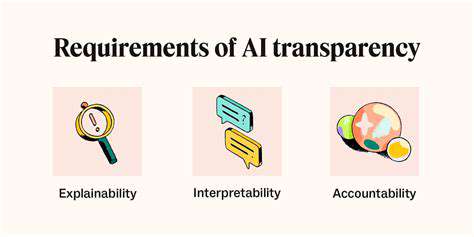

Transparency and explainability are crucial elements of modern data-driven decision-making. Data visualizations, particularly those that depict the behavior of complex algorithms, need to be clear and understandable to support trust and accountability. Clear visualizations let stakeholders follow the reasoning behind predictions and outcomes, which builds confidence in the system and helps them make informed decisions.

Explainable AI (XAI) techniques are increasingly employed to bridge the gap between complex models and human understanding. These methods aim to provide insights into the decision-making process of machine learning models, enabling users to understand how a particular prediction was reached. This level of transparency is particularly important in critical applications such as healthcare and finance where decisions can have significant consequences.

Without transparency, it is difficult to identify biases or errors in a model. Undetected problems can lead to unfair or inaccurate outcomes, reducing the system's value and eroding the trust placed in it.

A variety of methods are used to increase transparency and explainability, including feature importance analysis and inherently interpretable models such as decision trees and rule-based systems. These approaches provide different levels of detail and insight, allowing users to tailor the level of explanation to their specific needs. Understanding the reasons behind a prediction is essential for identifying potential problems and making adjustments to improve the model's performance.
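As one illustration of feature importance analysis, the following is a minimal sketch of permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. The toy model, the data, and all function names here are assumptions made for illustration, not part of any particular library.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only column j, leaving every other feature untouched.
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [row[:j] + (value,) + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical toy model: predicts 1 when feature 0 exceeds 50 and ignores feature 1.
def toy_model(row):
    return 1 if row[0] > 50 else 0

rows = [(30, 1), (80, 0), (45, 1), (95, 0), (60, 1), (20, 0), (70, 1), (40, 0)]
labels = [toy_model(r) for r in rows]

scores = permutation_importance(toy_model, rows, labels)
print(scores)  # feature 1 scores 0.0 because the toy model never reads it
```

A feature whose shuffling barely changes accuracy contributes little to the model's predictions, while a large drop suggests the model relies on it heavily.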

Effective communication of complex information is essential for fostering trust and understanding. Visualizations play a crucial role in presenting data insights in a clear and accessible manner. Well-designed visualizations can simplify intricate relationships and reveal patterns that might be missed with textual descriptions alone. Clear and concise communication helps stakeholders understand the implications of the findings and act accordingly.

The importance of explainability extends beyond technical considerations to ethical and societal ones. Transparency in algorithms can help identify and mitigate potential biases, supporting fairness and equity in decision-making processes. This is especially critical in sensitive domains like lending, hiring, and criminal justice.

Robust methods for evaluating the effectiveness of transparency and explainability are essential. Metrics should be developed to assess the clarity, accuracy, and comprehensiveness of explanations. These assessments should consider the specific context and intended audience, so that the explanations are appropriate and meaningful. Repeating this cycle of evaluation and improvement is how explanation techniques are refined over time.

Privacy and Data Security: Protecting Sensitive Information

Protecting User Data in AI Systems

A crucial aspect of ethical AI development is the robust protection of user data. AI systems often rely on vast quantities of personal information to function effectively, from identifying patterns in medical records to recommending products tailored to individual preferences. This necessitates a commitment to data privacy, ensuring that sensitive information is handled responsibly and securely throughout the entire lifecycle of the AI system, from collection to analysis and storage. This includes implementing strong encryption protocols, access controls, and data anonymization techniques to prevent unauthorized access and misuse.
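The sketch below shows one related technique, pseudonymization with a keyed hash (HMAC-SHA256), using only Python's standard library. Pseudonymization is weaker than full anonymization, because anyone holding the key can recompute the mapping. The record fields and the key are hypothetical and exist only for illustration.

```python
import hashlib
import hmac

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC), hex-encoded."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical key for illustration only; a real key would come from a secrets store.
SECRET_KEY = b"example-key-do-not-use"

# Hypothetical record; only the direct identifier is replaced.
record = {"patient_id": "P-10442", "age": 57, "diagnosis": "hypertension"}
safe_record = {**record, "patient_id": pseudonymize(record["patient_id"], SECRET_KEY)}

print(safe_record)
```

Because the same input and key always yield the same digest, records belonging to one person can still be linked for analysis without exposing the original identifier. The remaining fields may still allow re-identification in combination, so this step complements encryption and access controls rather than replacing them.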

Organizations developing and deploying AI systems must prioritize transparency in their data handling practices. Users should have clear and accessible information about how their data is being collected, used, and protected. This includes outlining the specific purposes for data collection, the categories of data collected, and the potential risks associated with data breaches. Furthermore, individuals should have the right to access, correct, and delete their personal data, giving them control over their information within the context of the AI system.

Ensuring Data Security Throughout the AI Lifecycle

Data security is not limited to the initial collection stage; it must be maintained throughout the entire lifecycle of the AI system. This encompasses secure storage methods, robust access controls, and regular security audits to identify and mitigate potential vulnerabilities. Protecting data from unauthorized access, breaches, and malicious attacks is paramount for maintaining user trust and complying with relevant regulations, such as GDPR and CCPA. Implementing multi-factor authentication and employing encryption techniques for data transmission and storage are crucial steps in achieving comprehensive data security.

Regular security assessments and vulnerability scans are essential components of a comprehensive data security strategy. These assessments help identify potential weaknesses in the system and allow for proactive remediation before a breach occurs. Proactive security measures, combined with a strong incident response plan, are key to ensuring swift and effective action in case of a data security incident. This includes having clear procedures for detecting, containing, and recovering from data breaches, minimizing the impact on users and maintaining the integrity of the system.

Addressing Bias and Fairness in Data-Driven AI

A significant ethical consideration is the potential for bias in data-driven AI systems. If the training data reflects existing societal biases, the AI system may perpetuate and even amplify these biases in its outputs, leading to unfair or discriminatory outcomes. Developers must therefore critically evaluate the data used to train AI models, checking that it is representative of the affected populations and as free from bias as practical. This includes actively identifying and addressing potential biases in the data collection process and implementing strategies to mitigate their impact on the AI system's output.

Techniques for detecting and mitigating bias in data are crucial. They involve analyzing the data for patterns of discrimination or disparity and applying methods that can identify and reduce these biases. Transparency in the model's decision-making process is also important: when users and stakeholders can see how the AI system arrives at its conclusions, they are better placed to identify and address potential biases, which supports fairer, more equitable AI systems. Continuous monitoring and evaluation are essential for addressing any biases that emerge in the system's outputs over time.
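One simple way to look for disparities is to compare the rate of positive predictions across groups. The sketch below computes per-group selection rates and their ratio, often called the disparate impact ratio. The group labels and predictions are made-up illustration data, and the function names are assumptions rather than a standard API.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Made-up data: group membership and the model's binary decisions.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 indicates that one group receives positive outcomes far less often than another. It is only a signal that warrants investigation, not proof of unfair treatment, because it ignores legitimate differences between groups.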

Responsible AI Deployment and Monitoring: Beyond the Initial Launch

Defining Responsible AI Deployment

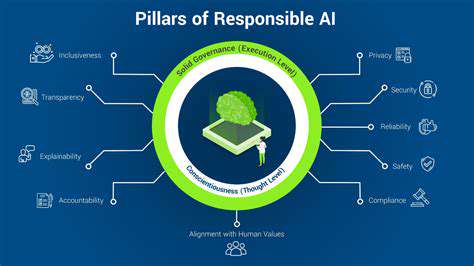

Responsible AI deployment goes beyond simply building and releasing AI systems. It encompasses a multifaceted approach that prioritizes ethical considerations, fairness, transparency, and accountability throughout the entire AI lifecycle, from design and development to implementation and ongoing monitoring. This proactive approach is crucial for mitigating potential harms and ensuring that AI systems benefit society as a whole. It requires a deep understanding of the potential biases inherent in data and algorithms, and a commitment to addressing them effectively.

A key aspect of responsible AI deployment is establishing clear guidelines and standards for ethical AI development. These guidelines should address issues like data privacy, algorithmic transparency, and potential societal impacts. Adhering to these principles is essential for building trust in AI systems and ensuring their responsible use.

Monitoring AI Systems for Bias

Ongoing monitoring of AI systems is critical for detecting and mitigating biases that may emerge over time. This includes analyzing the system's outputs and comparing them to ground truth data to identify potential discrepancies and patterns of unfair treatment. Monitoring tools and methodologies are essential for ensuring the fairness and equity of AI systems.

Regular audits and evaluations of the AI system's performance are needed to assess the impact of the system on different user groups and identify potential areas for improvement. This iterative process of monitoring and adjusting is key to maintaining the fairness and effectiveness of the AI system.
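The monitoring described above can be sketched as a periodic check that compares predictions with ground truth separately for each user group. The data, the function names, and the `max_gap` threshold of 0.1 are assumptions chosen for illustration. An appropriate threshold depends on the application.

```python
from collections import defaultdict

def accuracy_by_group(groups, y_true, y_pred):
    """Accuracy of predictions against ground truth, computed per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

def groups_below_best(acc, max_gap=0.1):
    """Groups whose accuracy trails the best group's by more than max_gap."""
    best = max(acc.values())
    return sorted(g for g, a in acc.items() if best - a > max_gap)

# Made-up batch of logged decisions with their later-observed true outcomes.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

acc = accuracy_by_group(groups, y_true, y_pred)
flagged = groups_below_best(acc)
print(acc, flagged)
```

Running a check like this on each new batch of labeled outcomes turns the audit into a repeatable step, and any flagged group becomes a starting point for a closer review.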

Ensuring Algorithmic Transparency

Transparency in AI algorithms is crucial for understanding how decisions are made and for identifying potential biases. Clear documentation of the data used, the algorithms employed, and the rationale behind specific decisions is essential. This facilitates scrutiny and accountability, allowing stakeholders to understand the reasoning behind AI-driven outcomes.

Explaining the decision-making process of AI systems is essential for building trust and encouraging responsible use. By providing clear and understandable explanations, we can foster greater confidence and acceptance of AI in various applications.
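As a rough sketch of what such documentation might look like in practice, the record below captures the model, its data, and the rationale for a single decision in a form that can be logged and audited. The `DecisionRecord` class and all field values are hypothetical and do not follow any established schema.

```python
import json
from dataclasses import asdict, dataclass

@dataclass
class DecisionRecord:
    """Hypothetical audit-log entry describing one automated decision."""
    model_name: str
    model_version: str
    training_data: str
    inputs: dict
    outcome: str
    rationale: str

# Illustrative values only.
record = DecisionRecord(
    model_name="credit-scoring",
    model_version="1.3.0",
    training_data="loan applications 2019-2023 (illustrative)",
    inputs={"income": 42000, "existing_debt": 9000},
    outcome="declined",
    rationale="debt-to-income ratio above the configured limit",
)

# Serialize with sorted keys so log lines are stable and easy to compare.
log_line = json.dumps(asdict(record), sort_keys=True)
print(log_line)
```

Keeping the rationale next to the inputs and model version lets a reviewer reconstruct why a given outcome was produced, even after the model has been updated.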

Addressing Potential Societal Impacts

AI systems can have significant societal impacts, and responsible deployment requires careful consideration of these potential ramifications. This includes anticipating potential job displacement, the impact on marginalized communities, and the broader implications for societal values and norms. Proactive engagement with stakeholders and communities affected by AI systems is essential to ensure that these systems are deployed in a way that benefits all members of society.

Thorough impact assessments and ongoing dialogue with affected communities are crucial. This proactive engagement helps identify potential problems and formulate mitigation strategies.

Promoting Responsible AI Development Practices

Promoting ethical AI development practices across organizations and industries is a crucial step toward responsible AI deployment. It involves fostering a culture of ethical awareness among AI developers, implementing robust guidelines and standards for AI system development, and incorporating ethical considerations into the entire development lifecycle, from the initial design phase to the final deployment stage.

Training and education programs for AI developers and practitioners are essential to promote responsible AI development. Effective training programs can equip professionals with the necessary skills and knowledge to develop, deploy, and monitor AI systems ethically and responsibly.