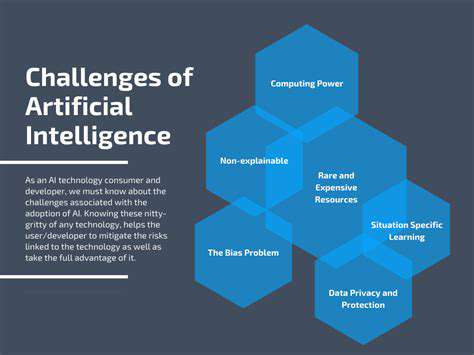

Data Privacy and Security

Protecting patient data is paramount in AI healthcare applications. Robust security measures and strict adherence to privacy regulations like HIPAA are crucial. AI systems must be designed with data encryption, access controls, and anonymization techniques to prevent unauthorized access, breaches, and misuse of sensitive patient information. This requires meticulous attention to detail throughout the entire AI development lifecycle, from data collection and storage to algorithm implementation and deployment.
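As a rough illustration of the anonymization step mentioned above, the sketch below pseudonymizes a single record: it drops direct identifiers and replaces the patient ID with a keyed hash. The field names (`patient_id`, `name`, `ssn`, and so on) and the `pseudonymize` helper are hypothetical, and this alone is not sufficient for HIPAA de-identification; it only shows the general shape of the technique.

```python
import hashlib
import hmac

# Hypothetical field names; a real schema will differ.
DIRECT_IDENTIFIERS = {"name", "ssn", "phone", "address"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of `record` without direct identifiers.

    The patient ID is replaced by an HMAC-SHA256 token, so records for the
    same patient can still be linked without exposing the original ID.
    """
    token = hmac.new(
        secret_key,
        str(record["patient_id"]).encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["patient_token"] = token
    return cleaned


# Illustrative record with made-up values.
example_record = {
    "patient_id": 12345,
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "diagnosis_code": "E11.9",
}
example_key = b"replace-with-a-securely-stored-key"
pseudonymized = pseudonymize(example_record, example_key)
```

The key must be stored separately from the data and under access control, since anyone who holds it can regenerate the tokens from known patient IDs.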

Furthermore, because AI healthcare applications increasingly rely on interconnected systems, data security must cover not only individual patient records but also the integrity of the healthcare infrastructure as a whole. This includes defending against cyberattacks and keeping pace with evolving data privacy standards.

Bias and Fairness in Algorithms

AI algorithms are trained on data, and if that data reflects existing societal biases, the AI system will likely perpetuate and even amplify those biases in its diagnoses, treatment recommendations, and resource allocation. This can lead to disparities in healthcare access and outcomes for certain demographic groups. Careful consideration must be given to the data used for training and the potential for bias within the algorithms.

Rigorous testing and validation procedures, including diverse datasets and independent audits, are essential to identify and mitigate these biases. Continuous monitoring and evaluation of AI systems in real-world healthcare settings will also be critical to ensuring equitable outcomes for all patients.
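One simple form such an audit could take is comparing a model's true positive rate across demographic groups. The sketch below is a minimal example on toy data; the group labels, the `(group, y_true, y_pred)` record layout, and both function names are assumptions made for illustration, and a real audit would use several metrics and far larger samples.

```python
from collections import defaultdict


def true_positive_rate_by_group(records):
    """Compute the true positive rate per group.

    `records` is an iterable of (group, y_true, y_pred) tuples with
    binary labels, where 1 means the condition is present or predicted.
    """
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    # Only groups with at least one positive case appear in the result.
    return {group: true_positives[group] / positives[group] for group in positives}


def max_tpr_gap(rates):
    """Largest difference in true positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Toy data: (group, actual label, predicted label).
sample = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]
rates = true_positive_rate_by_group(sample)
gap = max_tpr_gap(rates)
print(rates, gap)
```

In this toy sample the model detects two of three true cases in `group_a` but only one of three in `group_b`, which is the kind of gap an audit would flag for closer review.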

Explainability and Transparency

Understanding how AI systems arrive at their conclusions is crucial for trust and accountability in healthcare. Black-box algorithms, whose decision-making process is opaque, make it difficult for clinicians to interpret and trust the results. Developing explainable AI (XAI) techniques that provide insight into the reasoning behind AI-driven decisions will be essential for building confidence and fostering adoption of these technologies.

Clinicians need to understand the rationale behind AI recommendations to integrate them effectively into their practice. This transparency will allow for better collaboration between humans and AI, leading to more informed decisions and improved patient care. Explainability also facilitates the identification and correction of errors within the AI system.
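For a simple linear risk model, one way to surface the rationale is to report how much each input contributed to the score. The sketch below assumes a hypothetical logistic model whose weights, bias, and feature names are invented for illustration; non-linear models need other attribution methods, and this decomposition does not carry over to them directly.

```python
import math


def explain_linear_score(weights, bias, features):
    """Return the predicted probability and per-feature contributions.

    Each contribution is weight * feature value, so the contributions
    plus the bias sum to the model's logit. Contributions are sorted by
    absolute size, largest first.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    logit = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


# Hypothetical model parameters and patient features (not clinically valid).
model_weights = {"age_decades": 0.3, "systolic_bp_z": 0.8, "on_statin": -0.5}
model_bias = -3.0
patient = {"age_decades": 6.0, "systolic_bp_z": 1.5, "on_statin": 1.0}

probability, ranked = explain_linear_score(model_weights, model_bias, patient)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"predicted risk: {probability:.2f}")
```

A ranked list like this lets a clinician see which inputs pushed the score up or down, and makes an implausible contribution easier to spot.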

Regulatory Frameworks and Governance

Developing comprehensive regulatory frameworks for AI in healthcare is essential to ensure safe and ethical implementation. These frameworks should address issues such as data privacy, algorithmic bias, liability, and accountability. Clear guidelines and standards are needed to ensure that AI systems are developed and deployed responsibly and ethically, with patient safety and well-being at the forefront.

Collaboration between policymakers, healthcare professionals, AI developers, and ethicists is crucial in shaping these regulatory frameworks. Because AI technology advances quickly, the frameworks also need ongoing dialogue and regular revision to remain relevant, effective, and ethically sound.

Job Displacement and Workforce Adaptation

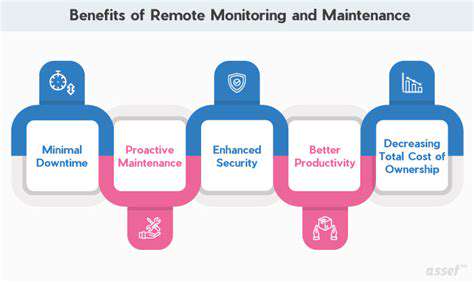

The integration of AI into healthcare may lead to changes in the roles and responsibilities of healthcare professionals. While AI can automate certain tasks, freeing up human resources for more complex and patient-centric activities, it also presents potential challenges for the healthcare workforce. Strategies for retraining and upskilling healthcare professionals to work alongside AI are needed to ensure a smooth transition and maximize the benefits of AI adoption.

Potential for Malicious Use

The potential for malicious use of AI in healthcare, such as sophisticated medical fraud or the deliberate generation of falsified or harmful diagnoses, needs careful consideration. Security measures and safeguards must be implemented to prevent such misuse and protect the integrity of the healthcare system. Robust cybersecurity protocols and ethical guidelines are essential to mitigate these risks and maintain public trust in AI applications.

Continuous monitoring and evaluation of AI systems, along with the development of robust detection mechanisms for malicious activities, are crucial for ensuring the safe and responsible use of AI in healthcare. This includes establishing mechanisms for reporting and investigating potential misuse of AI systems.
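A detection mechanism can start from something as basic as comparing recent activity against a historical baseline. The sketch below flags values that sit far from the baseline mean, measured in standard deviations; the daily request counts, the `flag_anomalies` name, and the threshold of 3.0 are all assumptions for illustration, and production monitoring would need more robust methods.

```python
import statistics


def flag_anomalies(baseline, recent, threshold=3.0):
    """Return (index, value) pairs from `recent` that deviate from the
    baseline mean by more than `threshold` sample standard deviations."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A constant baseline: any different value counts as anomalous.
        return [(i, value) for i, value in enumerate(recent) if value != mean]
    return [
        (i, value)
        for i, value in enumerate(recent)
        if abs(value - mean) / stdev > threshold
    ]


# Made-up daily counts of requests to a hypothetical AI diagnostic service.
baseline_counts = [100, 98, 103, 101, 99, 102, 97]
recent_counts = [101, 99, 140, 102]

flagged = flag_anomalies(baseline_counts, recent_counts)
print(flagged)
```

Flagged entries would then feed the reporting and investigation process rather than trigger automatic action, since an unusual count can have a benign explanation.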