Popular Pre-trained Models and Architectures

Key Pre-trained Models

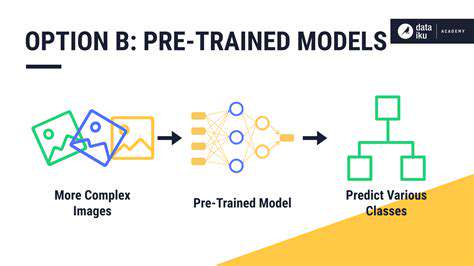

Pre-trained models have reshaped many fields, particularly natural language processing (NLP). Trained on massive datasets, they provide a strong foundation for downstream tasks: instead of collecting large amounts of data and training from scratch, we can reuse what the model has already learned. During pre-training, the model learns general linguistic patterns, which can then be fine-tuned for specific tasks. This efficiency has made pre-trained models a standard tool in modern AI development.

Examples include BERT, GPT, and RoBERTa, each with its own strengths and weaknesses. BERT and RoBERTa are encoder-only models commonly used for understanding tasks such as text classification and question answering, while the GPT family is decoder-only and geared toward text generation. Understanding these differences is crucial for choosing the appropriate model for a specific NLP task, whether that is classification, machine translation, or question answering.

Model Architecture Insights

Different pre-trained models employ various architectural designs. Understanding these architectures is essential for comprehending how these models function and for selecting the right one for a particular application. For example, transformers, a common architecture, leverage attention mechanisms to process input sequences. This allows the model to focus on relevant parts of the input text, leading to more accurate and meaningful interpretations.
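To make the attention idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not how any particular model implements it: the toy two-dimensional "token" vectors are invented, the same vectors serve as queries, keys, and values, and real transformers add learned projections and multiple heads.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over small lists of vectors.

    Returns the attended outputs and the attention weights per query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical toy embeddings for three tokens (made up for illustration).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and shows how strongly one token attends to the others. In this toy input the first token places more weight on itself and the third token than on the second, because their dot products are larger.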

The specific design choices often dictate the model's strengths and limitations. Factors like the number of layers, the type of embeddings used, and the attention mechanism employed all contribute to the model's performance. Analyzing these architectural details can significantly aid in model selection and optimization.

Fine-tuning Techniques

Fine-tuning is a crucial step in leveraging pre-trained models. It adapts the pre-trained model to a specific downstream task, often with a relatively small dataset. Training starts from the pre-trained weights rather than from random initialization and adjusts them to fit the characteristics of the new data, so the model learns task-specific patterns while retaining the general knowledge gained during pre-training.
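The core mechanic, starting from existing weights and continuing training on a small task dataset, can be sketched with a toy logistic-regression model. Everything here is a hypothetical stand-in: the "pre-trained" weights, the four-example dataset, and the learning rate are invented for illustration, and real fine-tuning operates on far larger networks using a deep learning framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def log_loss(weights, bias, data):
    """Average binary cross-entropy over (features, label) pairs."""
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)


def fine_tune(weights, bias, data, lr=0.1, epochs=200):
    """Continue gradient descent from the given (pre-trained) weights."""
    weights = list(weights)  # copy so the original weights stay untouched
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            err = predict(weights, bias, x) - y
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical "pre-trained" parameters and a tiny task-specific dataset.
pretrained_weights, pretrained_bias = [0.5, -0.5], 0.0
task_data = [([1.0, 0.2], 1), ([0.9, 0.4], 1), ([0.2, 1.0], 0), ([0.1, 0.8], 0)]

loss_before = log_loss(pretrained_weights, pretrained_bias, task_data)
tuned_weights, tuned_bias = fine_tune(pretrained_weights, pretrained_bias, task_data)
loss_after = log_loss(tuned_weights, tuned_bias, task_data)
```

Comparing `loss_before` with `loss_after` shows the effect of the additional training on the task data. A small learning rate is a common choice in fine-tuning because it keeps the weights close to their pre-trained values.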

Applications of Pre-trained Models

Pre-trained models have found broad applications across various domains. Their use in natural language understanding tasks, such as sentiment analysis and text summarization, is widespread. These models are frequently integrated into chatbots, virtual assistants, and other conversational AI systems. This capability has profoundly impacted the development of user-friendly and intelligent interfaces.

In addition to NLP, pre-trained models are increasingly used in computer vision and other fields. Their versatility and efficiency make them powerful tools for tackling a wide range of complex problems.

Evaluation Metrics for Arc

Assessing the performance of pre-trained models, especially in the context of Arc, requires careful consideration of appropriate evaluation metrics. Choosing the right metrics is crucial for a fair and accurate comparison of different models, and the metrics should align with the specific goals of the task. For instance, accuracy might be suitable for classification tasks with balanced classes, while precision and recall are more appropriate for tasks involving the detection of rare events.

The selection of metrics should be guided by the nature of the data and the intended use of the model. Understanding these factors is essential for ensuring that the evaluation process reflects the true capabilities and limitations of the pre-trained model.
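A small worked example shows why accuracy alone can mislead on rare events. The labels below are invented: 5 positives out of 100 examples, scored against a hypothetical baseline that always predicts the negative class. Returning 0.0 when a ratio is undefined is a convention chosen for this sketch.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels encoded as 0/1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / len(y_true)


def precision(y_true, y_pred):
    tp, fp, _, _ = confusion_counts(y_true, y_pred)
    # Convention for this sketch: 0.0 when nothing was predicted positive.
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(y_true, y_pred):
    tp, _, fn, _ = confusion_counts(y_true, y_pred)
    # Convention for this sketch: 0.0 when there are no actual positives.
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical imbalanced dataset: 5 rare positives among 100 examples.
y_true = [1] * 5 + [0] * 95
# A baseline that never predicts the rare class.
y_pred = [0] * 100

acc = accuracy(y_true, y_pred)   # 95 of 100 predictions are correct
rec = recall(y_true, y_pred)     # none of the 5 positives are found
prec = precision(y_true, y_pred)
```

The baseline reaches 95% accuracy while detecting none of the rare events, which is exactly the situation where recall and precision give a more honest picture.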

Case Studies and Applications in Real-World NLP

Analyzing Customer Churn with R

Understanding customer churn is crucial for businesses to retain valuable clients and mitigate financial losses. This case study explores the use of R to analyze customer data and identify factors contributing to churn. By employing statistical modeling techniques, such as logistic regression and decision trees, we can pinpoint the key characteristics of customers who are at risk of leaving. This analysis allows businesses to develop targeted retention strategies and improve customer lifetime value.

R packages such as `caret` and `e1071` provide tools to train and evaluate predictive models that forecast churn. The insights gained from these models can inform targeted marketing campaigns and personalized customer service interactions, supporting a more effective customer retention program.

Predicting Stock Prices with Time Series Analysis in R

Financial institutions and investors often use R to analyze historical stock price data and predict future trends. This case study demonstrates how time series analysis techniques in R, such as ARIMA modeling and exponential smoothing, can be applied to forecast stock prices. The analysis involves cleaning and preparing the data, which is a critical step in ensuring the accuracy of the models.

By identifying patterns and trends in the data, we can develop models that provide insights into potential future price movements. These models can also be used to inform investment strategies and risk management decisions, potentially leading to more profitable investment opportunities.
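As an illustration of one technique named above, the following sketch implements simple exponential smoothing. The case study is framed around R; this example uses Python with only the standard library purely to show the arithmetic, and the price series and the smoothing factor `alpha` are made-up values, not real market data.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    level_t = alpha * value_t + (1 - alpha) * level_{t-1},
    with the first observation used as the initial level.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    smoothed = [level]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


# Hypothetical closing prices (invented for illustration).
prices = [100.0, 102.0, 101.0, 105.0]
smoothed = simple_exponential_smoothing(prices, alpha=0.5)

# With simple exponential smoothing, the one-step-ahead forecast
# is the last smoothed level.
forecast = smoothed[-1]  # 103.0 for this series
```

A larger `alpha` makes the forecast react faster to recent prices, while a smaller one smooths out short-term noise. The method produces a flat forecast and does not model trend or seasonality.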

Sentiment Analysis of Social Media Data with R

Social media platforms generate vast amounts of data, and sentiment analysis can provide valuable insights into public opinion and brand perception. This case study explores how R can be used to analyze social media data and quantify the sentiment expressed in user-generated content. Packages such as `tm`, for text preprocessing, and `syuzhet`, for lexicon-based sentiment scoring, are commonly used to process the text and extract these insights.

Sentiment analysis using R can be applied to monitor brand reputation, track customer feedback, and identify potential issues before they escalate. These insights can guide businesses in making informed decisions related to product development, marketing strategies, and customer service.

Genome-Wide Association Studies (GWAS) with R

Biomedical research often uses R for analyzing complex biological data, such as genomic data. This case study focuses on the application of R in genome-wide association studies (GWAS). The analysis involves identifying genetic variations associated with specific traits or diseases. This process requires advanced statistical methods and careful data handling, which R excels at.

R's statistical capabilities are invaluable in extracting meaningful patterns from large datasets, leading to a better understanding of genetic mechanisms. This knowledge can be instrumental in developing new treatments and therapies for various diseases.

Analyzing Customer Segmentation using R

Understanding customer segments is crucial for targeted marketing and personalized service. This case study demonstrates how R can be used to segment customers based on their characteristics and behaviors. Techniques such as k-means clustering and hierarchical clustering are employed to group customers with similar profiles.

This analysis allows businesses to tailor their marketing strategies and product offerings to specific customer groups, maximizing their effectiveness. By identifying distinct customer segments, businesses can optimize their resources and improve customer satisfaction.
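The k-means idea can be sketched in a few lines. The case study is framed around R; this example uses plain Python only to show the algorithm's two alternating steps. The customer records (annual spend, number of visits) are invented, and taking the first `k` points as initial centroids is a simplification chosen here so that the result is deterministic.

```python
def kmeans(points, k, iterations=10):
    """Basic k-means: returns (centroids, cluster index per point)."""
    # Simplification for this sketch: first k points are the initial centroids.
    centroids = [list(p) for p in points[:k]]
    assignments = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = []
        for p in points:
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            assignments.append(dists.index(min(dists)))
        # Update step: move each centroid to the mean of its members.
        for i in range(k):
            members = [p for p, a in zip(points, assignments) if a == i]
            if members:
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignments


# Hypothetical customers as (annual spend, visits per year).
customers = [(100, 2), (900, 20), (120, 3), (950, 22), (110, 1), (880, 18)]
centroids, segments = kmeans(customers, k=2)
```

Here the low-spend and high-spend customers end up in separate segments. In practice, features on different scales are usually standardized first, since otherwise the larger-valued feature (spend, in this toy data) dominates the distance calculation.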

Predicting Website Traffic with R

Website traffic prediction is vital for businesses to effectively manage server resources, plan marketing campaigns, and anticipate demand. This case study examines the use of R to analyze website traffic data and forecast future traffic trends. R's statistical modeling capabilities and time series analysis tools can be applied to predict website traffic accurately.

Accurate predictions can help businesses optimize their website infrastructure and allocate resources effectively. By understanding website traffic patterns, businesses can proactively address potential bottlenecks and improve the overall user experience.

Financial Risk Assessment with R

Assessing financial risk is a critical aspect of managing investments and ensuring financial stability. This case study showcases how R can be used to analyze financial data and assess various risk factors. Techniques such as Monte Carlo simulations and regression analysis allow for a comprehensive risk assessment.

Accurate risk assessment using R's capabilities can help businesses make informed decisions, mitigate potential financial losses, and maintain financial stability. The results of these analyses can be used to develop robust risk management strategies and ensure the long-term success of financial institutions.
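A Monte Carlo risk estimate can be sketched as follows. The case study is framed around R; this example uses Python's standard library purely for illustration. The assumptions are loud ones: daily returns are drawn from a normal distribution, and the mean, volatility, and seed are invented values rather than estimates from real data.

```python
import random


def simulate_losses(mean_return, volatility, n_trials, seed=0):
    """Simulate one-day losses (negative returns), sorted ascending.

    Assumes normally distributed returns, which is a simplification.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    return sorted(-rng.gauss(mean_return, volatility) for _ in range(n_trials))


def value_at_risk(sorted_losses, confidence=0.95):
    """Empirical quantile of the loss distribution at the given confidence."""
    index = min(int(confidence * len(sorted_losses)), len(sorted_losses) - 1)
    return sorted_losses[index]


# Hypothetical parameters: 0.05% mean daily return, 2% daily volatility.
losses = simulate_losses(mean_return=0.0005, volatility=0.02, n_trials=10_000, seed=42)
var_95 = value_at_risk(losses, confidence=0.95)
```

`var_95` is the simulated loss, as a fraction of portfolio value, that is exceeded in roughly 5% of trials. Real return distributions often have heavier tails than the normal distribution, so an estimate like this should be treated as a rough lower bound on tail risk.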