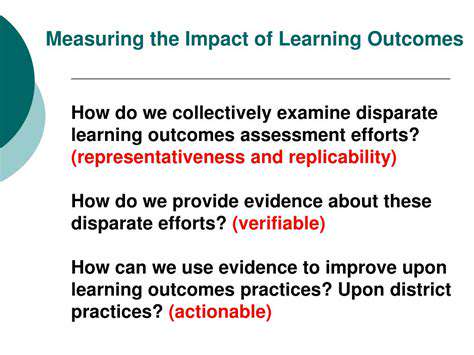

Data Preprocessing Techniques for Bias Reduction

Data Cleaning and Transformation

Data cleaning is a crucial step in bias reduction: it identifies and corrects inconsistencies, errors, and inaccuracies in the dataset by handling missing values, removing duplicates, and fixing typographical errors. These steps directly affect both the accuracy and the fairness of the resulting model. Proper cleaning removes biases that stem from inconsistent data, such as demographic information recorded in different ways or errors introduced by measurement tools. How gaps are handled also matters: if missing values are concentrated in one group, dropping incomplete rows shrinks that group's representation. Ensuring data integrity establishes a foundation for a fairer and more accurate analysis.
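The cleaning steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a complete pipeline: the field names (`id`, `gender`, `income`), the `GENDER_LABELS` mapping, and the `clean_records` helper are all assumptions made up for the example.

```python
from collections import Counter

# Hypothetical mapping from inconsistent spellings to one canonical label.
GENDER_LABELS = {"f": "female", "female": "female", "m": "male", "male": "male"}


def clean_records(records):
    """Return (cleaned_rows, missing_counts) for a list of dict records."""
    cleaned, seen, missing = [], set(), Counter()
    for record in records:
        row = dict(record)  # copy so the caller's data is not mutated
        gender = row.get("gender")
        if isinstance(gender, str):
            # Unrecognized spellings become None rather than being guessed.
            row["gender"] = GENDER_LABELS.get(gender.strip().lower())
        # Skip rows that are exact duplicates once labels are normalized.
        fingerprint = tuple(sorted(row.items(), key=lambda item: item[0]))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        # Count missing values per field so gaps can be inspected later.
        for field, value in row.items():
            if value is None:
                missing[field] += 1
        cleaned.append(row)
    return cleaned, dict(missing)


# Illustrative records only; not real data.
records = [
    {"id": 1, "gender": "F", "income": 52000},
    {"id": 1, "gender": "female ", "income": 52000},  # duplicate after normalization
    {"id": 2, "gender": "Male", "income": None},      # missing income
    {"id": 3, "gender": None, "income": 41000},       # missing gender
]
cleaned, missing = clean_records(records)
print(len(cleaned), missing)
```

Missing values are counted rather than silently filled in, so it is possible to check whether the gaps fall disproportionately on one group before choosing an imputation or removal strategy.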

Data transformation techniques such as normalization and standardization can also mitigate bias. Normalization (min-max scaling) rescales a feature to a fixed range, typically [0, 1], while standardization centers a feature and scales it to zero mean and unit variance. Both prevent features with larger numeric ranges from disproportionately influencing scale-sensitive models, which matters when a dataset combines a feature like income, measured in tens of thousands, with one like age, measured in tens.
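As a rough illustration of the two transformations, the sketch below implements min-max normalization and z-score standardization with the standard library. The function names and the sample income and age values are assumptions chosen for the example.

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Center values to zero mean and scale them to unit (population) variance."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - mu) / sigma for v in values]


# Illustrative values: income spans tens of thousands, age spans tens.
incomes = [30000, 45000, 60000, 120000]
ages = [22, 35, 47, 61]

scaled_incomes = min_max_normalize(incomes)
scaled_ages = min_max_normalize(ages)
z_incomes = standardize(incomes)
z_ages = standardize(ages)
print(scaled_incomes, scaled_ages)
```

After either transformation, income and age occupy comparable numeric ranges. In practice the scaling parameters (minimum, maximum, mean, standard deviation) should be computed on the training data and reused unchanged on validation and test data.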

Feature Engineering for Bias Detection

Feature engineering is essential for identifying and mitigating bias in datasets. It involves creating new features, selecting relevant ones, and potentially removing or transforming features that might introduce bias. For example, if a dataset contains customer purchase information, feature engineering could create a feature representing the average purchase amount per customer segment. This can reveal biases in spending patterns across different groups.
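The customer-segment example can be sketched as follows. The record layout (`segment`, `amount`) and both helper functions are hypothetical and serve only to show the idea of deriving a per-segment feature.

```python
from collections import defaultdict


def average_purchase_by_segment(purchases):
    """Return the mean purchase amount for each customer segment."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for purchase in purchases:
        totals[purchase["segment"]] += purchase["amount"]
        counts[purchase["segment"]] += 1
    return {segment: totals[segment] / counts[segment] for segment in totals}


def add_segment_average(purchases):
    """Attach the segment average to every purchase as a new feature."""
    averages = average_purchase_by_segment(purchases)
    return [
        {**purchase, "segment_avg_amount": averages[purchase["segment"]]}
        for purchase in purchases
    ]


# Illustrative purchases only; not real data.
purchases = [
    {"customer": "c1", "segment": "A", "amount": 20.0},
    {"customer": "c2", "segment": "A", "amount": 40.0},
    {"customer": "c3", "segment": "B", "amount": 100.0},
]
averages = average_purchase_by_segment(purchases)
enriched = add_segment_average(purchases)
print(averages)
```

Comparing the averages across segments is a quick diagnostic. Whether the derived feature should also be fed to a model is a separate decision, since a segment-level feature can encode group membership.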

Feature engineering can also introduce new biases. A feature derived from an already biased feature carries that bias forward, and a seemingly neutral feature can act as a proxy for a protected attribute. A thorough understanding of the data and its potential biases is crucial for building fair and accurate models.

Handling Imbalanced Datasets

Many real-world datasets exhibit class imbalance, where certain classes are significantly underrepresented. A model trained on such data tends to favor the majority class and to misclassify minority-class examples more often. Techniques like oversampling the minority class or undersampling the majority class can mitigate this bias. These methods aim to create a more balanced representation of classes, allowing the model to learn from all classes effectively and fairly.
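A minimal sketch of random oversampling, assuming the data is held as parallel lists of feature rows and class labels. The `random_oversample` helper and the toy data are illustrative assumptions.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority rows until every class matches the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [row for row, y in zip(rows, labels) if y == label]
        for _ in range(target - count):
            out_rows.append(rng.choice(pool))  # sample with replacement
            out_labels.append(label)
    return out_rows, out_labels


# Illustrative data: six majority-class rows (0) and two minority-class rows (1).
rows = [[1.0], [1.2], [0.9], [1.1], [1.3], [0.8], [3.0], [3.2]]
labels = [0, 0, 0, 0, 0, 0, 1, 1]

balanced_rows, balanced_labels = random_oversample(rows, labels)
print(Counter(balanced_labels))
```

Resampling is normally applied to the training split only, so that evaluation data keeps the class distribution the model will face in use. Because oversampling repeats existing rows, it adds no new information and can encourage overfitting to the duplicated examples.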

Data Augmentation Techniques

Data augmentation techniques can mitigate bias when datasets are limited or skewed. By creating synthetic data points, these techniques increase the representation of underrepresented groups. For example, in a loan application dataset in which women are underrepresented, augmentation can generate synthetic applications from women applicants, increasing their share of the training data.

Synthetic data must not perpetuate or amplify existing biases. Augmentation techniques and their parameters should be chosen so that the generated data accurately reflects the underlying patterns without introducing new biases, and the output should be checked, because synthetic records built from biased originals inherit their bias.
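One simple way to create synthetic numeric records is to interpolate between pairs of existing records from the underrepresented group. The sketch below is a simplified variant in the spirit of SMOTE. It is not the full algorithm, which interpolates toward one of a sample's nearest neighbours rather than toward a random partner. The function name and the two-feature records (income, age) are assumptions for illustration.

```python
import random


def interpolate_samples(samples, n_new, seed=0):
    """Create n_new synthetic rows, each lying on the line segment between two real rows."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(samples, 2)  # two distinct real rows
        t = rng.random()               # interpolation weight in [0, 1)
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic


# Illustrative minority-group rows: [income, age]. Not real data.
minority = [[30000.0, 25.0], [42000.0, 31.0], [55000.0, 40.0]]
synthetic = interpolate_samples(minority, n_new=5)
print(len(synthetic))
```

Every synthetic row stays within the range of the real rows it was built from, which also means the method cannot correct errors or skew already present in those rows. It only applies to numeric features; categorical fields need a different treatment.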

Bias Detection and Evaluation Metrics

Identifying and evaluating biases in datasets and models is crucial for developing fairer AI systems. Appropriate metrics quantify bias in demographic representation, feature distributions, and model predictions. For example, the disparity in loan approval rates between demographic groups can be quantified with the disparate impact ratio, which divides the approval rate of the disadvantaged group by that of the advantaged group; a value of 1 indicates equal rates.
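The disparate impact ratio is straightforward to compute from binary outcomes and group labels. The sketch below assumes outcomes are coded 1 for approval and 0 for denial. The group names `group_a` and `group_b` are placeholders. A commonly cited rule of thumb, the four-fifths rule, treats a ratio below 0.8 as a signal that warrants closer review.

```python
def selection_rate(outcomes):
    """Fraction of favorable (1) outcomes."""
    return sum(outcomes) / len(outcomes)


def disparate_impact_ratio(outcomes, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by that of the privileged group."""
    unpriv = [o for o, g in zip(outcomes, groups) if g == unprivileged]
    priv = [o for o, g in zip(outcomes, groups) if g == privileged]
    if not unpriv or not priv:
        raise ValueError("both groups must have at least one record")
    priv_rate = selection_rate(priv)
    if priv_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return selection_rate(unpriv) / priv_rate


# Illustrative loan decisions: 1 = approved, 0 = denied. Not real data.
approvals = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["group_a"] * 5 + ["group_b"] * 5

ratio = disparate_impact_ratio(approvals, groups, "group_b", "group_a")
flagged = ratio < 0.8  # four-fifths rule of thumb
print(round(ratio, 3), flagged)
```

Here `group_a` is approved at a rate of 0.6 and `group_b` at 0.4, so the ratio is about 0.667 and the example is flagged. The ratio compares outcome rates only. It does not by itself say whether the difference is justified by legitimate factors.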

These metrics give a clearer picture of the types and extent of bias present. This knowledge is essential for developing strategies to reduce bias and create more equitable AI systems. Monitoring the metrics throughout the entire data pipeline, rather than once before deployment, helps maintain fairness and accuracy over time.

Ethical Considerations and Responsible AI Development

Fairness and Bias Detection

AI systems trained on large datasets can inadvertently perpetuate societal biases, leading to discriminatory outcomes in areas like loan applications, criminal justice, and hiring. Identifying these biases is crucial, as they can manifest subtly. Developing robust methods for detecting and quantifying biases is paramount for ethical AI development. This requires careful consideration of the training data and the AI system's impact on demographic groups.

Techniques like statistical analysis and algorithmic auditing can uncover hidden biases within AI models. It is essential to go beyond aggregate accuracy and examine whether the data represents some groups poorly and whether error rates differ between groups. Incorporating diverse perspectives during development can lead to more holistic and equitable AI solutions.
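A basic audit breaks model performance down by group instead of reporting one overall number. The sketch below assumes binary labels and predictions. The `group_error_rates` helper, the group names, and the data are illustrative assumptions.

```python
from collections import defaultdict


def group_error_rates(y_true, y_pred, groups):
    """Return accuracy, false positive rate and false negative rate per group."""
    stats = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            stats[group]["tp" if pred == 1 else "fn"] += 1
        else:
            stats[group]["fp" if pred == 1 else "tn"] += 1
    report = {}
    for group, s in stats.items():
        total = sum(s.values())
        negatives = s["fp"] + s["tn"]
        positives = s["tp"] + s["fn"]
        report[group] = {
            "accuracy": (s["tp"] + s["tn"]) / total,
            # None marks a rate that is undefined for this group.
            "false_positive_rate": s["fp"] / negatives if negatives else None,
            "false_negative_rate": s["fn"] / positives if positives else None,
        }
    return report


# Illustrative labels and predictions for two groups. Not real data.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["group_a"] * 4 + ["group_b"] * 4

report = group_error_rates(y_true, y_pred, groups)
overall_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall_accuracy, report)
```

In this toy data the overall accuracy is 0.75, which hides the fact that every error falls on `group_b`: its accuracy is 0.5 while `group_a` is classified perfectly.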

Transparency and Explainability

Understanding how an AI system makes decisions is vital for building trust and accountability. Without transparency, errors and biases are difficult to identify, which undermines confidence in the system. Explainable AI (XAI) techniques provide insights into the decision-making process of AI models, making them more understandable and trustworthy. This is especially important in high-stakes applications.

Developing methods for explaining AI predictions can involve visualizing model components, identifying key features influencing predictions, and providing human-readable explanations. Transparency fosters trust and enables better understanding of the system's limitations, allowing for informed human oversight.
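One model-agnostic way to identify the features that influence predictions is permutation importance: shuffle one feature column at a time and measure how much the model's accuracy drops. The sketch below is a minimal standard-library version. The `toy_model` rule, the feature layout (income, age), and the data are assumptions made for the example.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == y for row, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild the rows with only feature j replaced by shuffled values.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model: approves when income (feature 0) exceeds 50000; ignores age."""
    return int(row[0] > 50000)


# Illustrative rows: [income, age]. Labels come from the toy rule itself.
rows = [[30000, 25], [40000, 52], [45000, 33], [60000, 47], [80000, 29], [90000, 61]]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

Shuffling age never changes a prediction, so its importance is exactly zero, while shuffling income degrades accuracy. A large importance for a protected attribute, or for a feature that closely tracks one, is a prompt for further review rather than proof of unfair treatment.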

Accountability and Governance

Establishing clear lines of accountability is essential for ensuring AI systems are developed and deployed responsibly. Who is responsible when an AI system makes a harmful decision? This requires careful consideration of stakeholders' roles, from developers and deployers to users and regulators. Clear guidelines and regulations are necessary to ensure ethical practices and mitigate potential harms.

Creating robust governance frameworks that address AI system risks is paramount. This includes establishing mechanisms for oversight, redress, and enforcement of ethical standards. Regular audits and evaluations of AI systems are crucial to identify and address potential biases, errors, and unintended consequences. Effective governance structures ensure the long-term sustainability and ethical development of AI technologies.