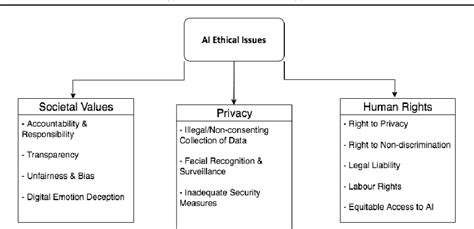

Understanding Algorithmic Bias

Algorithmic bias in AI refers to systematic and repeatable errors in a machine learning model that produce unfair or discriminatory outcomes. These errors often stem from skewed data sets that reflect societal prejudices, though feature choices, training procedures, and evaluation metrics can introduce them as well. Recognizing and mitigating these biases is crucial for ensuring the fair and ethical use of AI systems.

AI models are trained on data, and if that data reflects existing societal biases, the model will likely perpetuate them. This can lead to unfair or discriminatory outcomes in areas like loan applications, hiring processes, and even criminal justice.

Data Collection and Bias

The quality and representativeness of the data used to train AI models are paramount. If the data collection process is flawed or biased, the resulting model will inherit those biases. For example, if a dataset used to train a facial recognition system predominantly contains images of people of one race, the model is likely to be less accurate on images of people from other racial groups.

Biased data can lead to inaccurate or unfair predictions, making it important to scrutinize the source and composition of the data used to train AI algorithms.
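As a minimal sketch of what scrutinizing a dataset's composition can look like, the Python snippet below computes each group's share of the records and flags groups that fall below a chosen share. The field name `group`, the toy records, and the 20% threshold are hypothetical and chosen only for illustration.

```python
from collections import Counter

def group_shares(records, key):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}

def underrepresented(shares, min_share):
    """List groups whose share falls below min_share (an illustrative threshold)."""
    return sorted(g for g, s in shares.items() if s < min_share)

# Hypothetical toy dataset: 8 records from group A, 1 from B, 1 from C.
records = [{"group": "A"}] * 8 + [{"group": "B"}, {"group": "C"}]
shares = group_shares(records, "group")
flagged = underrepresented(shares, min_share=0.2)
print(shares)
print(flagged)
```

A check like this only shows who is missing from the data. It does not say why, and it does not by itself establish that the resulting model will be fair.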

Bias in Feature Selection

The features used to train a model can also introduce bias. If certain features are disproportionately weighted or if some features are excluded due to preconceived notions, the model's ability to make fair and unbiased predictions will be affected. Carefully considering which features are relevant and how they are treated is vital for preventing bias.
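One way to examine how a feature is treated is to check whether it tracks a sensitive attribute closely enough to act as a stand-in for it. The sketch below is an assumption about how such a check might look, not a method prescribed here. It computes a plain Pearson correlation between each feature and a binary group indicator. The feature names and values are hypothetical.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical data: 0/1 marks membership in a protected group.
protected = [0, 0, 0, 1, 1, 1]
neighborhood_score = [1, 2, 3, 4, 5, 6]   # rises with group membership
visits_per_week = [1, 2, 3, 1, 2, 3]      # unrelated to group membership

r_proxy = pearson(neighborhood_score, protected)
r_neutral = pearson(visits_per_week, protected)
print(round(r_proxy, 3), round(r_neutral, 3))
```

A high correlation is a prompt for closer review rather than proof of bias, and a low linear correlation does not rule out more complex relationships.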

Bias in Model Training and Evaluation

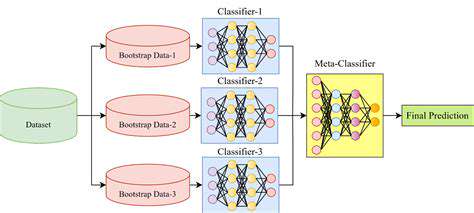

The training process itself can also introduce bias. Different training algorithms might amplify or mask biases in the data, depending on their design and implementation. Additionally, the evaluation metrics used to assess the model's performance might inadvertently favor certain outcomes over others, leading to biased results.
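To illustrate how an evaluation metric can hide a disparity, the sketch below compares overall accuracy with accuracy broken down by group. The labels, predictions, and group assignments are made-up toy values, and the function name `accuracy_by_group` is hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

# Toy data: the model is right on every group A example and wrong on every group B example.
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 0, 1]
groups = ["A"] * 8 + ["B"] * 2

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)
print(per_group)
```

In this toy case the overall accuracy is 0.8, yet every prediction for group B is wrong. A single aggregate number would not reveal that.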

Mitigating Bias in AI Algorithms

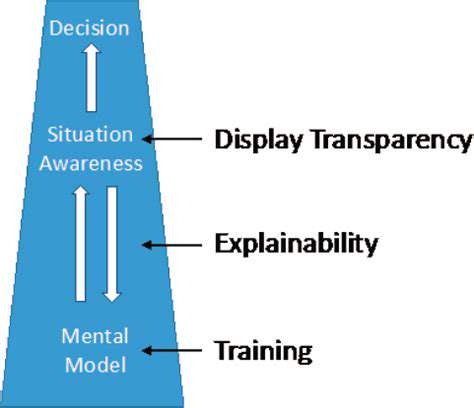

Addressing algorithmic bias requires a multi-faceted approach. This includes careful data collection and analysis to identify and correct any biases present in the data. Furthermore, diverse teams involved in model development and evaluation can help identify and prevent biases from creeping into the process. Transparency and explainability in AI models are also critical to understanding how biases might be manifesting and how to mitigate them.
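As one example of correcting an imbalance in the data, the sketch below assigns inverse-frequency sample weights so that each group contributes the same total weight during training. This is only one of several possible techniques, and the group labels and the helper name `balanced_weights` are hypothetical.

```python
from collections import Counter

def balanced_weights(groups):
    """Weight each sample by total / (n_groups * group_count), so every group
    carries the same total weight regardless of how many samples it has."""
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[g]) for g in groups]

# Toy data: group A has 8 samples, group B has 2.
groups = ["A"] * 8 + ["B"] * 2
weights = balanced_weights(groups)

# Sum the weights per group to confirm both groups now carry equal weight.
weight_per_group = {
    g: sum(w for w, member in zip(weights, groups) if member == g)
    for g in sorted(set(groups))
}
print(weight_per_group)
```

Weights like these would typically be passed to a training routine that accepts per-sample weights. Whether reweighting is appropriate depends on the task and on why the imbalance exists.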

Ethical Considerations and Responsible AI Development

The ethical implications of algorithmic bias are profound. The use of AI systems in high-stakes domains like criminal justice, healthcare, and finance demands careful consideration of the potential for bias to affect individuals and groups. Developers have a responsibility to prioritize fairness and equity in the design, implementation, and deployment of AI systems. This includes ongoing monitoring and evaluation to identify and correct any emerging biases.

Privacy Concerns and Data Security: Safeguarding Consumer Information

Protecting Personal Information in the Digital Age

In today's interconnected world, personal data is increasingly vulnerable to breaches and misuse. Individuals and organizations alike must prioritize robust data security measures to safeguard sensitive information. This includes implementing strong encryption protocols and access controls to prevent unauthorized access and protect user privacy. Data breaches can have devastating consequences, ranging from financial losses and identity theft to reputational damage and legal repercussions.

Data Collection Practices and User Consent

Understanding how organizations collect, use, and share personal data is crucial for maintaining user trust. Transparency in data practices is essential, and individuals should be clearly informed about what data is being collected, how it will be used, and with whom it will be shared. Obtaining explicit consent from users before collecting or processing their personal data is paramount. This requires clear and concise language that users can easily understand.
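A minimal sketch of purpose-based consent checking follows, assuming consent is recorded per user as a set of explicitly granted purposes. The class name `ConsentRecord`, the purpose strings, and the user ID are hypothetical. A real system would also need to record when and how consent was given and allow it to be withdrawn.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    """Purposes a user has explicitly agreed to (hypothetical structure)."""
    user_id: str
    purposes: set = field(default_factory=set)

def may_process(record, purpose):
    """Allow processing only for a purpose the user explicitly consented to."""
    return purpose in record.purposes

record = ConsentRecord(user_id="u-123", purposes={"order_fulfilment", "email_newsletter"})
print(may_process(record, "email_newsletter"))
print(may_process(record, "ad_personalization"))
```

The default here is denial: a purpose that was never granted is treated as not consented to.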

The Role of Encryption in Data Security

Encryption plays a vital role in safeguarding sensitive information. It transforms data into an unreadable format that cannot practically be deciphered without the corresponding key. Robust encryption is critical for protecting data both when it is transmitted over networks and when it is stored on devices. If a data breach occurs, properly encrypted information remains indecipherable to the attacker, provided the decryption key has not been compromised as well.

The Importance of Access Control Measures

Implementing strict access control measures is essential to limit access to sensitive data to authorized personnel only. This includes multi-factor authentication, role-based access controls, and regular security audits. By carefully controlling access, organizations can significantly reduce the risk of unauthorized access and data breaches. Regularly reviewing and updating access controls is crucial to maintain effectiveness.
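Role-based access control can be sketched as a mapping from roles to permitted actions, with anything not listed denied by default. The role names and permission strings below are hypothetical. Multi-factor authentication and auditing are out of scope for this sketch.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read_reports"},
    "admin": {"read_reports", "read_customer_pii", "manage_users"},
}

def is_allowed(role, permission):
    """Return True only if the role is known and explicitly grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())

print(is_allowed("analyst", "read_reports"))
print(is_allowed("analyst", "read_customer_pii"))
print(is_allowed("contractor", "read_reports"))
```

Keeping the mapping in one place also makes the regular reviews mentioned above easier, since the full set of grants can be read at a glance.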

The Impact of Data Breaches on Individuals and Organizations

Data breaches can have severe consequences for both individuals and organizations. For individuals, a breach can lead to financial losses, identity theft, and emotional distress. Organizations face significant financial penalties, reputational damage, and legal liabilities. The costs associated with data breaches can be substantial, affecting not only the immediate victims but also the broader community. Implementing proactive security measures to prevent breaches is generally more cost-effective than dealing with their repercussions.

Addressing the Rise of Cyber Threats

The threat landscape is constantly evolving, with cybercriminals developing new and sophisticated techniques to exploit vulnerabilities. Organizations must stay ahead of these threats by continuously updating their security protocols and investing in advanced threat detection and response systems. Staying informed about emerging threats and vulnerabilities is crucial for effectively mitigating risks. Proactive security measures and regular training for employees are essential in preventing data breaches.

The Legal and Ethical Considerations of Data Privacy

Data privacy regulations, such as GDPR and CCPA, are becoming increasingly stringent. Organizations must comply with these regulations to avoid legal penalties and maintain public trust. Ethical considerations also play a critical role in data handling, demanding responsible and transparent practices. Understanding and adhering to legal and ethical guidelines is essential for maintaining a secure and trustworthy digital environment. This includes being mindful of the potential impact of data collection and use on individuals and society.

The Future of AI Ethics in Marketing: A Collaborative Approach

Defining AI Ethics in Marketing

AI ethics in marketing encompasses a broad range of considerations, from ensuring fairness and transparency in algorithms used for customer segmentation and targeted advertising to avoiding the perpetuation of harmful biases within AI systems. This includes the crucial need to understand how AI tools might inadvertently discriminate against certain demographics or amplify existing societal prejudices. A key element is designing and implementing AI systems that respect and uphold fundamental human rights and values, ensuring equitable access to products and services for all consumers.

Transparency and Explainability in AI Marketing Tools

Consumers deserve clear and understandable explanations about how AI systems influence their experiences. This means providing transparent mechanisms for consumers to understand the decision-making processes behind personalized recommendations, targeted ads, and other AI-driven marketing strategies. Explainability in AI marketing is not merely a technical requirement; it's a crucial aspect of building trust and fostering a positive customer experience.

Lack of transparency can lead to distrust and, potentially, regulatory scrutiny. Developing methods for explaining complex AI decision-making processes in a simple and accessible way is vital for building public confidence in, and supporting the ethical use of, AI in marketing.
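For a simple linear scoring model, one accessible form of explanation is to show how much each input contributed to the final score. The sketch below assumes such a model. The feature names, weights, and customer values are hypothetical, and more complex models generally need dedicated explanation techniques.

```python
def explain(weights, features):
    """Return (feature, contribution) pairs for a linear score,
    ordered from largest to smallest absolute contribution."""
    contributions = [(name, weights[name] * features[name]) for name in weights]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)

# Hypothetical model weights and one customer's feature values.
weights = {"recent_purchases": 0.5, "email_opens": 0.25, "days_since_visit": -0.125}
customer = {"recent_purchases": 6, "email_opens": 8, "days_since_visit": 8}

explanation = explain(weights, customer)
score = sum(value for _, value in explanation)
print(score)
for name, value in explanation:
    print(f"{name}: {value:+.2f}")
```

A ranked list like this could back a plain-language message such as "recommended mainly because of your recent purchases".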

Bias Mitigation in AI-Powered Marketing Strategies

AI systems can inherit and amplify existing biases present in the data they are trained on. This can lead to discriminatory outcomes in marketing, potentially marginalizing certain demographics. Addressing this necessitates proactive efforts to identify and mitigate biases in training data, algorithm design, and model evaluation processes. This includes diverse representation in the datasets used to train AI systems and the careful consideration of potential biases in the design of AI-driven marketing campaigns.
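One way to look for a disparity in a campaign is to compare how often each demographic group is selected to receive an offer. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The data and group labels are invented, and the 0.8 review threshold is an illustrative assumption, not a rule stated here.

```python
from collections import defaultdict

def selection_rates(selected, groups):
    """Fraction of each group that was selected (for example, shown an offer)."""
    chosen = defaultdict(int)
    total = defaultdict(int)
    for flag, group in zip(selected, groups):
        total[group] += 1
        chosen[group] += int(flag)
    return {g: chosen[g] / total[g] for g in total}

def parity_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest

# Toy campaign: 6 of 10 people in group A were shown the offer, 3 of 10 in group B.
groups = ["A"] * 10 + ["B"] * 10
selected = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

rates = selection_rates(selected, groups)
ratio = parity_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold only
print(rates, ratio, needs_review)
```

A low ratio signals that the targeting deserves a closer look. It does not by itself show that the difference is unjustified.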

Accountability and Responsibility in AI Marketing Decisions

Determining accountability and responsibility for errors or harmful outcomes stemming from AI marketing tools is crucial. Clear lines of responsibility need to be established within organizations employing AI, ensuring that individuals or teams are accountable for the ethical implications of their AI-driven decisions. This includes mechanisms for redress if consumers are negatively affected by AI-generated marketing content or actions.

The Role of Human Oversight in AI Marketing

While AI can automate many marketing tasks, human oversight remains essential. People should actively monitor AI systems, identify potential biases, and ensure compliance with ethical guidelines, so that humans retain control over how AI is used in marketing. Organizations need to establish clear guidelines for when and how humans intervene in AI-driven marketing decisions.
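One possible guideline for human intervention is to act automatically only on decisions the system is confident about and send the rest to a reviewer. The sketch below assumes each decision carries a confidence value between 0 and 1. The decision IDs, the confidence values, and the 0.9 threshold are hypothetical.

```python
def route(decisions, threshold):
    """Split decision IDs into those applied automatically and those
    queued for human review, based on a confidence threshold."""
    automatic, review = [], []
    for decision in decisions:
        if decision["confidence"] >= threshold:
            automatic.append(decision["id"])
        else:
            review.append(decision["id"])
    return automatic, review

# Hypothetical AI-generated marketing decisions with confidence values.
decisions = [
    {"id": "offer-1", "confidence": 0.97},
    {"id": "offer-2", "confidence": 0.62},
    {"id": "offer-3", "confidence": 0.90},
]
automatic, review = route(decisions, threshold=0.9)
print(automatic)
print(review)
```

Confidence is only one possible trigger. An organization might also route decisions by the type of customer affected or the potential for harm.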

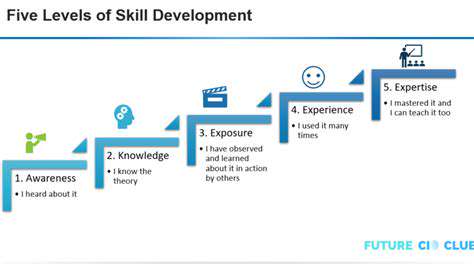

Collaboration and Education for Ethical AI Development

Developing ethical AI in marketing requires collaboration between technologists, marketers, ethicists, and policymakers. Organizations need to engage in open dialogue about AI ethics to establish a shared understanding and best practices. Educating and training marketing professionals in AI ethics is crucial for ensuring that AI systems are implemented responsibly. This includes understanding how AI systems can be used to promote inclusivity and address societal inequalities.