Deep Learning Architectures for Market Forecasting

Deep Learning Architectures for Machine Learning

Deep learning has transformed machine learning by making it practical to train models that tackle highly complex problems. These architectures are loosely inspired by the brain: they stack layers of interconnected artificial neurons. Each layer transforms the output of the previous one, so successive layers can represent increasingly abstract features of the input. This layered approach lets models capture complex patterns in data, which underlies their strong performance across many applications.

Three widely used deep learning architecture families are Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Generative Adversarial Networks (GANs), each suited to different data types and purposes. CNNs specialize in grid-structured data such as images and video, whereas RNNs process sequential data such as text and time series. GANs, by contrast, are generative: they learn to produce new data samples that resemble the training data.
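As a rough illustration of how the two architectures treat a price series differently, the NumPy sketch below applies a 1D convolution (a fixed three-step averaging kernel) and a simple Elman-style RNN with random, untrained weights to synthetic data. The helpers `conv1d_valid` and `rnn_forward`, the synthetic random-walk prices, and all weight values are illustrative assumptions, not a library API or a trained model.

```python
import numpy as np

def conv1d_valid(x, kernel):
    """Slide one shared kernel over x with no padding ('valid' mode).

    Like deep-learning convolution layers, this computes a cross-correlation
    (the kernel is not flipped). Each output depends only on a local window.
    """
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])

def rnn_forward(x, W_xh, W_hh, b_h):
    """Run a simple Elman RNN over a 1-D sequence; return every hidden state.

    h_t = tanh(W_xh * x_t + W_hh @ h_{t-1} + b_h), so each state depends on
    the entire prefix x_0..x_t, not just a fixed window.
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in x:
        h = np.tanh(W_xh * x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
prices = 100.0 + np.cumsum(rng.normal(size=20))  # hypothetical random-walk prices
returns = np.diff(prices)                          # 19 one-step price changes

# CNN-style feature: a fixed 3-step moving average (real CNNs learn the kernel).
features = conv1d_valid(returns, np.full(3, 1 / 3))

# RNN-style summary with random, untrained weights (hidden size 4 is arbitrary).
hidden = 4
states = rnn_forward(
    returns,
    W_xh=rng.normal(scale=0.5, size=hidden),
    W_hh=rng.normal(scale=0.5, size=(hidden, hidden)),
    b_h=np.zeros(hidden),
)
print(features.shape, states.shape)  # (17,) (19, 4)
```

Each convolution output sees only three neighboring returns, while each RNN hidden state depends on every return up to that step. Real CNN and RNN layers learn their kernels and weights from data rather than fixing them by hand.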

Key Components of Deep Learning Architectures

Activation functions play a pivotal role in these architectures. They introduce non-linearity, allowing models to capture complex relationships in data. Without them, any stack of layers would collapse into a single linear transformation, so even a deep network would be no more expressive than a linear regression model.
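The collapse of purely linear stacks can be checked numerically. This NumPy sketch, with random weights chosen only for illustration, shows that two linear layers equal a single linear layer, and that inserting a ReLU (one common activation, assumed here) lets hand-picked weights compute |x|, a function no single affine map can represent.

```python
import numpy as np

rng = np.random.default_rng(42)
W1, b1 = rng.normal(size=(8, 3)), rng.normal(size=8)  # layer 1: 3 -> 8 units
W2, b2 = rng.normal(size=(2, 8)), rng.normal(size=2)  # layer 2: 8 -> 2 units
x = rng.normal(size=3)

# Two stacked linear layers with no activation in between...
two_layers = W2 @ (W1 @ x + b1) + b2
# ...equal ONE linear layer with weights W2 @ W1 and bias W2 @ b1 + b2.
one_layer = (W2 @ W1) @ x + (W2 @ b1 + b2)
print(np.allclose(two_layers, one_layer))  # True

def relu(z):
    return np.maximum(z, 0.0)

# Hand-picked weights: with a ReLU in between, the network computes |x|.
V1 = np.array([[1.0], [-1.0]])
V2 = np.array([[1.0, 1.0]])

def relu_net(v):
    return (V2 @ relu(V1 @ np.array([v])))[0]

# Any affine f satisfies f(1) + f(-1) == 2 * f(0); |x| does not (2 vs 0).
print(relu_net(1.0) + relu_net(-1.0), 2 * relu_net(0.0))  # 2.0 0.0
```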

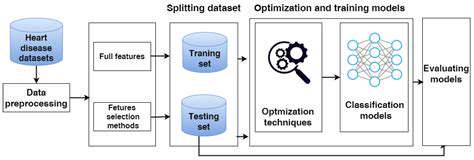

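Training adjusts the network's weights and biases to minimize a loss function that measures the gap between predictions and actual outcomes. Optimization methods such as stochastic gradient descent (SGD) repeatedly compute the gradient of the loss on small batches of data and move the parameters a small step in the opposite direction.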
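A minimal sketch of mini-batch SGD, assuming a linear model and a mean-squared-error loss on synthetic data (all values hypothetical), shows the update rule: compute the gradient on a small batch, then step the parameters against it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic regression data (hypothetical): y = 2*x1 - 1*x2 + 0.5 + noise.
true_w, true_b = np.array([2.0, -1.0]), 0.5
X = rng.normal(size=(1000, 2))
y = X @ true_w + true_b + rng.normal(scale=0.1, size=1000)

w, b = np.zeros(2), 0.0
lr, batch_size, epochs = 0.05, 32, 20

for _ in range(epochs):
    order = rng.permutation(len(X))            # reshuffle every epoch
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        err = X[idx] @ w + b - y[idx]          # prediction minus target
        # Gradients of the loss 0.5 * mean(err**2) w.r.t. w and b.
        grad_w = X[idx].T @ err / len(idx)
        grad_b = err.mean()
        w -= lr * grad_w                       # step against the gradient
        b -= lr * grad_b

print(np.round(w, 2), round(b, 2))
```

Here the gradients are derived by hand for a linear model; in a deep network, backpropagation computes the same quantities layer by layer, and the update step is unchanged.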

Choosing and configuring the architecture, including the number of layers and the number of neurons per layer, strongly affects how well a model performs. Larger networks can represent more complex functions but are more expensive to train and run, so these choices must balance accuracy against computational efficiency.
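One concrete way to see how layer count and width drive cost is to count the trainable parameters of a fully connected network. The helper below and the example layer sizes (for instance, 30 lagged returns in, one forecast out) are hypothetical.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists units per layer, input first, e.g. [30, 64, 1].
    Each layer mapping n_in -> n_out has n_in * n_out weights plus n_out biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

print(dense_param_count([30, 64, 1]))        # 2049
print(dense_param_count([30, 64, 64, 1]))    # 6209
print(dense_param_count([30, 256, 256, 1]))  # 73985
```

Widening the two hidden layers from 64 to 256 units multiplies the parameter count by roughly twelve, which translates directly into more memory and compute per training step.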

Applications of Deep Learning Architectures

These architectures are used across many fields. In computer vision, CNNs perform image classification, object detection, and image segmentation, and they have substantially improved accuracy over conventional techniques.

In natural language processing, RNNs and related sequence models power machine translation, sentiment analysis, and text generation, substantially advancing how well machines can interpret and produce human language.

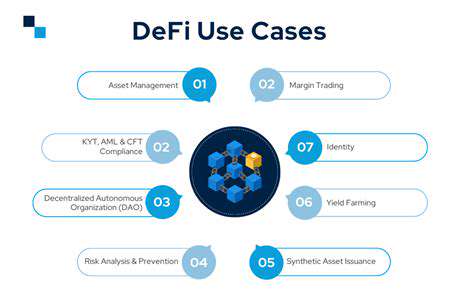

Beyond these areas, deep learning is applied to medical image analysis, fraud detection, and financial forecasting. The flexibility and representational power of these models make them a valuable tool for complex problems across many industries.

Reinforcement Learning for Adaptive Trading Strategies

Understanding Reinforcement Learning

Reinforcement learning (RL) is a machine learning paradigm in which an agent learns a decision-making strategy (a policy) by interacting with an environment. For adaptive trading, RL can automate decisions and update them as market conditions change, with the aim of improving investment outcomes over time. This contrasts with conventional rule-based systems, whose fixed rules often struggle with the complexity and volatility of financial markets.

At the core of RL is a learning agent that improves by trial and error. The agent observes market conditions, takes actions, and receives positive or negative rewards for them. Over many interactions it learns to maximize its cumulative reward, which in trading is usually tied to profitable decisions. This iterative learning loop is the source of RL's flexibility.
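The cumulative reward the agent maximizes is commonly formalized as the discounted return G_t = r_{t+1} + γ·r_{t+2} + γ²·r_{t+3} + ..., where the discount factor γ (between 0 and 1) weights near-term rewards more heavily than distant ones. A minimal Python sketch, assuming a finite episode and hypothetical reward values:

```python
def discounted_return(rewards, gamma=0.99):
    """Return rewards[0] + gamma*rewards[1] + gamma**2*rewards[2] + ...

    Iterating backwards applies the recursion G = r + gamma * G_next.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

# Hypothetical per-step rewards, e.g. daily P&L in arbitrary units.
print(round(discounted_return([1.0, -0.5, 2.0], gamma=0.9), 4))  # 2.17
```

With γ = 0.9, the reward of 2.0 two steps ahead counts for 0.81 × 2.0 = 1.62, so the return is 1.0 − 0.45 + 1.62 = 2.17.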

Key Components of RL in Trading

Several elements determine how effective RL is in trading: the definition of the environment, the specification of the reward function, and the choice of algorithm. The environment supplies market data such as historical prices, trading volumes, and sentiment indicators. The reward function scores how good each action was, ideally balancing returns against risk. RL algorithms, including Q-learning and deep reinforcement learning methods, offer different ways to learn an optimal strategy from the environment and the reward signal.
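The three components can be seen together in a deliberately tiny example: tabular Q-learning on a synthetic market. Everything here is an illustrative assumption rather than a realistic setup: returns are fixed at ±1% with an 80% chance of continuing in the same direction, the state is just the direction of the latest return, the actions are short/flat/long, and the reward is P&L minus a hypothetical quadratic risk penalty (one simple way to balance return against risk).

```python
import numpy as np

rng = np.random.default_rng(7)

# Environment (hypothetical): daily returns of +/-1% whose direction persists
# with probability 0.8, so the latest move carries information.
n_steps, p_persist, step_size = 100_000, 0.8, 0.01
flips = rng.random(n_steps) > p_persist
signs = np.concatenate([[1], np.cumprod(np.where(flips, -1, 1))])
returns = step_size * signs                 # length n_steps + 1

positions = np.array([-1, 0, 1])            # actions: short, flat, long
risk_aversion = 10.0                        # hypothetical penalty weight

def reward(position, next_ret):
    """Reward = P&L minus a quadratic risk penalty on that P&L."""
    pnl = position * next_ret
    return pnl - risk_aversion * pnl ** 2

def state_of(ret):
    """State = direction of the latest return (0 = down, 1 = up)."""
    return int(ret > 0)

# Algorithm: tabular Q-learning with epsilon-greedy exploration.
alpha, gamma, epsilon = 0.01, 0.9, 0.1
Q = np.zeros((2, len(positions)))
explore = rng.random(n_steps) < epsilon
random_actions = rng.integers(len(positions), size=n_steps)

for t in range(n_steps):
    s = state_of(returns[t])
    a = random_actions[t] if explore[t] else int(np.argmax(Q[s]))
    r = reward(positions[a], returns[t + 1])   # position is held over the next return
    s_next = state_of(returns[t + 1])
    td_target = r + gamma * Q[s_next].max()
    Q[s, a] += alpha * (td_target - Q[s, a])   # move Q toward the TD target

greedy = positions[Q.argmax(axis=1)]
print({"after down": int(greedy[0]), "after up": int(greedy[1])})
```

Because the synthetic returns trend, the learned greedy policy should go short after a down move and long after an up move. Deep reinforcement learning replaces the Q table with a neural network so that the state can include many continuous features, such as prices, volumes, and sentiment scores.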

Adapting to Market Volatility

Financial markets fluctuate constantly, so trading strategies must adjust accordingly. RL policies suit such conditions because they can adjust positions in response to market shifts. This adaptability matters both for managing risk and for capturing opportunities during volatile periods. For instance, an RL system might change its trade size or trading frequency based on observed volatility, aiming to limit losses during downturns and capture gains during favorable trends.
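A simple way observed volatility can drive trade size is volatility targeting: scale the position by a target volatility divided by recent realized volatility. The sketch below is a rule-based heuristic, not an RL algorithm; in an RL system, realized volatility might instead be fed to the agent as part of its state, or used to scale its chosen action. The function name, 20-step window, 1% target, leverage cap, and synthetic regimes are all hypothetical.

```python
import numpy as np

def vol_scaled_position(returns, target_vol=0.01, window=20, max_leverage=1.0):
    """Hypothetical volatility-targeting rule for a long position.

    The position at step t is target_vol / (std of returns[t-window:t]),
    capped at max_leverage, so it uses only past data (no look-ahead).
    The first `window` positions are 0 because there is not enough history.
    """
    returns = np.asarray(returns, dtype=float)
    positions = np.zeros(len(returns))
    for t in range(window, len(returns)):
        vol = returns[t - window:t].std(ddof=1)
        positions[t] = min(max_leverage, target_vol / vol) if vol > 0 else max_leverage
    return positions

rng = np.random.default_rng(1)
calm = rng.normal(0.0, 0.005, 250)    # synthetic calm regime, about 0.5% daily vol
stormy = rng.normal(0.0, 0.03, 250)   # synthetic volatile regime, about 3% daily vol
pos = vol_scaled_position(np.concatenate([calm, stormy]))
print(round(pos[100:250].mean(), 2), round(pos[300:].mean(), 2))
```

In the calm regime the position stays at the leverage cap, while in the volatile regime it shrinks to roughly a third, which is the loss-limiting behavior described above.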

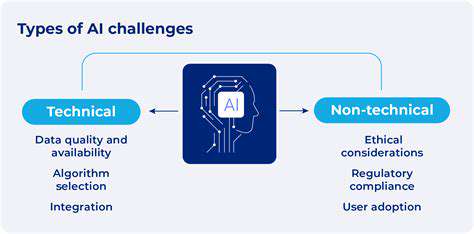

Overcoming Implementation Challenges

Applying RL to trading poses several obstacles. Accurately modeling complex financial environments is one significant difficulty. Another is acquiring enough historical market data, which can be costly to collect and process. RL models also raise interpretability concerns, since the rationale behind their decisions is not always easy to understand. Addressing these issues requires careful algorithm selection, attention to data quality, and thoughtful reward function design.

Ethical Implications and Future Prospects

As RL-driven trading systems advance, ethical considerations become increasingly pertinent. The risks that such systems could enable market manipulation or reinforce existing inequalities deserve careful scrutiny. Future work should prioritize more transparent, interpretable RL models so that AI is applied ethically in finance. Combining RL with other AI techniques, such as sentiment analysis, may also yield more sophisticated and responsive trading strategies.