Choosing the Right Evaluation Metrics

Choosing the Right Evaluation Metrics for Your Project

Selecting appropriate evaluation metrics is crucial for any project, because the metrics determine whether the results actually reflect the project's goals and intended outcomes. A poorly chosen metric can lead to misinterpreted data, incorrect conclusions, wasted resources, and flawed decisions, so it is worth understanding the nuances of the available metrics before committing to one.

Different evaluation metrics are suitable for different types of projects. A metric that works well for a website's user engagement might not be appropriate for assessing the performance of a machine learning model.

Understanding the Project's Objectives

Choosing the right evaluation metrics starts with a clear understanding of the project's objectives. Clearly defined desired outcomes are the foundation of accurate and meaningful evaluations. This includes identifying the key performance indicators (KPIs) that will measure progress toward those goals.

Considering the specific context of the project is vital. For instance, a project focused on increasing customer retention might use different metrics compared to a project focused on improving conversion rates.

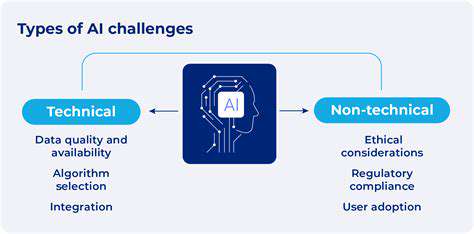

Considering Different Types of Metrics

There are many evaluation metrics, each with its own strengths and weaknesses, and understanding these differences is essential for choosing the one that fits your needs. Quantitative metrics provide numerical measurements, while qualitative metrics provide descriptive information; which type you need depends on your goals.

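Common quantitative metrics for classification and retrieval systems include accuracy, precision, recall, F1-score, and mean average precision (mAP). Each gives a different perspective on performance: accuracy is the fraction of all predictions that are correct, precision is the fraction of predicted positives that are actually positive, recall is the fraction of actual positives that are found, the F1-score is the harmonic mean of precision and recall, and mAP summarizes ranking quality by averaging the average precision over queries or classes.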
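To make the differences between the first four metrics concrete, here is a minimal sketch for a binary classifier, computed directly from counts of true positives, false positives, and false negatives. The function name `classification_metrics` and the sample labels are hypothetical and chosen only for illustration; mAP is ranking-based and is not covered here.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                           positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for a binary task (hypothetical helper)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when there are no predicted/actual positives.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 3 true positives, 2 false positives, 1 false negative.
metrics = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 1, 0, 1, 0, 1, 1, 0])
print({name: round(value, 3) for name, value in metrics.items()})
# → {'accuracy': 0.625, 'precision': 0.6, 'recall': 0.75, 'f1': 0.667}
```

The same predictions score differently under each metric, which is why the metric should follow from what a false positive or a false negative costs in your project.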

Analyzing Data Characteristics

The characteristics of the data being evaluated play a significant role in determining the appropriate metric. For example, if the data is highly skewed, a simple average can be dominated by a few extreme values, and a median or another robust summary may describe typical behavior better. Consider the distribution of the data, its potential outliers, and its overall quality when selecting a metric, so that the chosen metric reflects the underlying trends and patterns rather than artifacts of the data.
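As a small illustration of the skewness point, the sketch below compares the mean and the median of a hypothetical set of response times containing one large outlier. The data is invented for illustration, and the median is shown as one common robust alternative, not the only option.

```python
import statistics

# Hypothetical response times in milliseconds: six typical values and one outlier.
response_times_ms = [120, 130, 125, 118, 122, 127, 5000]

mean_ms = statistics.mean(response_times_ms)      # pulled far upward by the outlier
median_ms = statistics.median(response_times_ms)  # middle value of the sorted data

print(f"mean = {mean_ms:.1f} ms, median = {median_ms} ms")
# → mean = 820.3 ms, median = 125 ms
```

A single extreme value makes the mean roughly six times larger than any typical observation, while the median stays close to what most requests experience.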

Data characteristics such as the presence of missing values, outliers, or different data formats may necessitate specific evaluation metrics that can handle these nuances.

Considering the Resources Available

The resources available to collect and analyze data significantly influence the feasibility of using certain evaluation metrics. The time, budget, and expertise required for data collection and analysis should be carefully weighed when selecting metrics. A complex metric may not be feasible if the resources are limited.

Choosing a metric that is easy to implement and requires minimal resources can help keep the project on schedule and within budget.

Evaluating the Feasibility of Implementation

Before adopting a metric, assess whether it can realistically be implemented within the project's constraints, including the technical infrastructure, expertise, and time required for data collection, analysis, and reporting. A poorly planned or poorly executed implementation can produce inaccurate or misleading results.

The ability to collect the necessary data and accurately measure the chosen metric should be evaluated before finalizing the selection.

Cross-Validation Techniques

Understanding the Importance of Cross-Validation

Cross-validation is a widely used technique in machine learning for estimating how well a model generalizes to unseen data, a critical aspect of building reliable and robust machine learning systems. Instead of relying on a single train-test split, whose result depends on which examples happen to land in the test set, cross-validation evaluates the model on multiple train-test splits and combines the results into a more stable estimate. Cross-validation does not prevent overfitting by itself, but it makes overfitting easier to detect and gives a more trustworthy estimate of the model's performance on new data.

By partitioning the data into multiple subsets and repeatedly training and testing the model on different combinations of them, cross-validation shows how much the model's performance varies from one subset of the data to another. This gives a more reliable picture of the model's true capabilities and supports better-informed decisions about model selection and tuning.

K-Fold Cross-Validation

K-fold cross-validation is a widely used technique. It divides the dataset into K mutually exclusive subsets, often called folds. The model is trained on K-1 folds and tested on the remaining fold. This process is repeated K times, with each fold acting as the test set exactly once. The results from each fold are then averaged to obtain a more robust estimate of the model's performance.
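The procedure above can be sketched in plain Python. This is a minimal illustration, not a library implementation: `k_fold_indices`, `k_fold_cv_score`, and the trivial mean-predicting "model" are hypothetical names chosen for the example, and the optional shuffle with a fixed seed is an assumption (shuffling is common before splitting independent samples).

```python
import random
from typing import Callable, Iterator, List, Sequence, Tuple


def k_fold_indices(n_samples: int, k: int, shuffle: bool = True,
                   seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k mutually exclusive folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]  # the other k-1 folds
        yield train, test
        start += size


def k_fold_cv_score(fit: Callable, score: Callable, X: Sequence, y: Sequence,
                    k: int = 5, seed: int = 0) -> Tuple[float, List[float]]:
    """Train on k-1 folds, score on the held-out fold, and average the k scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k, seed=seed):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in test_idx], [y[i] for i in test_idx]))
    return sum(scores) / len(scores), scores


# Usage with a deliberately trivial "model" that predicts the training-set mean.
def fit_mean(X_train, y_train):
    return sum(y_train) / len(y_train)


def mean_absolute_error(model, X_test, y_test):
    return sum(abs(target - model) for target in y_test) / len(y_test)


X = list(range(10))
y = [2.0 * x for x in X]
mean_mae, fold_maes = k_fold_cv_score(fit_mean, mean_absolute_error, X, y, k=5)
print(f"per-fold MAE: {[round(s, 2) for s in fold_maes]}, mean: {mean_mae:.2f}")
```

Passing `fit` and `score` as functions keeps the splitting logic independent of any particular model, so the same loop works for whatever model and metric a project uses.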

The choice of K is important. Common choices are K = 5 and K = 10. A larger K means each model is trained on a larger share of the data, so the estimate is less pessimistically biased, but it requires K training runs and can increase the variance of the estimate. A smaller K, such as 5, is cheaper to compute and is often sufficient for large datasets.
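The trade-off can be made concrete with simple arithmetic. Assuming n samples split into K folds of (nearly) equal size, each model is trained on about (K - 1)/K of the data, and K models must be trained in total:

```latex
\begin{aligned}
n_{\text{train}} &\approx \frac{K-1}{K}\, n, \qquad \text{number of fits} = K \\
K = 5 &: \quad n_{\text{train}} \approx 0.8\, n, \quad 5 \text{ fits} \\
K = 10 &: \quad n_{\text{train}} \approx 0.9\, n, \quad 10 \text{ fits} \\
K = n &: \quad n_{\text{train}} = n - 1, \quad n \text{ fits}
\end{aligned}
```

Moving from K = 5 to K = 10 adds only 10 percentage points of training data per model but doubles the number of fits.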

Leave-One-Out Cross-Validation (LOOCV)

LOOCV is a specific case of K-fold cross-validation where K equals the number of data points in the dataset. In each iteration, a single data point is held out as the test set, and the model is trained on all the remaining data points. This process is repeated for each data point.

Because each model is trained on n - 1 of the n data points, LOOCV gives a nearly unbiased estimate of the model's performance, although that estimate can have high variance because the n training sets are almost identical. LOOCV is also computationally expensive for large datasets, since it requires training the model n times, once for each data point.
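A minimal LOOCV sketch follows. To keep it self-contained, it uses a one-nearest-neighbor classifier on one-dimensional data as a stand-in model; the model choice, the function names, and the toy data are assumptions for illustration only.

```python
from typing import Sequence


def nearest_neighbor_predict(train_X: Sequence[float], train_y: Sequence[int],
                             x: float) -> int:
    """Predict the label of x from its single nearest training point (1-NN)."""
    best = min(range(len(train_X)), key=lambda i: abs(train_X[i] - x))
    return train_y[best]


def loocv_accuracy(X: Sequence[float], y: Sequence[int]) -> float:
    """Leave-one-out: for each of the n points, fit on the other n-1 and test on it."""
    n = len(X)
    correct = 0
    for i in range(n):
        train_X = [X[j] for j in range(n) if j != i]  # all points except i
        train_y = [y[j] for j in range(n) if j != i]
        if nearest_neighbor_predict(train_X, train_y, X[i]) == y[i]:
            correct += 1
    return correct / n


# Hypothetical toy data: the point at 6 (label 1) lies closer to the class-0 group.
X = [1, 2, 3, 6, 10, 11, 12]
y = [0, 0, 0, 1, 1, 1, 1]
print(f"LOOCV accuracy: {loocv_accuracy(X, y):.3f}")  # 6 of 7 points correct
```

The loop runs once per data point, which is exactly why LOOCV becomes expensive as n grows.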

Stratified K-Fold Cross-Validation

When a dataset has an imbalanced class distribution, standard K-fold cross-validation may produce test folds whose class proportions differ noticeably from the full dataset; a fold might even contain few or no examples of a rare class. Stratified K-fold cross-validation addresses this by ensuring that the proportion of each class in every fold mirrors its proportion in the entire dataset. This matters most for classification tasks in which one or more classes are rare.

Stratification does not change how the model learns, but it keeps the class mix of each test fold consistent, so per-fold scores are comparable and the overall estimate of the model's performance is more reliable, especially when one class is significantly underrepresented.
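One simple way to stratify is to shuffle the samples of each class separately and deal them round-robin across the K folds. The sketch below assumes that approach; `stratified_fold_assignment` is a hypothetical helper, and the 18/6 label split is invented for illustration.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def stratified_fold_assignment(labels: Sequence[Hashable], k: int,
                               seed: int = 0) -> List[int]:
    """Return a fold id (0..k-1) per sample so each fold keeps the class proportions."""
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds = [0] * len(labels)
    offset = 0
    for members in by_class.values():
        rng.shuffle(members)
        # Deal this class's samples round-robin, so every fold gets
        # floor(m/k) or ceil(m/k) samples of a class with m samples.
        for position, idx in enumerate(members):
            folds[idx] = (offset + position) % k
        offset += len(members)  # continue dealing where the previous class stopped
    return folds


# Hypothetical imbalanced labels: 18 majority (0) and 6 minority (1) samples.
labels = [0] * 18 + [1] * 6
folds = stratified_fold_assignment(labels, k=3)
for f in range(3):
    fold_labels = [lab for lab, fid in zip(labels, folds) if fid == f]
    print(f"fold {f}: class 0 = {fold_labels.count(0)}, class 1 = {fold_labels.count(1)}")
# Each fold gets 6 class-0 and 2 class-1 samples, matching the 3:1 overall ratio.
```

Each fold then serves once as the test set, exactly as in ordinary K-fold cross-validation.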

Cross-Validation for Time Series Data

Time series data presents unique challenges for cross-validation because the order of the observations matters. Standard K-fold cross-validation ignores this order: for most folds, the training set contains observations recorded after the test observations, which leaks future information and usually makes the estimate optimistic. Time-aware schemes such as rolling or sliding window cross-validation avoid this by always training on the past and testing on the future. In rolling window cross-validation, a test window moves forward through the data, and the model is trained on the data preceding the window and tested on the data within it.

In the sliding window variant, the training set is limited to a fixed number of the most recent observations before the test window, so consecutive training windows overlap as the window advances. This provides a more continuous view of how the model's performance changes over time. These techniques are essential for evaluating models designed to forecast future values in time series data.
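A minimal sketch of both schemes is shown below as a single generator of chronological splits. It assumes the observations are already sorted by time; `time_series_splits` and its parameters are hypothetical names. With `max_train_size=None` the training set grows to include all earlier observations, and with an integer it becomes a fixed-size window that slides forward.

```python
from typing import Iterator, List, Optional, Tuple


def time_series_splits(n_samples: int, test_size: int, min_train_size: int,
                       max_train_size: Optional[int] = None
                       ) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield chronological (train, test) index lists; training always precedes testing."""
    if test_size < 1 or min_train_size < 1:
        raise ValueError("test_size and min_train_size must be positive")
    test_start = min_train_size
    while test_start + test_size <= n_samples:
        if max_train_size is None:
            train_start = 0  # growing window: all observations before the test block
        else:
            train_start = max(0, test_start - max_train_size)  # fixed-size window
        yield (list(range(train_start, test_start)),
               list(range(test_start, test_start + test_size)))
        test_start += test_size  # advance the test window by one block


for train, test in time_series_splits(10, test_size=2, min_train_size=4, max_train_size=4):
    print(f"train {train[0]}..{train[-1]} -> test {test}")
# train 0..3 -> test [4, 5]
# train 2..5 -> test [6, 7]
# train 4..7 -> test [8, 9]
```

Note that the fixed-size training windows overlap (indices 2 to 5 and 4 to 7 share two observations), and in every split the largest training index is smaller than the smallest test index.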

Choosing the Right Cross-Validation Technique

The optimal cross-validation technique depends on the specific characteristics of the dataset and the model being evaluated. Factors like dataset size, class distribution, temporal dependencies, and computational resources should be considered when making the selection. Understanding the strengths and limitations of each technique is crucial for obtaining reliable and meaningful results.

Choosing the method carefully helps ensure that the evaluation accurately reflects the model's ability to generalize to unseen data, contributing to the development of more robust and effective machine learning models.