The Imperative for AI Governance in a Fair Society

The Ethical Considerations of AI

Artificial intelligence (AI) systems, while offering remarkable potential, also present significant ethical challenges. As AI algorithms become more sophisticated and integrated into various aspects of our lives, it becomes increasingly crucial to consider the potential for bias, discrimination, and unintended consequences. Addressing these ethical concerns is paramount to ensuring that AI systems are developed and deployed in a way that benefits all members of society, promoting fairness and avoiding harm.

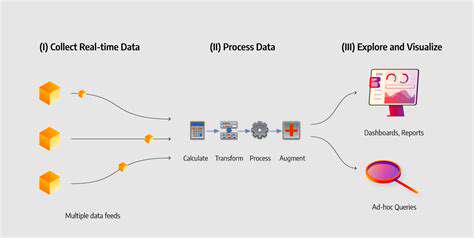

Bias embedded in training data can lead to discriminatory outcomes in areas such as loan applications, hiring, and criminal justice. Developing robust mechanisms for identifying and mitigating bias in AI models is essential to building trust and ensuring equitable outcomes. This requires careful consideration of data sources and algorithm design, along with ongoing monitoring and evaluation of AI systems in operation. Furthermore, transparency about how AI algorithms work is vital for fostering public understanding and accountability.
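As one illustration of what identifying bias can look like in practice, the following minimal sketch compares approval rates across groups and reports the ratio of the lowest rate to the highest. The data, group labels, and function names are hypothetical, and the 0.8 threshold mentioned in the comment is a commonly cited rule of thumb rather than a standard this article prescribes.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False. Group labels are hypothetical.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group approval rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved, so there is no disparity to measure
    return min(rates.values()) / highest


# Made-up loan decisions, for illustration only.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
# A ratio well below 1.0 (0.8 is a common rule of thumb) suggests a closer look.
print(rates, round(ratio, 2))
```

A single ratio like this is only a starting point. It says nothing about why the rates differ, so it complements rather than replaces the review of data sources and algorithm design described above.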

Establishing Clear Regulatory Frameworks

The rapid advancement of AI necessitates a proactive approach to establishing clear and comprehensive regulatory frameworks. These frameworks should not stifle innovation, but rather provide a safety net to mitigate risks and ensure responsible development and deployment of AI technologies. Regulations should address issues like data privacy, algorithmic transparency, accountability for AI-driven decisions, and potential misuse or unintended consequences.

A key aspect of these regulatory frameworks is the need for clear definitions and standards for what constitutes responsible AI development. This includes establishing guidelines for data collection, processing, and use, as well as standards for algorithm design and evaluation. Furthermore, mechanisms for oversight and enforcement are crucial to ensure that regulations are effectively implemented and that AI systems are used ethically and responsibly.

Promoting Public Engagement and Education

To foster a fair and just society in the age of AI, it's critical to promote public engagement and education. This means building a wider understanding of how AI works, its potential benefits and risks, and the role that individuals can play in shaping its future. Public forums, educational initiatives, and accessible resources can empower citizens to become informed participants in the ongoing conversation about AI governance.

Open dialogue between policymakers, technologists, ethicists, and the public is essential to ensure that AI development aligns with societal values and priorities. By promoting public understanding and participation, we can build trust and ensure that AI systems are deployed in a way that benefits all members of society. This requires actively engaging diverse perspectives and fostering a culture of ethical reflection and debate surrounding AI.

Promoting Inclusivity and Accessibility in AI Development

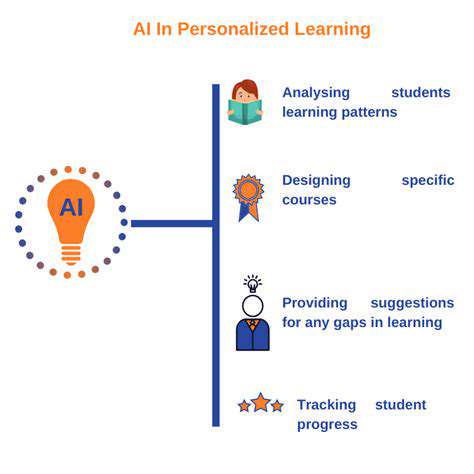

Defining Inclusivity in AI

Inclusivity in AI development extends beyond simply avoiding bias. It necessitates a conscious effort to understand and address the diverse needs and perspectives of all potential users and stakeholders. This involves proactively considering the varied backgrounds, experiences, and abilities of people who will interact with AI systems, from those who design and build the systems to those who use them in their daily lives. A truly inclusive approach recognizes that different individuals and groups might have varying levels of access to technology, varying levels of technical proficiency, and differing cultural norms.

Addressing Algorithmic Bias

Algorithmic bias is a significant concern in AI development. Biased algorithms can perpetuate and even amplify existing societal inequalities. For example, facial recognition systems trained on datasets made up predominantly of white faces may perform poorly on individuals with darker skin tones. Identifying and mitigating these biases requires careful data analysis, diverse development teams, and rigorous testing procedures. Continuous monitoring and evaluation of AI systems are crucial to ensure fairness and equitable outcomes.
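The kind of testing described above often starts by breaking a model's accuracy down by group instead of reporting one overall figure. The sketch below shows that idea under stated assumptions: the records, group labels, and function names are invented for illustration and do not come from any real system or dataset.

```python
def accuracy_by_group(records):
    """Compute accuracy separately for each group.

    `records` is an iterable of (group, predicted, actual) triples.
    """
    correct = {}
    total = {}
    for group, predicted, actual in records:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (predicted == actual)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Made-up evaluation results, for illustration only.
results = [
    ("lighter", "match", "match"),
    ("lighter", "no_match", "no_match"),
    ("lighter", "match", "match"),
    ("lighter", "match", "match"),
    ("darker", "match", "no_match"),
    ("darker", "match", "match"),
    ("darker", "no_match", "match"),
    ("darker", "no_match", "no_match"),
]
per_group = accuracy_by_group(results)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

An overall accuracy of 75% on this sample would hide the fact that one group sees twice the error-free rate of the other, which is why per-group reporting is a useful habit in evaluation.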

Ensuring Accessibility for All

Accessibility in AI development means designing AI systems that can be used by people with disabilities. This includes considering factors like screen reader compatibility, alternative input methods, and clear and concise interfaces. By incorporating accessibility guidelines from the outset, AI systems can be more inclusive and usable for a wider range of users, ultimately benefiting society as a whole. Accessibility is not just a matter of compliance; it is a fundamental principle for creating responsible AI.

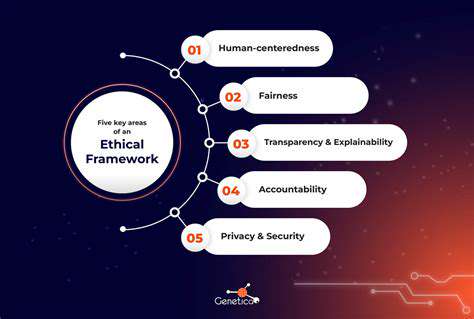

Promoting Ethical AI Development Practices

Ethical AI development practices are essential for building trustworthy and responsible AI systems. These practices encompass transparency, accountability, and fairness. Transparency in AI means making the decision-making processes of algorithms understandable and explainable. Accountability mechanisms are necessary to ensure that developers and users are held responsible for the outcomes of AI systems. Fairness and equity should be central tenets in the design and deployment of AI systems, ensuring that they do not perpetuate existing inequalities.

Fostering Collaboration and Diversity

Collaboration among diverse stakeholders is critical for promoting inclusivity and accessibility in AI. This means bringing together experts from different fields, such as computer science, the social sciences, and the humanities. Diverse teams can offer a wider range of perspectives and experiences, leading to more innovative and impactful AI solutions. By actively seeking out diverse contributors, developers can better understand the needs and challenges faced by different communities. This collaboration can help create AI that is truly beneficial for all.

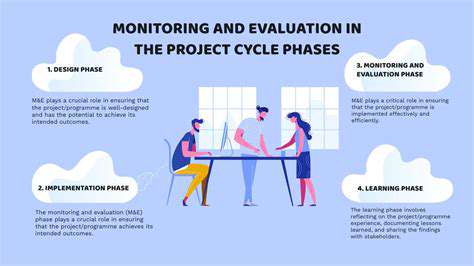

Evaluating and Monitoring AI Systems

Continuous evaluation and monitoring of AI systems are crucial to ensure that they are operating as intended and are not causing harm. Regular assessments of AI systems' performance and impact are necessary to detect and address potential biases, inaccuracies, or unintended consequences. These evaluations should consider the diverse ways in which AI systems might interact with users and society, and should be conducted with the participation of affected communities.

The Role of Regulation and Policy

Appropriate regulations and policies play a vital role in shaping the responsible development and deployment of AI. They can help establish clear standards for AI development, testing, and use, promoting accountability and inclusivity. Policymakers should work with experts to identify and address the risks associated with AI while fostering innovation that benefits all members of society. Well-designed regulatory frameworks are crucial to creating a future where AI benefits everyone.